Cursor-driven development

As regular readers of my blog already know, I have been using VS Code with the Continue plugin and local Ollama models for code completion and other LLM-assisted development work.

I now wanted to use Cursor to take that up a level. My team recently had a hackathon where we used Cursor and Gemini, and having seen how powerful it is in action, I wanted to play with it more.

There was a small hobby project I hadn’t got round to, and I was keen to try development purely by prompting, i.e. without writing a single line of code myself, going from requirements to a working prototype!

This post describes my adventures with Cursor to finally get that home project I’d been putting off started.

On installing Cursor, you are given a choice of which editor/IDE to integrate it with. Yes, vim is there, but as you knew I would, I chose VS Code.

Then I was asked which VS Code extensions I wanted to move over. It complained about Continue, so I elected not to move that across. I still had a non-Cursor version of VS Code I could start up when I wanted to: from the terminal I use “code” for plain VS Code and “cursor” to start the Cursor setup.

However, it wasn’t immediately clear how to get started with the AI features. There’s a welcome set of tasks: you hover over each one and see a small screen capture showing how to complete it. It took me a few minutes to figure out how to actually complete each task! tl;dr: just get started and it ticks the actions off for you.

My Mac is already set up for Python and Node, and SQLite ships with macOS by default, so I was good to go.

I had created a local git folder for this with a README that said:

A containerised web solution that catalogues DVDs, CDs and Blu-ray discs.

The catalogue records title, genre, media type and location.

The solution will integrate with a local LLM using Ollama to provide an NLP interface to query the structured data.

SQLite is the database used to store the data.

The solution requires the ability to add new media and genre types.

The web front end should be written in Node.

The majority of the data is added to the database via another containerised solution written in Python, which uses a local vision LLM managed by Ollama to identify media in an uploaded image that is labelled with the location.

The identified media are added to the database, categorising each identified media item appropriately.
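The vision step in the README isn’t covered in this post. Purely as an illustration, here is a minimal Python sketch of how the image-processing service might ask a local vision model for the media in a labelled photo. It relies on Ollama’s `/api/generate` endpoint accepting a list of base64-encoded `images` for multimodal models; the `llava` model, the `OLLAMA_URL`/`VISION_MODEL` environment variables and the JSON reply format requested in the prompt are all assumptions, not part of the project.

```python
import base64
import json
import os
import urllib.request

# Hypothetical settings: env var names and the "llava" default are assumptions.
OLLAMA_URL = os.environ.get("OLLAMA_URL", "http://localhost:11434")
VISION_MODEL = os.environ.get("VISION_MODEL", "llava")

PROMPT = (
    "List every DVD, CD or Blu-ray visible in this image as a JSON array of "
    'objects with the keys "title", "genre" and "media_type". Reply with JSON only.'
)


def build_request(image_bytes: bytes, model: str = VISION_MODEL) -> dict:
    """Build an /api/generate payload that carries one base64-encoded image."""
    return {
        "model": model,
        "prompt": PROMPT,
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }


def _text(item: dict, key: str, default: str) -> str:
    """Return a trimmed string field, or the default if it is missing or blank."""
    value = item.get(key)
    return value.strip() if isinstance(value, str) and value.strip() else default


def parse_media(reply: str, location: str) -> list:
    """Turn the model's JSON reply into records tagged with the photo's location."""
    records = []
    for item in json.loads(reply):
        if not isinstance(item, dict):
            continue  # ignore anything that isn't an object
        title = _text(item, "title", "")
        if not title:
            continue  # skip entries the model could not name
        records.append({
            "title": title,
            "genre": _text(item, "genre", "Unknown"),
            "media_type": _text(item, "media_type", "Unknown"),
            "location": location,
        })
    return records


def identify_media(image_path: str, location: str) -> list:
    """Send one labelled photo to the local vision model and parse its answer."""
    with open(image_path, "rb") as f:
        payload = json.dumps(build_request(f.read())).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return parse_media(json.load(resp)["response"], location)
```

Models don’t always return clean JSON, so real code would likely need more defensive parsing (or Ollama’s `format` option) before the records are written to the catalogue.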

Cursor was using Claude 3.5 Sonnet. You can swap that out, but I hadn’t tried Claude before, so that was another thing I could try out.

I then prompted Cursor with the following:

Convert this to a set of requirements that can be used to develop the code required

It gave me the following response:

I'll break this down into functional and non-functional requirements:

# Functional Requirements

1. Media Management

- System must store media items with the following attributes:

  - Title

  - Genre

  - Media Type (DVD, CD, Blu-ray)

  - Location

- System must support adding new media items

- System must support adding new genre types

- System must support adding new media types

2. Image Processing

- System must process uploaded images of media collections

- System must identify individual media items from images

- System must extract media information from images

- System must associate location information with uploaded images

- System must automatically categorize identified media

3. Query Interface

- System must provide natural language query capabilities

- System must allow users to search media catalogue

- System must return relevant media information based on queries

4. User Interface

- System must provide a web-based interface

- System must allow manual media entry

- System must support image uploads

- System must display catalogue information

# Non-Functional Requirements

1. Architecture

- Solution must be containerized

- System must use SQLite for data storage

- Frontend must be implemented in Node.js

- Image processing component must be implemented in Python

- System must integrate with Ollama for LLM capabilities

- System must use local LLM for natural language processing

- System must use local vision LLM for image processing

2. Integration

- Components must communicate effectively between containers

- System must integrate with local Ollama instance

- Database must be accessible to all components

3. Performance

- System must handle image processing efficiently

- Database queries must perform adequately for catalogue size

4. Scalability

- Database must support growing media collection

- System must handle multiple concurrent users

This requirements specification provides a foundation for development planning and implementation.

I then prompted with:

Create the necessary code in the local repo for the media management functionality

I’m not going to cut and paste it all here, but I was given a set of commands to create the database and set up the schema, plus the code for the media management functionality. A few moments later, after a bit of copying and pasting, I was there. I had to work with it to fix a few issues, but it was so much easier than it would have been even with my Continue/Ollama setup.
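Cursor’s generated code isn’t reproduced here, and it was Node rather than Python. Purely to illustrate the kind of schema the requirements imply, here is a minimal Python sketch using the standard `sqlite3` module: genres and media types live in their own lookup tables so new ones can be added, and each media item references them. The table and column names, the helper functions and the `catalogue.db` file name are hypothetical.

```python
import sqlite3

# Hypothetical schema: lookup tables for genres and media types, plus the media itself.
SCHEMA = """
CREATE TABLE IF NOT EXISTS genres (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS media_types (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS media (
    id            INTEGER PRIMARY KEY,
    title         TEXT NOT NULL,
    genre_id      INTEGER NOT NULL REFERENCES genres(id),
    media_type_id INTEGER NOT NULL REFERENCES media_types(id),
    location      TEXT NOT NULL
);
"""


def connect(path: str = "catalogue.db") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
    conn.executescript(SCHEMA)
    return conn


def add_lookup(conn: sqlite3.Connection, table: str, name: str) -> int:
    """Add a genre or media type if it is new, and return its id either way."""
    if table not in ("genres", "media_types"):
        raise ValueError(f"unknown lookup table: {table}")
    conn.execute(f"INSERT OR IGNORE INTO {table} (name) VALUES (?)", (name,))
    return conn.execute(f"SELECT id FROM {table} WHERE name = ?", (name,)).fetchone()[0]


def add_media(conn: sqlite3.Connection, title: str, genre: str,
              media_type: str, location: str) -> int:
    """Insert one media item, creating its genre and media type on the fly."""
    genre_id = add_lookup(conn, "genres", genre)
    type_id = add_lookup(conn, "media_types", media_type)
    cur = conn.execute(
        "INSERT INTO media (title, genre_id, media_type_id, location) VALUES (?, ?, ?, ?)",
        (title, genre_id, type_id, location),
    )
    conn.commit()
    return cur.lastrowid


def list_media(conn: sqlite3.Connection) -> list:
    """Return (title, genre, media type, location) rows sorted by title."""
    return conn.execute(
        """SELECT m.title, g.name, t.name, m.location
           FROM media m
           JOIN genres g ON g.id = m.genre_id
           JOIN media_types t ON t.id = m.media_type_id
           ORDER BY m.title"""
    ).fetchall()
```

Keeping genres and media types in lookup tables means “add new genre and media types” is a simple insert rather than a schema change.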

As a halfway house towards the NLP interface, I also prompted:

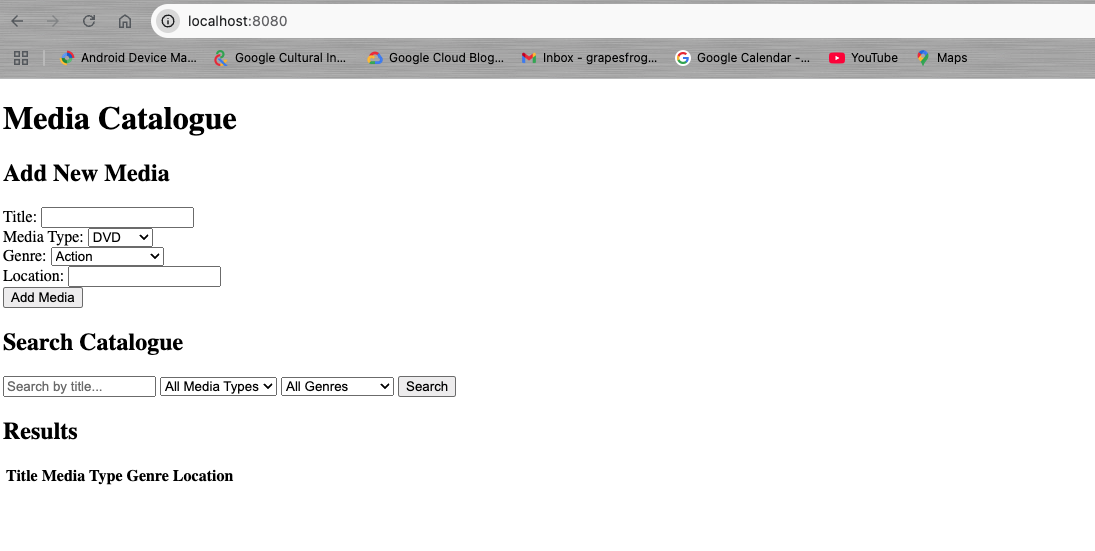

provide the code to create a web front end to add data and query the catalogue for testing.

It gave me instructions to create the web front end, and then it took just two simple commands to get going.

Start the back end running:

```shell
cd media-catalogue/backend
npm run dev
```

Start the front end:

```shell
cd media-catalogue/frontend
python3 -m http.server 8080
```

I then had a functional cataloguing application without writing a single line of code myself. I was the copy-and-paste operator in this pair programming exercise.

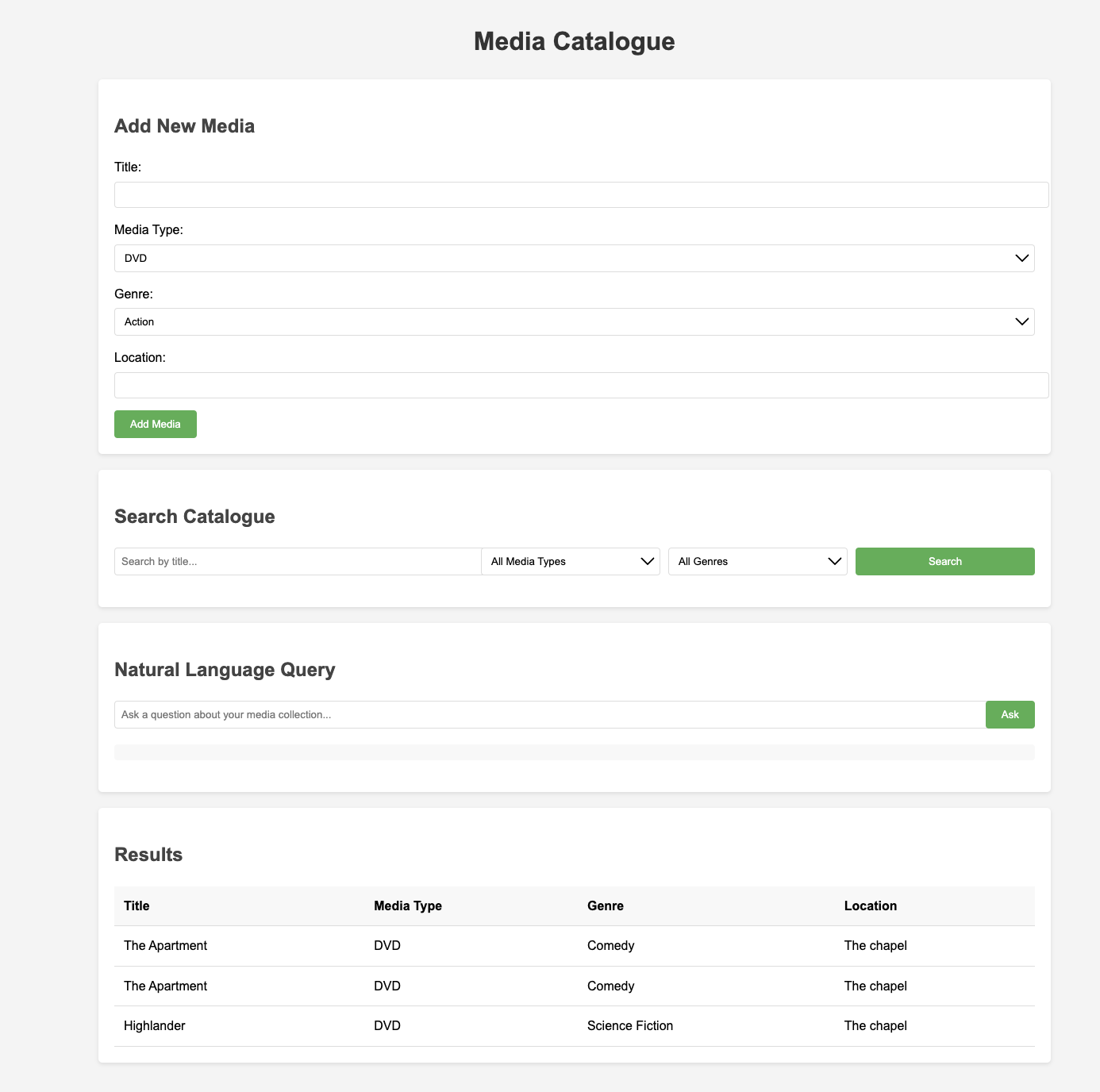

I then added the NLP interface via a few more prompts and a bit more copying and pasting. It chose llama2, but when I prompted appropriately it also provided code to make the choice of local model more flexible. Being able to update my code just by clicking Apply on the file that needs adjusting makes it all so easy.
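The NLP code Cursor produced isn’t shown here either. As a hedged sketch of the general approach, here is a Python version that describes the schema to a local model, calls Ollama’s non-streaming `/api/generate` endpoint, and lets an environment variable choose the model, falling back to llama2. The `NLP_MODEL` and `OLLAMA_URL` variable names, the schema hint and the prompt wording are hypothetical.

```python
import json
import os
import re
import urllib.request

# Hypothetical settings: the env var names and defaults are assumptions.
OLLAMA_URL = os.environ.get("OLLAMA_URL", "http://localhost:11434")
DEFAULT_MODEL = "llama2"

# A cut-down, illustrative description of the catalogue tables for the prompt.
SCHEMA_HINT = (
    "genres(id, name)\n"
    "media_types(id, name)\n"
    "media(id, title, genre_id, media_type_id, location)"
)


def pick_model() -> str:
    """Use NLP_MODEL from the environment if set, otherwise fall back to llama2."""
    return os.environ.get("NLP_MODEL", DEFAULT_MODEL)


def build_prompt(question: str) -> str:
    return (
        "Translate the question into one SQLite SELECT statement.\n"
        f"Tables:\n{SCHEMA_HINT}\n"
        f"Question: {question}\n"
        "Reply with the SQL only."
    )


def extract_sql(reply: str) -> str:
    """Strip an optional markdown code fence and trailing semicolon from the reply."""
    match = re.search(r"`{3}(?:sql)?\s*(.*?)`{3}", reply, re.DOTALL | re.IGNORECASE)
    sql = match.group(1) if match else reply
    return sql.strip().rstrip(";").strip()


def question_to_sql(question: str) -> str:
    """Ask the local Ollama server for SQL answering a natural-language question."""
    payload = json.dumps({
        "model": pick_model(),
        "prompt": build_prompt(question),
        "stream": False,
    }).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return extract_sql(json.load(resp)["response"])
```

Swapping models then becomes an environment change (for example `NLP_MODEL=mistral`) rather than a code change.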

I hit an error when starting the front end; it was a typo, which Claude helpfully pointed out. After fixing that, my front end looked like this:

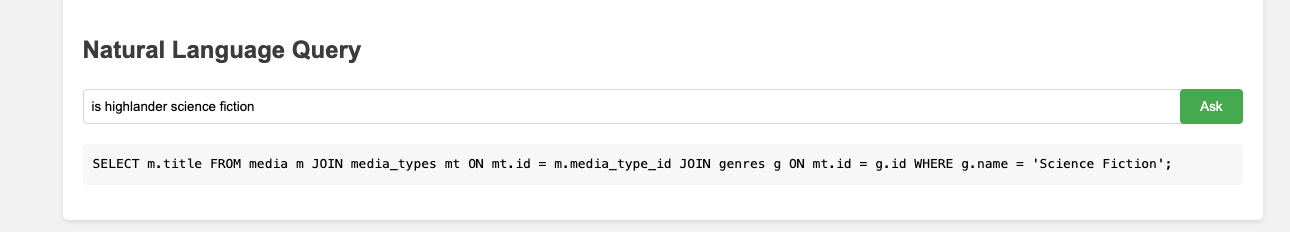

The NLP interface needs more debugging, but it does work, producing the SQL for the natural-language queries I ask it!
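Running SQL that an LLM wrote deserves some caution. One possible safeguard, sketched here in Python as an assumption rather than something the project does, is to accept only SELECT/WITH statements and to open the SQLite file read-only via a `mode=ro` URI, so anything that tries to write fails inside SQLite itself. The `run_generated_sql` helper and its row limit are hypothetical.

```python
import sqlite3


def run_generated_sql(db_path: str, sql: str, limit: int = 100) -> list:
    """Execute LLM-generated SQL against a read-only connection (hypothetical helper)."""
    statement = sql.strip().rstrip(";").strip()
    if not statement.lower().startswith(("select", "with")):
        raise ValueError("only SELECT queries are allowed")
    # mode=ro opens the database read-only, so a write (e.g. WITH ... DELETE) raises an error.
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        # execute() runs a single statement and raises if given several at once.
        return conn.execute(statement).fetchmany(limit)
    finally:
        conn.close()
```

The prefix check gives a friendly error for obvious cases, while the read-only connection is the real backstop.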

But I wanted to post this before I start winding down for Xmas, turning it around in a few hours from requirements, through a working prototype, to blog post.

I’m kinda happy that I actually had to do a considerable amount of coding as a day job once upon a time, as otherwise I wouldn’t appreciate the power of what Cursor gives you compared with what you had to do before. I also appreciate that it’s because of what came before that I can create a demonstrable MVP in a few hours. Sure, I need to add some extra code, such as preventing duplicates and better error handling, but the framework is all here, and all it took was me and Claude on a lazy Sunday afternoon! On top of that, I chose Node for the media management functionality, which I am not that comfortable with (the whole routes thing always gets me confused for some reason!), so I was left a little open-jawed. I still need to do the vision part of the project, and when I get round to that I’ll upload all the code to GitHub.
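On preventing duplicates, one common SQLite approach is a UNIQUE constraint combined with INSERT OR IGNORE. The minimal Python sketch below uses a simplified, hypothetical media table (media type stored as plain text) and treats an item as a duplicate when the title matches case-insensitively and the media type and location also match; which columns should define a duplicate is an assumption.

```python
import sqlite3


def create_media_table(conn: sqlite3.Connection) -> None:
    """Simplified, hypothetical table; NOCASE makes the title comparison case-insensitive."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS media (
               id         INTEGER PRIMARY KEY,
               title      TEXT NOT NULL COLLATE NOCASE,
               media_type TEXT NOT NULL,
               location   TEXT NOT NULL,
               UNIQUE (title, media_type, location)
           )"""
    )


def add_media_once(conn: sqlite3.Connection, title: str,
                   media_type: str, location: str) -> bool:
    """Insert an item unless it is already catalogued; return True if a row was added."""
    before = conn.total_changes
    conn.execute(
        "INSERT OR IGNORE INTO media (title, media_type, location) VALUES (?, ?, ?)",
        (title.strip(), media_type, location),
    )
    conn.commit()
    return conn.total_changes > before
```

Comparing `total_changes` before and after the insert tells the caller whether a row was actually added, which a front end could use to report “already catalogued”.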

Observations

- You still need some coding experience, as you need to guide the AI. We used another tool at the hackathon where debugging wasn’t a thing, and as a result we could only get so far with it.

- Using Cursor, you really can get something up and running in a few hours, as long as you can debug the code and guide it to fix the issues that inevitably arise: human in the loop.

- Open your project folder before you start, and figure out how to carry chat sessions over into new sessions.

- Focus on getting the code working before getting it to work as a Docker container! I wasted about an hour on Docker-related issues: the code worked out of the gate, but I went down the “get this thing working with Docker first” rabbit hole!

- If the privacy of your codebase concerns you, you may want to read Cursor’s privacy statement, as you are sending your prompts to an internet-hosted LLM, unlike my Continue + Ollama setup where everything is local.

- It uses fewer local resources (my Mac is underpowered for most local LLM dev work unless I use the smaller models); using Cursor, I barely noticed any slowdown 🙂

- I would pay for this if I were a hard-core developer and had no significant privacy concerns.

- I will more than likely use this setup for my hobby development projects from now on.