Cursor driven development Part II

So, as promised, here’s the rest of my journey creating a cataloguing application through prompt-driven development and, crucially, without taking over and writing any actual code myself.

I stuck to using Cursor (with claude-3.5-sonnet): prompting, cutting and pasting, and guiding it when there were issues.

I added an admin page since the first post on this exercise.

And yes, in less than a day’s worth of work in total, I had an application that met my original requirements and most of my extended requirements.

My tl;dr requirements:

- Ability to load data generated from a photo passed to Gemini into a SQLite database

- Generating the code for the schema for the SQLite database

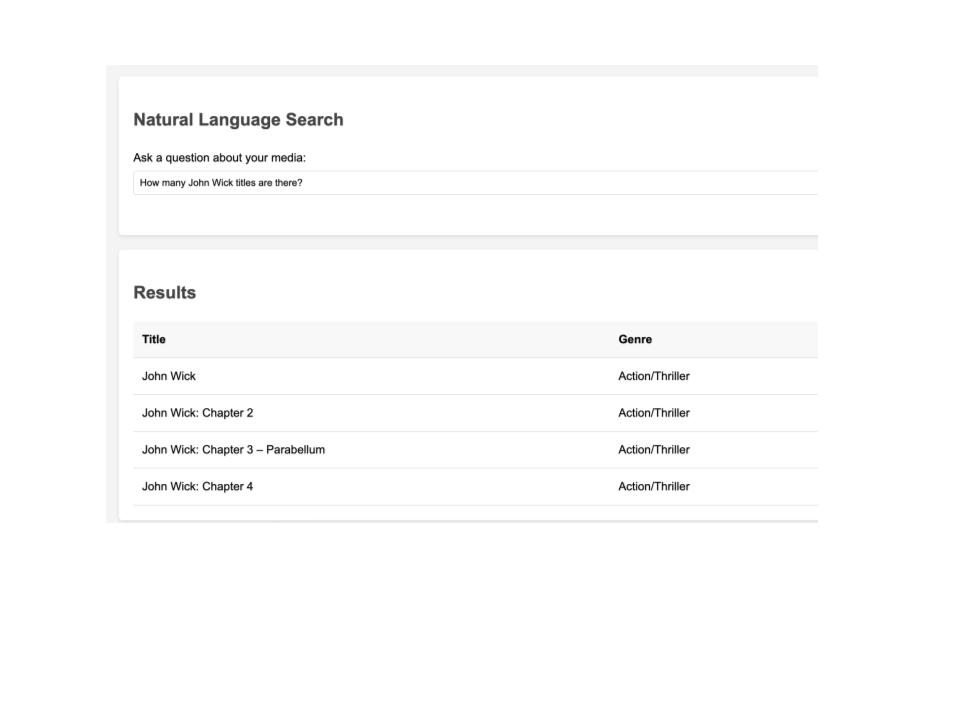

- Generating the front-end app so that you can query via direct search or an NLP interface

- Generating an admin interface that allows you to delete individual entries or all entries

Issues along the way included generated code inadvertently removing the NLP interface and the form not working at all. I couldn’t capture errors, so I had to get it to introduce some logging output. It then fixed the NLP interface, but in doing so removed the box that showed the SQL the NLP query generated in the first version.
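To make "some logging output" concrete, here is a minimal sketch of the kind of logging that helps in this situation. It is not the code Cursor generated: `requestLogger` and `errorLogger` are hypothetical names, written in the usual Express middleware shape, and the wiring shown in the trailing comment assumes an Express app with a router mounted under `/api`.

```javascript
// Hypothetical Express-style middleware; names are illustrative, not from the repo.

// Logs every incoming request so you can see which route was actually hit.
function requestLogger(req, res, next) {
  console.log(`[${new Date().toISOString()}] ${req.method} ${req.url}`);
  next();
}

// Logs the full error on the server and replies with a JSON body,
// so the frontend never has to parse an HTML error page.
function errorLogger(err, req, res, next) {
  console.error(`Error in ${req.method} ${req.url}:`, err.stack || err);
  res.status(500).json({ error: err.message || 'Internal server error' });
}

// Assumed wiring in an Express app:
//   app.use(requestLogger);   // before the routes
//   app.use('/api', router);
//   app.use(errorLogger);     // after the routes
```

With output like this in the terminal, there is something specific to paste back into the prompt instead of "it doesn’t work".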

Despite introducing errors when fixing issues, it was able to correct the mistakes it introduced with a set of prompts.

For example, after it generated fixes to the SQLite integration, the buttons on the index.html page broke due to cascading errors. A series of prompts was then required to get it to generate fixes for the code it broke.

Here’s a walkthrough of one such case: using the model to fix a bug that the model itself introduced.

I prompted with

The index.html buttons no longer work

It responded with the following and gave me the code to apply:

Let's fix the index.html functionality. The issue might be related to the event handlers. Here's the updated index.html with the correct button handling:

However, the fixes also removed the NLP search functionality, which I guess fixed the problem of it not working! So, another prompt:

This update removed the LLM search and the form still does not work

It responded with

You're right. Let's restore the LLM search functionality and fix the form. Here's the corrected version:

No acknowledgment of the fact that its own responses broke everything! But hey, it’s an LLM.

But its fixes didn’t work at all, so another prompt was required, and you get the picture.

Giving it error messages worked well. For example, I pasted in this:

following errors: Failed to load resource: the server responded with a status of 404 (File not found) Error loading media types: SyntaxError: Unexpected token '<', "<!DOCTYPE "... is not valid JSON

loadMediaTypes @ script.js:24 and [Deprecation] Listener added for a 'DOMSubtreeModified' mutation event. Support for this event type has been removed, and this event will no longer be fired.

It was able to provide code to fix them.

And I loved how it explains what the code it provides accomplishes, like this summary at the end of the code it gave me in response to the above prompt:

Key changes:

1. Removed deprecated DOMSubtreeModified event

2. Simplified the media loading process

3. Added better error handling for fetch requests

4. Updated the genre filter functionality

5. Removed references to media types

6. Added more console logging for debugging

7. Improved error messages

The 404 errors should now be resolved, and the JSON parsing errors should be fixed. Try refreshing your page and check the browser console (F12) for any remaining errors.
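For anyone who hasn’t met that `Unexpected token '<'` error before: it usually means the server answered with an HTML page (here, a 404 page) and the frontend tried to parse it as JSON. Below is a rough sketch of guarding against that. It is not the fix Cursor produced; `parseJsonBody` and `fetchJson` are hypothetical helper names.

```javascript
// Hypothetical helpers; not the code Cursor generated.

// Turns a raw HTTP response into parsed JSON, or throws an error that
// names the real problem instead of "Unexpected token '<'".
function parseJsonBody(status, contentType, bodyText) {
  if (status < 200 || status >= 300) {
    throw new Error(`Request failed with status ${status}`);
  }
  if (!(contentType || '').includes('application/json')) {
    throw new Error(`Expected JSON but got "${contentType}"`);
  }
  return JSON.parse(bodyText);
}

// Thin wrapper around fetch() that reads the body as text first,
// so an HTML error page never reaches JSON.parse.
async function fetchJson(url, options) {
  const response = await fetch(url, options);
  const bodyText = await response.text();
  return parseJsonBody(response.status, response.headers.get('content-type'), bodyText);
}
```

The status check runs first, so a missing route shows up as "Request failed with status 404" in the console rather than as a JSON syntax error.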

Once the NLP functionality was fixed, a little troubleshooting to figure out why nothing was being returned meant I had to guide it to use Ollama with the model. Anyway, the tl;dr is I have a web app that meets my initial requirements as well as the additional admin functionality. I’m particularly impressed with the NLP -> SQL functionality.

For the final part of the exercise, I hadn’t yet tried getting the LLM to explore the code base as such (and yes, I know it generated all the code, but humour me), so I prompted with:
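To give a feel for what an NLP -> SQL step can look like, here is a minimal sketch. It is not the generated code. The table layout is an assumption based on the `title`, `genre` and `location` fields in the architecture overview, `llama3` is a placeholder model name, and the helper names are hypothetical. The request goes to Ollama’s `/api/generate` endpoint on its default port with streaming turned off.

```javascript
// Sketch only: the table layout, model name, and helper names are assumptions.
const SCHEMA = 'CREATE TABLE media (id INTEGER PRIMARY KEY, title TEXT, genre TEXT, location TEXT);';

// Builds the prompt that asks the model to translate a question into SQL.
function buildSqlPrompt(question) {
  return [
    'You translate questions into SQLite queries.',
    `Schema: ${SCHEMA}`,
    'Reply with a single SELECT statement and nothing else.',
    `Question: ${question}`,
  ].join('\n');
}

// Pulls the SQL out of the model reply and refuses anything that is not
// a single SELECT, since model output should never be run blindly.
function extractSelect(reply) {
  const sql = reply.replace(/`{3}(?:sql)?/gi, '').trim().replace(/;\s*$/, '');
  if (!/^select\b/i.test(sql) || sql.includes(';')) {
    throw new Error(`Refusing to run generated SQL: ${sql}`);
  }
  return sql;
}

// Calls a local Ollama server (default port 11434) without streaming.
async function questionToSql(question, model = 'llama3') {
  const response = await fetch('http://localhost:11434/api/generate', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ model, prompt: buildSqlPrompt(question), stream: false }),
  });
  const data = await response.json();
  return extractSelect(data.response);
}
```

The `extractSelect` check is deliberately strict: it rejects anything containing a second statement, at the cost of also rejecting a legitimate query with a semicolon inside a string literal.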

Explain the relationship between the backend and frontend

I’ve added that output at the end of this post. I could probably have used a different prompt.

A few more observations to add to my initial post

- To get a prototype up and running fast, this approach absolutely works, but as I mentioned in my first post, you do need some coding experience to have great success. Otherwise you will spend a long time figuring out what needs fixing and how to guide it to fix those issues.

- As your app becomes more complex, you’re going to need more in-depth development skills. By adding an admin page and changing the schema I ended up with cascading errors that all needed to be fixed. I do code, so I was able to figure out what needed to be done and ask it to introduce some code to surface errors, so I could help it troubleshoot by providing information that guided it to the right outcomes.

- Work on smaller, more focused units of functionality at a time (kind of what you’d do anyway, I hope!).

- You need to trust the model to get to the right outcome and figure out when you need to jump in to course correct. It’s a bit like first getting used to Google Maps for navigation: you have to trust it to get you there, but also use your intuition about when to step in.

- Get it to explain the code or generate an architecture walkthrough as the last step. This will be invaluable for anyone who needs to pick up the code.

- A takeaway from this is to take a step back and figure out whether you actually need to generate an application or can just knit together the tools you already have. The project I chose would be more efficient if I just prompted from the phone -> CSV -> Google Sheets, tbh, but this was an academic exercise.

- I chose Node to see how well I could do using a language that isn’t my go-to, as the router stuff kinda does my head in. I have coded in Node before, so I knew enough to be dangerous, I guess, but it also meant I was far less tempted to take over and start typing away, which kept me to the criteria for this exercise.

Would I let anyone else use the app as is?

No, as it needs a few updates:

- Provide more feedback to the user that it’s doing something, like calling back to the model (I am using inspect to track what’s happening)

- Some controls to validate that the CSV has the right sort of data in the right columns. As is, it just rejects the file if the column titles are wrong.

- Tidying up the columns in the admin page

- Fixing the delete all button on the admin page, as that code isn’t there yet
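As an illustration of the CSV validation item, here is a minimal sketch of row-level checks. The column names are an assumption (taken from the `title`, `genre` and `location` fields in the architecture overview), `validateRows` is a hypothetical helper, and it expects rows that a CSV parser has already split into arrays of strings.

```javascript
// Assumed columns; the real CSV layout may differ.
const EXPECTED_COLUMNS = ['title', 'genre', 'location'];

// Checks already-parsed CSV rows (arrays of strings) and returns a list of
// human-readable problems instead of rejecting the whole file outright.
function validateRows(header, rows) {
  const errors = [];
  const normalised = header.map((name) => name.trim().toLowerCase());
  if (normalised.join(',') !== EXPECTED_COLUMNS.join(',')) {
    errors.push(`Header should be "${EXPECTED_COLUMNS.join(',')}"`);
    return errors; // column positions are unknown, so stop here
  }
  rows.forEach((row, index) => {
    const line = index + 2; // +1 for the header, +1 for 1-based counting
    if (row.length !== EXPECTED_COLUMNS.length) {
      errors.push(`Line ${line}: expected ${EXPECTED_COLUMNS.length} values, got ${row.length}`);
      return;
    }
    row.forEach((value, col) => {
      if (value.trim() === '') {
        errors.push(`Line ${line}: "${EXPECTED_COLUMNS[col]}" is empty`);
      }
    });
  });
  return errors;
}
```

Returning a list of problems with line numbers, rather than a single pass or fail, gives the user something they can actually fix in the spreadsheet.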

It does everything I need it to do and I’ll get around to getting it to complete the outstanding tasks at some point but today is not that day.

Here’s the repo and here’s the architecture overview it generated:

### Architecture Overview

Frontend (localhost:3000) [HTML/CSS/JavaScript] <----> Backend (localhost:8080) [Node.js/Express/SQLite]

### Communication Flow:

1. **Frontend (localhost:3000)**
   - Served by Python's simple HTTP server
   - Contains:
     - `index.html` - User interface
     - `script.js` - Handles user interactions and API calls
     - `styles.css` - Styling
   - Makes API requests to backend using `fetch()`

2. **Backend (localhost:8080)**
   - Runs on Node.js with Express
   - Handles:
     - Database operations (SQLite)
     - API endpoints
     - Business logic
     - LLM integration (Ollama)
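Because the two halves listed above are served from different ports, the browser treats them as different origins and blocks the frontend’s `fetch()` calls unless the backend opts in. The overview configures that with the `cors` package; purely as an illustration of what such middleware does, here is a hand-rolled sketch. `allowFrontend` is a hypothetical name and this is not code from the repo.

```javascript
// Hand-rolled stand-in for a small part of the `cors` package, for illustration only.
const ALLOWED_ORIGIN = 'http://localhost:3000';
const ALLOWED_METHODS = 'GET, POST, DELETE';

function allowFrontend(req, res, next) {
  // Only echo the header back for the one origin we trust.
  if (req.headers.origin === ALLOWED_ORIGIN) {
    res.setHeader('Access-Control-Allow-Origin', ALLOWED_ORIGIN);
    res.setHeader('Vary', 'Origin');
  }
  // Browsers send an OPTIONS "preflight" before JSON POSTs and DELETEs.
  if (req.method === 'OPTIONS') {
    res.setHeader('Access-Control-Allow-Methods', ALLOWED_METHODS);
    res.setHeader('Access-Control-Allow-Headers', 'Content-Type');
    res.statusCode = 204;
    res.end();
    return;
  }
  next();
}
```

In a real app the `cors` package is the more sensible choice; the sketch is only meant to show which response headers the browser is looking for.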

### Key Interactions:

1. **Adding Media**

```javascript
// Frontend: script.js
fetch(`${API_BASE_URL}/media`, {
  method: 'POST',
  // Tell the backend the body is JSON so it can be parsed
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ title, genre, location })
});

// ↓

// Backend: /api/media
router.post('/media', async (req, res) => {
  // Saves to SQLite database
});
```

2. **Searching Media**

```javascript
// Frontend: script.js
// encodeURIComponent keeps spaces and symbols in the search term from breaking the URL
fetch(`${API_BASE_URL}/media/search?title=${encodeURIComponent(searchTerm)}`);

// ↓

// Backend: /api/media/search
router.get('/media/search', async (req, res) => {
  // Queries SQLite database
});
```

3. **Natural Language Queries**

```javascript
// Frontend: script.js
fetch(`${API_BASE_URL}/llm-search`, {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ query })
});

// ↓

// Backend: /api/llm-search
router.post('/llm-search', async (req, res) => {
  // 1. Gets data from SQLite
  // 2. Sends to Ollama
  // 3. Returns results
});
```

### CORS (Cross-Origin Resource Sharing):

- Frontend and backend run on different ports
- Backend must allow requests from frontend:

```javascript
app.use(cors({
  origin: 'http://localhost:3000',
  methods: ['GET', 'POST', 'DELETE']
}));
```

### Data Flow:

1. User interacts with frontend
2. Frontend makes API request to backend
3. Backend processes request
4. Backend returns JSON response
5. Frontend updates UI with results

This separation allows for:

- Independent development/deployment
- Better scalability
- Clear separation of concerns
- Different technologies for different purposes