Data ecosystem buzzword bingo

The industry I am in suffers from the buzzword jargon merry-go-round, and there is a constant process of naming patterns and paradigms. I used to be a DBA so I have kept half an eye on the data ecosystem and what’s happening in that space. Unsurprisingly they are not avoiding the buzzword merry-go-round. I was particularly bemused by the term “data lake house”. I figured I wasn’t the only one bemused so here I am with another way too long post. I wanted to deconstruct the following terms:

- Data lake

- Data lake house

- Data mart

- Data mesh

The aim of this post is to explain the data ecosystem buzzwords in as simple a way as possible from my point of view. If you already know what they all mean then obviously this post isn’t for you but it was a nice exercise for me anyway and hopefully some of you will enjoy my journey through the data ecosystem hot words.

To provide a baseline let’s start with the definition of a data warehouse. The term has been in use for years; it’s not new. It originated in the 1980s and is attributed to researchers from IBM.

The definition of a data warehouse I use is that it is a central repository of data from one or more disparate sources. It stores current and historical data in a single store that is used for providing insights and creating analytical reports. The schema is defined up front and it has an integrated analysis engine.
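To make "schema defined up front" and "integrated analysis engine" a bit more concrete, here is a minimal sketch. It uses an in-memory SQLite database purely as a stand-in for a warehouse engine; the `sales` table, its columns and the `load_row` helper are made up for illustration and are not taken from any of the products mentioned in this post.

```python
import sqlite3

# In-memory SQLite stands in for a warehouse engine (an assumption for illustration).
conn = sqlite3.connect(":memory:")

# The schema is declared before any data arrives (schema-on-write).
conn.execute(
    """
    CREATE TABLE sales (
        sale_date TEXT NOT NULL,
        region    TEXT NOT NULL,
        amount    REAL NOT NULL CHECK (amount >= 0)
    )
    """
)


def load_row(row):
    """Try to load one row; return False if it violates the declared schema."""
    try:
        conn.execute("INSERT INTO sales VALUES (?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False


for row in [
    ("2023-01-05", "EMEA", 120.0),
    ("2023-01-06", "EMEA", 80.0),
    ("2023-01-06", "APAC", 200.0),
]:
    load_row(row)

# A row that does not fit the schema (missing region) is rejected at load time.
rejected = not load_row(("2023-01-07", None, 50.0))

# The same engine that stores the data also answers the analytical question.
totals = dict(conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region"))
```

The point of the sketch is only the ordering: the shape of the data is agreed first, bad rows are refused on the way in, and the query runs inside the store itself.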

See the following if you want different words to describe data warehouses

https://en.m.wikipedia.org/wiki/Data_warehouse

https://cloud.google.com/learn/what-is-a-data-warehouse

https://aws.amazon.com/data-warehouse/

https://docs.microsoft.com/en-us/azure/architecture/data-guide/relational-data/data-warehousing

And as you can see from reading the cloud-specific definitions from the three leading cloud providers, each has a product that fits the definition: BigQuery on Google Cloud, Redshift on AWS and Azure Synapse Analytics on Azure. There are also OSS solutions such as Hive (before you say “but Hadoop!”, that fits into the data lake bucket), managed services for which are available on each cloud provider.

I am aware that you can extend Data warehouse systems to use federated data stores but I am trying to keep things as simple as I can as this gets complicated & ambiguous fast.

From here on in I’m going to stick to Google cloud to illustrate with a cloud provider example if I need to. If your cloud of choice is one of the others the links above are a good place to start your journey.

I felt that starting with the definition of a data warehouse, which I was pretty familiar with, would help me orientate myself when trying to understand the buzzwords in the data space today.

Now unfortunately it’s less clear cut with the rest but I’m up for it.

Data lake - I think of it as storing data from disparate sources in a central store. Unlike a data warehouse, where the data is stored in a consistent format, the data is stored in its raw format or in whatever format the original processor or collector stored it. The following examples should help illustrate the difference between those two cases:

- The data is generated directly from sensors as CSV, JSON etc. That is the raw format.

- Data is entered straight into a form that is written into sheets or directly into a database. That data then needs to be copied to the central store in an appropriate format that is potentially usable without the originating data engine.

Each data file, when copied to the store, needs to have associated metadata and an ID so that it can be queried or processed at some point. You can’t just throw the data into the lake and hope to use it later if you don’t know what it is or where it is.
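As a rough sketch of what "associated metadata and an ID" could look like, the snippet below stores raw files untouched and appends one catalog entry per file. Everything here is an assumption for illustration: a local temp directory stands in for an object store bucket, and the `ingest` and `find` helpers and the catalog fields are hypothetical, not any product’s API.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

# A local temp directory stands in for an object store bucket (assumption).
lake_root = Path(tempfile.mkdtemp(prefix="lake_"))
catalog_path = lake_root / "_catalog.jsonl"


def ingest(name: str, payload: bytes, source: str, fmt: str) -> dict:
    """Store the raw bytes untouched and append a catalog entry describing them."""
    object_id = hashlib.sha256(payload).hexdigest()[:16]
    object_path = lake_root / "raw" / source / f"{object_id}_{name}"
    object_path.parent.mkdir(parents=True, exist_ok=True)
    object_path.write_bytes(payload)
    entry = {
        "id": object_id,
        "path": str(object_path),
        "source": source,
        "format": fmt,
        "ingested_at": datetime.now(timezone.utc).isoformat(),
    }
    with catalog_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry) + "\n")
    return entry


def find(**criteria) -> list:
    """Return the catalog entries whose fields match all the given criteria."""
    if not catalog_path.exists():
        return []
    with catalog_path.open(encoding="utf-8") as fh:
        entries = [json.loads(line) for line in fh]
    return [e for e in entries if all(e.get(k) == v for k, v in criteria.items())]


ingest("readings.csv", b"sensor,temp\na1,21.5\n", source="sensors", fmt="csv")
ingest("event.json", b'{"user": "u1", "action": "login"}', source="webapp", fmt="json")

# Later on, the catalog tells you what is in the lake and where it lives.
csv_objects = find(format="csv")
```

Without the catalog file the two objects would still be in the store, but nothing would tell you what they are, which is the first step towards a swamp.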

Typically the data is stored in an object store such as Cloud Storage or S3. Hadoop-based systems are often used as data lakes, but nowadays they tend to use object stores such as Google Cloud Storage (GCS) rather than HDFS, with Spark to process the data. Storage and the analytics engine are not integrated, but to be of any use you do need an analytic/processing engine. So my definition includes an analytic/processing engine that is not integrated with the storage system, unlike a data warehouse where the analytic engine is an integral part of the system.

The Wikipedia definition is less precise in comparison.

“A data lake is a system or repository of data stored in its natural/raw format,[1] usually object blobs or files. A data lake is usually a single store of data including raw copies of source system data, sensor data, social data etc.,[2] and transformed data used for tasks such as reporting, visualization, advanced analytics and machine learning. A data lake can include structured data from relational databases (rows and columns), semi-structured data (CSV, logs, XML, JSON), unstructured data (emails, documents, PDFs) and binary data (images, audio, video).”

The Wikipedia definition, particularly “structured data from relational databases”, is ambiguous and just leads to more questions:

Are you keeping deltas or copies?

I have to admit I am struggling to understand how or even why you would want to query a database file if you aren’t using the actual database engine to query it. Maybe it doesn’t mean that at all?

How long should you keep data before determining that you’re never actually going to use it? A year? 10 years?

Can you just reconstruct a copy of the data from the source as needed for data lakes?

Usually a single store? What defines “usually”: 8 out of 10 users, or what? You can see why I use my own definition.

I have no issues with a data lake architecture where you can swap bits in and out, and yes, even use federated data stores, although at that point just call it a data mesh, leave the data where it is and be done with it!

A key selling point of data lakes is that you can keep the data and not worry about how you may use it until you suddenly find a use for it. I get how in some use cases that is definitely useful, e.g. you want to train machine learning models to help predict a certain thing which may not have been considered when building out your data ecosystem.

You can definitely see how data lakes can quickly become a data swamp (a dumping ground for unorganised data), with your storage costs climbing and no obvious ROI as you’re keeping the data just in case! I guess it’s the data ecosystem equivalent of insurance.

I am not disputing the formal definition, but for me to be able to help with decision making I have to be more precise, hence my interpretation. You’re perfectly free to disagree with my definition! Oh, and a great use case for data lakes is as archives or backups for your data.

To try and see what a data lake is in practice let’s dig into Google Cloud’s Data lake offering.

It starts with a helpful page explaining what a Data lake is here

The definition of data lake it starts with is pretty standard “A data lake is a centralized repository designed to store, process, and secure large amounts of structured, semistructured, and unstructured data. It can store data in its native format and process any variety of it, ignoring size limits.”

It then uses this overview statement: " A data lake provides a scalable and secure platform that allows enterprises to: ingest any data from any system at any speed—even if the data comes from on-premises, cloud, or edge-computing systems; store any type or volume of data in full fidelity; process data in real time or batch mode; and analyze data using SQL, Python, R, or any other language, third-party data, or analytics application."

PS. Full fidelity just means that it fully reproduces the original production data set in every detail! (Don’t get me started on not keeping things simple and assuming everyone is a native English speaker!)

So when Google Cloud talks about data lakes it refers to the ecosystem around the central data store. That overview statement implies it also covers ingestion and a way to process and analyse the data that is stored. So it’s really a data lake architecture more than the core definition, which makes sense. My personal definition does not cover how you get the data into the store, nor does the Wikipedia definition. Obviously you need a way to get the data into your data store in any paradigm. I just decided it was such an obvious requirement that I didn’t feel the need to explicitly call it out.

But to really understand what Google Cloud means when it says data lake a picture is needed, as it’s difficult to see how the moving parts fit together.

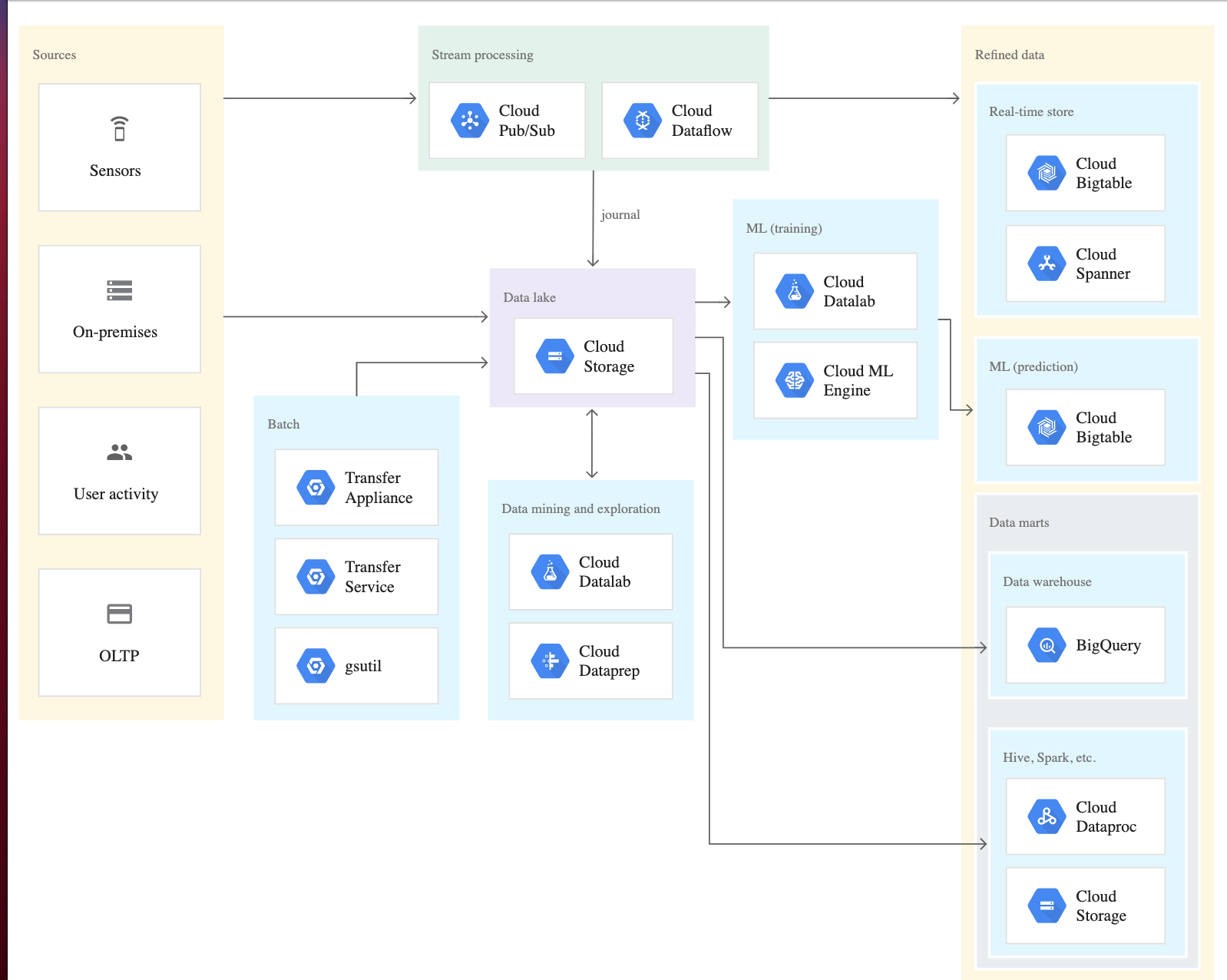

The diagram above is an example which shows how flexible a system you can build, depending on the tools you wish to use and your use case.

The diagram is borrowed from the article “Cloud Storage as a data lake”, which talks through quite nicely how you can build a data lake on Google Cloud using some of the products listed on the data lake landing page. I’d also add Cloud Data Fusion to the diagram to manage the data pipeline.

That article kind of skips over metadata management, which I personally feel is key to making a data lake useful and not just a swamp of data you spend way too much time trying to get useful insights out of, so I would recommend reading “What is Data Catalog?” alongside that article.

When digging into Google Cloud’s data lake solution I am not confused by language such as that from the Wikipedia definition, which states “A data lake can include structured data from relational databases (rows and columns)”, as Google Cloud’s data lake definition does not imply that you can just take the database files as is, dump them in Cloud Storage and then use an analysis tool to query them.

Data lake house - (At this point I thought they were extracting the Michael!) I had no idea what this actually was so had no “personal definition” to start with.

A common pattern in the data ecosystem is to move data from a data lake to a more structured data warehouse. The Google Cloud data lake diagram above shows this. Data lake houses negate the need to move data from data lakes to data warehouses. In very simple terms, a data lake house makes it possible to use the data management features that are inherent in data warehousing on the raw data stored in a low-cost data lake. It seems to me that BigQuery using GCS as an external data store would meet this definition, as you can treat a BigQuery external table like you do a standard BigQuery table. There are limitations, as you would expect with external tables, but if you can accept those limitations and you use BigQuery with GCS, then which paradigm you decide to apply, data lake or data lake house, is kinda meaningless from a practical point of view.
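Here is a small sketch of the idea behind an external table: the raw files stay where they are, and a table definition (a schema plus a file pattern) is applied when you query. To be clear, this is not the BigQuery API. It is a standard-library stand-in in which a temp directory plays the part of the object store, and the `ExternalTable` class, file names and columns are all hypothetical.

```python
import csv
import tempfile
from pathlib import Path

# A temp directory stands in for the low-cost object store (assumption).
lake = Path(tempfile.mkdtemp(prefix="lakehouse_"))
(lake / "orders_2023_01.csv").write_text(
    "order_id,region,amount\n1,EMEA,120.5\n2,APAC,80\n", encoding="utf-8"
)
(lake / "orders_2023_02.csv").write_text(
    "order_id,region,amount\n3,EMEA,40\n", encoding="utf-8"
)


class ExternalTable:
    """Hypothetical table definition: a schema plus a file pattern, no data copied."""

    def __init__(self, root: Path, pattern: str, schema: dict):
        self.root = root
        self.pattern = pattern
        self.schema = schema  # column name -> type used to cast the raw text

    def rows(self):
        # Files are read at query time, so new files show up without a load step.
        for path in sorted(self.root.glob(self.pattern)):
            with path.open(newline="", encoding="utf-8") as fh:
                for raw in csv.DictReader(fh):
                    yield {col: cast(raw[col]) for col, cast in self.schema.items()}


orders = ExternalTable(
    lake, "orders_*.csv", {"order_id": int, "region": str, "amount": float}
)


def total_by_region(table: ExternalTable) -> dict:
    """A warehouse-style aggregate computed directly over the raw files."""
    totals = {}
    for row in table.rows():
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


before = total_by_region(orders)

# Dropping another raw file into the lake is enough; the table definition is unchanged.
(lake / "orders_2023_03.csv").write_text(
    "order_id,region,amount\n4,APAC,20\n", encoding="utf-8"
)
after = total_by_region(orders)
```

The trade-off is the one you would expect: nothing has to be copied into a warehouse, but every query pays the cost of reading and casting the raw files.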

There is no Wikipedia definition, but googling away you can find papers and references, and obviously, depending on what you read, there are plenty of articles that will push back on my less than enthusiastic appreciation of this particular paradigm.

Data mart - A more targeted version of a data warehouse that reflects the controls, access rights and processes specific to each business unit (e.g. sales, finance etc.) within an organisation. The Wikipedia definition lists a number of reasons to use a data mart. As it’s basically a mini data warehouse I don’t really have much to say here, as I feel the use case and paradigm it represents are actually clear.
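If it helps to picture the "mini data warehouse" idea, one very rough way to sketch it is as a per-business-unit slice of a shared warehouse table. The example below uses SQLite views for that; the table, view and metric names are invented, and SQLite has no access rights, so in a real system the controls and access rights would be layered on top of something like this.

```python
import sqlite3

# In-memory SQLite stands in for the shared warehouse (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE warehouse_facts (unit TEXT, metric TEXT, value REAL)")
conn.executemany(
    "INSERT INTO warehouse_facts VALUES (?, ?, ?)",
    [
        ("sales", "bookings", 1200.0),
        ("sales", "pipeline", 5400.0),
        ("finance", "payroll", 900.0),
    ],
)

# Each mart is a view that exposes only one business unit's slice of the data.
conn.execute(
    "CREATE VIEW sales_mart AS "
    "SELECT metric, value FROM warehouse_facts WHERE unit = 'sales'"
)
conn.execute(
    "CREATE VIEW finance_mart AS "
    "SELECT metric, value FROM warehouse_facts WHERE unit = 'finance'"
)

sales_metrics = sorted(row[0] for row in conn.execute("SELECT metric FROM sales_mart"))
finance_metrics = [row[0] for row in conn.execute("SELECT metric FROM finance_mart")]
```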

Data mesh - Meshes are everywhere and the data ecosystem didn’t want to miss that boat! I had a vague, ill-defined idea that it was based on the kind of mesh I more commonly dealt with in the k8s ecosystem.

I’m trying to deconstruct here, so here is a quick explanation of what a service mesh is in that ecosystem so you can understand where I was coming from.

A service mesh is a framework that enables managed, observable, and secure communication across your services. Service meshes harmonize and simplify the common concerns of running a service, such as monitoring, networking, and security, in a consistent manner.

So extrapolating to the data world I had in my head the concept of data pods with a common management layer that provides a way to discover and access the data in each pod and provides governance controls similar to a service mesh. Each data pod is the original data source, so there is no need to move data out of it or store it in a central store unless the use case dictates that it makes sense to do so, such as creating a data lake to provide training data. The data pods are distributed and you can tightly control authorisation and authentication to the data pods via the mesh framework.

Not sure if that was going to be even half way close but thought I’d share where I started when digging into this one.

I started with the original 2019 article on Martin Fowler’s site, How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh, for the definitive explanation, as this was the article where the concept was originally described. It is a good read, but to really get to a definitive definition the follow-up article, Data Mesh Principles and Logical Architecture, is the one, as it dives into the principles, which are defined as:

Domain-oriented decentralized data ownership and architecture - So that the ecosystem creating and consuming data can scale out as the number of sources of data, number of use cases, and diversity of access models to the data increases; simply increase the autonomous nodes on the mesh.

Data as a product - So that data users can easily discover, understand and securely use high quality data with a delightful experience; data that is distributed across many domains.

Self-serve data infrastructure as a platform - So that the domain teams can create and consume data products autonomously using the platform abstractions, hiding the complexity of building, executing and maintaining secure and interoperable data products.

Federated computational governance - So that data users can get value from aggregation and correlation of independent data products - the mesh is behaving as an ecosystem following global interoperability standards; standards that are baked computationally into the platform.

So what do the principles mean in practice:

Domain-oriented decentralized data ownership and architecture - For each data source, ownership, authorisation and access are managed by the custodians of the data source in question. For example, it may be a data source that has monitoring data that is owned by the operations team. If the security team wants access to this source then it’s up to the operations team to provide that access, and the converse applies if the security team wants to grant access to some of their data to the operations team. The data sources are not centralized in one location.

Data as a product - This maps well to service meshes as I typically see them in use. It is up to the data custodian to make their data discoverable, trusted, secure, self-describing and interoperable. Data is not centralized. Basically, have an API to your data and ensure the data sets that are needed by other teams are accessible.

Self-serve data infrastructure as a platform - This describes a unified way to manage the data pipeline technology stack and infrastructure in each domain. It requires a domain-agnostic infrastructure capability for setting up data pipeline engines, storage, and streaming infrastructure. The original 2019 article indicates that this can be owned by a single data infrastructure team. The list below shows just some of the key capabilities that the platform needs to supply, as listed in the original article:

- Scalable polyglot big data storage

- Encryption for data at rest and in motion

- Data product versioning

- Data product schema

- Data product de-identification

- Unified data access control and logging

- Data pipeline implementation and orchestration

- Data product discovery, catalog registration and publishing

- Data governance and standardization

- Data product lineage

- Data product monitoring/alerting/log

- Data product quality metrics (collection and sharing)

- In memory data caching

- Federated identity management

- Compute and data locality

Federated computational governance - This allows the independent data products to be viewed as one mega data pool adhering to a common governance model. The underlying data sources are the responsibility of the specific custodians (as defined by the _Domain-oriented decentralized data ownership and architecture_ principle) and it’s up to the custodian to implement the appropriate governance controls, such as incorporation into a data catalog, validating data quality, encryption, and implementation of authorization and authentication.

This approach means you can query data sources and do joins across disparate sources without having to understand the intricacies of each data source.
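To tie the four principles together, here is a toy sketch of how they might fit. It is my own illustration under stated assumptions, not the design from the articles and not any product’s API: the `DataProduct` and `Mesh` classes, the product names and the sample rows are all hypothetical. Each domain owns its data and decides who gets access, the mesh only provides discovery and a uniform access check, and a consumer joins two products without knowing how either is stored.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Set


@dataclass
class DataProduct:
    """Hypothetical data product: owned by a domain, self-describing, access-controlled."""
    name: str
    owner: str                          # the domain team that owns the data
    schema: Dict[str, str]              # column -> description, used for discovery
    read: Callable[[], Iterable[dict]]  # the data stays with the owner; this is its "API"
    allowed: Set[str] = field(default_factory=set)  # teams the owner has granted access to


class Mesh:
    """Shared platform: a catalog plus a uniform access check. It holds no data itself."""

    def __init__(self):
        self._catalog: Dict[str, DataProduct] = {}

    def publish(self, product: DataProduct) -> None:
        self._catalog[product.name] = product

    def discover(self) -> List[str]:
        return sorted(self._catalog)

    def query(self, name: str, requester: str) -> List[dict]:
        product = self._catalog[name]
        # The rule is global, but the grants themselves are set by each owning domain.
        if requester != product.owner and requester not in product.allowed:
            raise PermissionError(f"{requester} has no grant on {name}")
        return list(product.read())


mesh = Mesh()
mesh.publish(DataProduct(
    name="ops.host_metrics",
    owner="operations",
    schema={"host": "hostname", "cpu": "CPU utilisation, percent"},
    read=lambda: [{"host": "web-1", "cpu": 91}, {"host": "db-1", "cpu": 35}],
    allowed={"security"},  # operations has chosen to grant security access
))
mesh.publish(DataProduct(
    name="sec.alerts",
    owner="security",
    schema={"host": "hostname", "alert": "alert type"},
    read=lambda: [{"host": "web-1", "alert": "port-scan"}],
))


def alerts_with_load(requester: str) -> List[dict]:
    """Join two data products through the mesh without knowing how each is stored."""
    cpu = {r["host"]: r["cpu"] for r in mesh.query("ops.host_metrics", requester)}
    return [{**a, "cpu": cpu.get(a["host"])} for a in mesh.query("sec.alerts", requester)]


joined = alerts_with_load("security")
```

In this sketch the security team can run the join because operations granted it access, while the operations team asking for `sec.alerts` would get a `PermissionError` until security grants access in return.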

Digging through the Martin Fowler articles, the focus seems to be on data warehouses/marts and data lakes.

Even though data mesh as formally defined is relatively new, there have been products around that met the definition before it was formally defined. Years ago I recall using Pentaho to achieve many of the objectives that a data mesh addresses (I was pleasantly surprised to see Pentaho was still around).

As you would anticipate, Google Cloud has a product to help you build your data mesh. The blog post here describes this so I won’t go into too much detail, as this section of this post is already way too long!

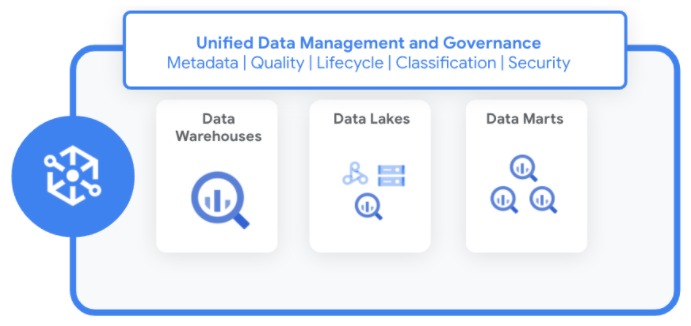

The diagram above gives a high-level view of what Dataplex is.

Dataplex itself is not called a data mesh though; it’s referred to as a data fabric that you can use to build a data mesh. I guess I need to dig into it to see what other uses there are for Dataplex but that’s for another time.

So it seems my conceptualization of what a data mesh is wasn’t really that far off after all. Who knew using a sensible name for something would allow people to figure out what it was without having to dig into pages and pages of explanatory articles on the theory of it :-) I did enjoy reading the theory though, but sometimes you just want to know whether a thing is right for your use case.

Now I have a proper personal definition of what a data mesh is, based on actually digging into what it is rather than an ill-defined idea based on its name: a unified platform to host and expose disparate and distributed data sources which are under separate domain authority while adhering to a high-level governance model. The mesh platform allows cross-domain access to the data sources in a consistent manner.

It definitely doesn’t sound like it’s for the faint-hearted but it should stop the proliferation of data silos!

I hope my view from the outside has helped demystify the jargon so when your data colleagues talk about their paradigms you can join in the conversation too!

For those hoping for a flowchart, I am afraid I am going to disappoint for now. I may come back at a later date and add one; we’ll see.

Thanks to him indoors for his insightful comments which helped me formulate my thoughts on this.