GAI Is Going Well part deux

It’s been a never ending series of reports about the on going adverse outcomes related to the use of GAI whether deliberate attacks or just unfortunate side effects. Since I wrote GAI Is Going Well I’ve continued to indulge in my hobby of collecting articles related to the adverse effects of working with LLMs/GAI ( the distinction between the two is becoming more distinct so I am resorting to writing it like that now ) based applications .

I categorise my list into 4 buckets

- Adverse effects that result from deliberate attacks or just unfortunate outcomes

- Regulating the use of GAI and advisories related to AI

- Research articles mostly on how to jailbreak & craft dubious prompts. This bucket has the adverse effect of unfortunately acting as a blueprint for attacks as not all of the articles are published after responsible disclose so that the issues can be fixed ( if they even can in some cases) before publication.

- Mitigations & tooling

I have already written about bucket 1 and that bucket keeps growing ☹️ but the research article bucket is also continually growing at at a ridiculous pace too. I felt before I got too overwhelmed by the sheer volume of articles & papers I’d better write the next post in my GAI Is Going Well series . This time I discuss research articles that are related to LLM/GAI cyber security.

This post would be way too long if I listed all the papers that have caught my eye in this space so I’m just going to list some of them under a few categories. This is just to provide you the reader with an insight into the research in this space .

I keep my list of articles on GitHub . The adverse effects list isn’t as extensive as that recorded in places like https://incidentdatabase.ai/ and never will be. I try to update it every month or so with articles that have caught my eye.

Overview papers

One paper I found as a good primer when I started keeping track was [2303.12132] Fundamentals of Generative Large Language Models and Perspectives in Cyber-Defense . This is a report by the research arm of the Swiss DoD on the impact LLM could have on cyber-securty. It was published a year ago in March 2023 but it’s as relevant then as it is today . With the advent of multi -modal models though I figure a follow up would be great.

The best overview paper to date imho is [2307.10169] Challenges and Applications of Large Language Models . If you had to choose one paper to get a grasp of the scale of the issue I’d recommend reading this one. It’s 49 pages (75 with references)

[2403.08701] Review of Generative AI Methods in Cybersecurity discusses both offensive and defensive strategies. It acknowledges that it’s a double-edged sword stating " Although GenAI has the potential to revolutionise cybersecurity processes by automating defences, enhancing threat intelligence, and improving cybersecurity protocols, it also opens up new avenues for highly skilled cyberattacks" so its not all smelling of roses

[2402.14020] Coercing LLMs to do and reveal (almost) anything is one of the newer overview type papers, it has an accompanying GitHub repo. I include it as I am thinking that the equivalent of the many tools such as nmap and nessus are going to have to be part of the tools that any self respecting LLM security practitioner will get used to using. I will get round to writing a post on the tools and mitigations to complete this series of posts.

Papers on prompt injection & Jailbreaking attack

If you want to know about prompt injection I’ll just point you at Simon Willison: Prompt injection Simon coined the term . I’d also suggest you look at his explanation at the difference between prompt injection & jail breaking they are different things.

There are a ton of articles on Jailbreaking tl;dr forcing outcomes from using LLMs that ignore pre-coded rules . [2307.15043] Universal and Transferable Adversarial Attacks on Aligned Language Models covers jail breaking models that have had alignment training ( fine tuned to prevent harmful or objectionable output) . tl;dr aligned models may stop the average users but with enough determination they don’t pose much of an obstacle if you have some time & the funds . However it seems that newer models “do seem to evince substantially lower attack success rates” . So the lesson here is keep things up to date so in this case keep the foundational model you use up to date . Also use filters to strip out potential dodgy requests being passed back to the model .

[2402.15570] Fast Adversarial Attacks on Language Models In One GPU Minute describes a gradient-free targeted attack that can jailbreak aligned LMs with high attack success rates within one minute. Scroll past the references to see the examples .

This article Jailbroken AI Chatbots Can Jailbreak Other Chatbots | Scientific American talks about the paper [2311.03348] Scalable and Transferable Black-Box Jailbreaks for Language Models via Persona Modulation tl;dr tell the target LLM it’s a research assistant or some other suitable persona then get it to produce output to do bad things & automate using another LLM .

[2311.17035] Scalable Extraction of Training Data from (Production) Language Models describes an attack that extracts training data from LLMs by using random-token-prompting attacks. It’s a well written paper and if you scroll past the references you can see example outputs from the attacks. This was responsibly disclosed .

I had to include this ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs . It describes how to use ASCII art to circumvent alignment .It has been tested against on five LLMs. Evaluating against multimodal models seems to be next up .

Revealing what’s under the covers or in other words obtaining information from the layers involved with making a model accessible . [2403.06634] Stealing Part of a Production Language Model discusses how to extract details about closed models via their API end points . It discusses extracting the embedding projection layer of a transformer language model. Layers that are accessible via the api end point . This was responsibly disclosed and mitigations have been put in place by both model providers discussed.It would now cost way more than what it cost the authors to do for the original attacks. It’s a lesson that should be heeded if you are going to make your own models available via a public api end point.

Papers on attacks that sound similar to traditional threats

[2403.02817] Here Comes The AI Worm: Unleashing Zero-click Worms that Target GenAI-Powered Applications discusses how a GenAI worm is replicated by using prompt injection techniques and propagation achieved by exploiting the application layer. It introduces the concept of adversarial self-replicating prompts which are prompts that triggers the GenAI model to output the prompt (so it will be replicated next time as well) and perform a malicious activity. It demonstrates how to implement it as a RAG-based GenAI worm. It’s semantics whether its intended behaviour or not the issue is it can lead to adverse outcomes so understanding this threat vector is important as I feel like the authors of the paper that this may well be exploitable at some point in the future.

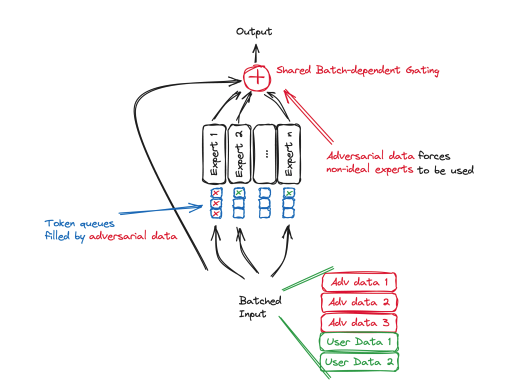

Mixture of experts is becoming a popular architectural approach however [2402.05526] Buffer Overflow in Mixture of Experts is potentially a viable attack vector

The image below from the paper illustrates the attack vector

The attack relies on MoE routing that uses finite sized buffer queues for each individual expert. The adversary pushes their data into the shared batch, that already contains user data. As tokens get distributed across different experts, adversarial data fills the expert buffers that would be preferred by the user, dropping or routing their data to experts that produce suboptimal outputs.

The paper describes a Denial of expert attack This made me chuckle ) and ist repsonsibly disclosed/ discussed as it includes mitigations

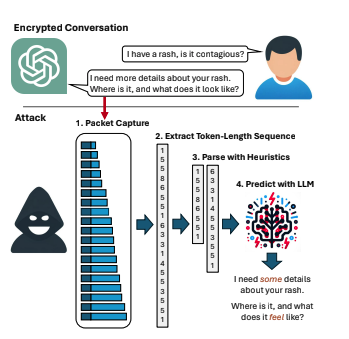

A great example of responsible disclosure is What Was Your Prompt? A Remote Keylogging Attack on AI Assistants which describes a side channel attack . In this attack as illustrated in the image below taken from the paper a chat session is intercepted and the packet headers used to figure out the length of each token , the tokens are extracted and reconstructed in sequence using a LLM . Crucially the chat session has to be in streaming mode as the tokens are sent sequentially

This was responsibly disclosed and cloudflare have an excellent write up of both the attack and the mitigation here: Mitigating a token-length side-channel attack in our AI products I love that it uses wireshark as part of the write up.

[2402.06664] LLM Agents can Autonomously Hack Websites I squeezed this one in as automating attacks is a thing and using GAI/LLMs to automate attacks is an obvious way to enable adverse actors

And so on

This [2402.14589] Avoiding an AI-imposed Taylor’s Version of all music history just made me laugh as it is like reading a black mirror script . I love the inspiration. Go read it, as it is a short paper and will bring a smile or maybe a slightly horrified grimace to your face.

The attack vectors are constantly evolving and with prompt injection & jailbreaking being relatively easy to do, protecting your LLMOps pipeline and implementing mitigation techniques is important. I am an advocate for open research but equally I am well aware that everyone can read the same articles I do and not all of them are good guys ( or gals)