A brief tour of policy auditing & enforcement options for GKE

Admission controllers

When enforcing policies in K8s you use admission controllers. (I am assuming that you are running an up-to-date version of K8s, and when I say K8s I will specifically be talking about GKE, but you knew that 😀.) This shouldn’t be optional, hence “use” with no qualifier! Admission controllers essentially provide a way to govern how the cluster is used by intercepting calls to the K8s API prior to persistence of the object, but after the request is authenticated and authorized. You typically have a number of admission controllers configured. This post from the Kubernetes blog is excellent, and I really have nothing to add as both the blog and the docs explain the concept well imho.
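To make the interception step a little more concrete, here is a minimal sketch of the decision logic a validating admission webhook might run. It assumes the `admission.k8s.io/v1` AdmissionReview request/response shape; the rule itself (every Pod must carry an `owner` label), the `review` function and the sample UID are hypothetical and only there for illustration. A real webhook would also need an HTTPS server and a ValidatingWebhookConfiguration, which are left out.

```python
import json


def review(admission_review: dict) -> dict:
    """Build an AdmissionReview response for an incoming request.

    Hypothetical rule for illustration: reject any object without an 'owner' label.
    """
    request = admission_review["request"]
    labels = request["object"].get("metadata", {}).get("labels") or {}
    allowed = "owner" in labels

    # The response must echo the request uid so the API server can match it up.
    response = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {
            "code": 403,
            "message": "pods must carry an 'owner' label",
        }
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


# A trimmed-down request of the kind the API server sends after
# authentication and authorization, but before the object is persisted.
sample_request = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "hypothetical-uid-123",
        "operation": "CREATE",
        "object": {
            "kind": "Pod",
            "metadata": {"name": "demo", "labels": {"app": "web"}},
        },
    },
}

print(json.dumps(review(sample_request), indent=2))
```

The sample Pod has no `owner` label, so the sketch denies it; adding the label flips `allowed` to true.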

Pod security policies

I’ll start with Pod security policies as they are available out of the box. Pod security policy admission control is an admission controller that, although optional, you should treat as a must-do when setting up your K8s clusters! I am being particularly opinionated about that, but it really should be mandatory!

Pod security policies provide you with the ability to define a set of sensible default settings for your Pods, such as not running as root. The GKE doc on Using Pod Security policies has a good explanation. This restricted-psp.yaml is a good starting policy.
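As a rough idea of what such defaults look like, the sketch below builds a restrictive policy as a Python dict and prints it as JSON, which kubectl accepts just like YAML. It is not the restricted-psp.yaml referred to above; the policy name, the chosen volume types and the `policy/v1beta1` API version are my assumptions and should be checked against the K8s version you run.

```python
import json


def restricted_psp(name: str = "restricted-sketch") -> dict:
    """Return a PodSecurityPolicy manifest with conservative defaults.

    The name and the exact set of allowed volumes are hypothetical choices.
    """
    return {
        "apiVersion": "policy/v1beta1",  # assumed API version
        "kind": "PodSecurityPolicy",
        "metadata": {"name": name},
        "spec": {
            "privileged": False,                # no privileged containers
            "allowPrivilegeEscalation": False,  # no setuid-style escalation
            "requiredDropCapabilities": ["ALL"],
            "hostNetwork": False,
            "hostIPC": False,
            "hostPID": False,
            "runAsUser": {"rule": "MustRunAsNonRoot"},  # the "not root" default
            "seLinux": {"rule": "RunAsAny"},
            "supplementalGroups": {"rule": "RunAsAny"},
            "fsGroup": {"rule": "RunAsAny"},
            "volumes": ["configMap", "secret", "emptyDir", "persistentVolumeClaim"],
        },
    }


print(json.dumps(restricted_psp(), indent=2))
```

Remember that a policy only takes effect once the admission controller is enabled and the relevant service accounts are authorized to use it.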

Network Policies

You will almost certainly want to control what traffic can flow between your pods and services by defining network policies. This also helps to limit the blast radius in case a pod or service is compromised, as traffic doesn’t get to services and pods it isn’t allowed to communicate with. You start your cluster with the --enable-network-policy flag to enable network policies. Then you need to configure your policies.

Note: if using Pod security policies and network policies together then make sure you follow this [guidance](https://cloud.google.com/kubernetes-engine/docs/how-to/hardening-your-cluster#using_networkpolicy_and_podsecuritypolicy_together).
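For the "configure your policies" step, a common starting point is to deny all ingress in a namespace and then open up only the paths you need. The sketch below generates both manifests as JSON, assuming the `networking.k8s.io/v1` NetworkPolicy API; the namespace, app labels and port are hypothetical placeholders.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress at all."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods; no ingress rules means deny.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace: str, app: str, client_app: str, port: int) -> dict:
    """Allow pods labelled app=client_app to reach pods labelled app=app on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{client_app}-to-{app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace and labels, bundled into a List so one apply covers both.
manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        default_deny_ingress("shop"),
        allow_ingress("shop", app="db", client_app="api", port=5432),
    ],
}

print(json.dumps(manifests, indent=2))
```

Saved as, say, `policies.py`, the output can be piped straight into `kubectl apply -f -`. With these two policies only the `api` pods can reach `db`, which is exactly the blast radius limiting described above.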

OPA

Open Policy Agent (OPA). So what is OPA, very briefly? Taking the elevator pitch from the landing page, it’s a “general-purpose policy engine with uses ranging from authorization and admission control to data filtering”. You install it as an admission controller!

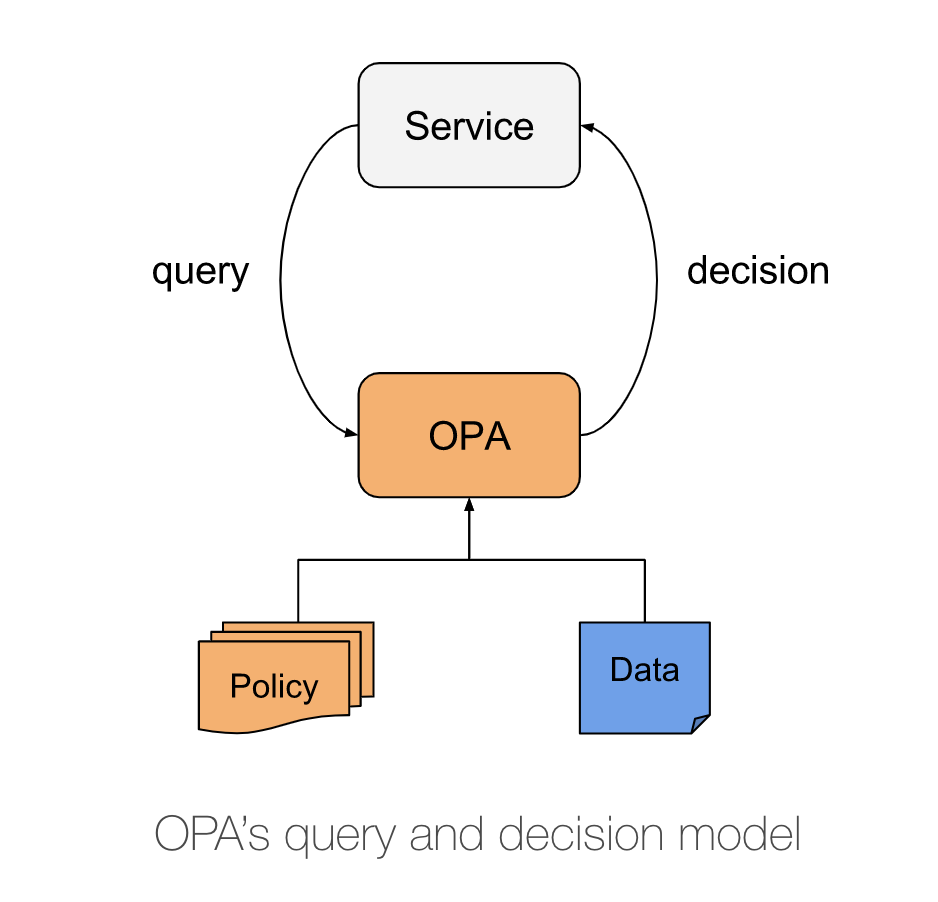

You integrate OPA within your K8s cluster as a sidecar container admission controller. The diagram above shows the flow of interacting with the OPA policy engine. Basically, “policy” queries are offloaded from your service to the OPA engine.

Policies are documents written in Rego (I know, yet another DSL to learn). If you are going to use OPA directly then there is an OPA extension for my favourite editor, Visual Studio Code, which you can find here.

Deploying OPA into your cluster is the usual YAML wrangling. Once installed you need to create policies, which you store as a ConfigMap or Secret. I would anticipate that in production they will in many cases be stored as Secrets if the policy is considered sensitive.

TIP: The “kicking the tyres” walkthrough is actually worth reading before looking at the too-much-YAML deployment guide.

Gatekeeper

Gatekeeper uses the OPA framework, but crucially it uses CRDs (yep, yet another acronym) to create and extend the policy library. CRDs are K8s custom resource definitions. Basically they extend the K8s API, and the beauty is you can use good ole kubectl to manage them. CRDs are ideal if you want to manage the state of something in a declarative manner, similar to how Deployment Manager or Terraform manage infrastructure. CRDs by themselves just store & retrieve structured data, so you need a controller to achieve a declarative API. In a nutshell, you define the desired end state and the controller maintains that state. So (admittedly cutting out a lot of the path to get here - this post is already way too long!) this means you can treat your policies like you should be treating your infrastructure: by using an infrastructure as code approach, with each definition of state stored in a repo and version controlled.

Anyway, back to Gatekeeper, which is a validating webhook that enforces CRD-based policies executed by Open Policy Agent. There is no mutating functionality as yet though.

(As an aside, at this point I went off to grok the key difference between controllers and operators. This is quite a nice explanation.)

To use Gatekeeper, install it as described in the repo. Once installed you need to define a constraint template, which is a YAML file that contains the Rego that enforces the constraint and the schema of the constraint. You use kubectl to deploy it. To actually use the constraint template you create another YAML file, the constraint itself, which essentially holds the values that the constraint template needs to enforce. The repo has a nice simple example showing how the constraint is linked to the constraint template.
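The link between the two files is easier to see side by side. The sketch below is modelled on the required-labels idea and builds both objects as Python dicts, printed as JSON that kubectl accepts. The `v1beta1` API versions, the `K8sRequiredLabels` kind, the constraint name and the Rego body are assumptions on my part (the Rego is not executed here), so check them against the Gatekeeper version you install.

```python
import json

# Rego carried inside the template; an untested sketch of a "required labels" rule.
REGO = """
package k8srequiredlabels

violation[{"msg": msg}] {
  provided := {label | input.review.object.metadata.labels[label]}
  required := {label | label := input.parameters.labels[_]}
  missing := required - provided
  count(missing) > 0
  msg := sprintf("you must provide labels: %v", [missing])
}
"""


def constraint_template(kind: str) -> dict:
    """The template: holds the Rego plus the schema of the parameters."""
    return {
        "apiVersion": "templates.gatekeeper.sh/v1beta1",  # assumed version
        "kind": "ConstraintTemplate",
        # By convention the template name is the lowercased constraint kind.
        "metadata": {"name": kind.lower()},
        "spec": {
            "crd": {
                "spec": {
                    "names": {"kind": kind},
                    "validation": {
                        "openAPIV3Schema": {
                            "type": "object",
                            "properties": {
                                "labels": {"type": "array", "items": {"type": "string"}}
                            },
                        }
                    },
                }
            },
            "targets": [{"target": "admission.k8s.gatekeeper.sh", "rego": REGO}],
        },
    }


def constraint(kind: str, name: str, labels: list) -> dict:
    """The constraint: an instance of the template's kind carrying concrete values."""
    return {
        "apiVersion": "constraints.gatekeeper.sh/v1beta1",  # assumed version
        "kind": kind,  # this is what ties the constraint to its template
        "metadata": {"name": name},
        "spec": {
            "match": {"kinds": [{"apiGroups": [""], "kinds": ["Namespace"]}]},
            "parameters": {"labels": labels},
        },
    }


template = constraint_template("K8sRequiredLabels")
must_have_owner = constraint("K8sRequiredLabels", "ns-must-have-owner", ["owner"])

print(json.dumps(template, indent=2))
print(json.dumps(must_have_owner, indent=2))
```

The template has to be applied first: it creates the `K8sRequiredLabels` CRD, and only then will the API server accept the constraint that uses that kind.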

The audit functionality periodically checks the cluster against the enforced policies, storing the results in the status field of the failed constraint.

Falco

Falco is designed to detect anomalous activity in your applications. It monitors and detects container, application, host, and network activity, but in the context of this post I am only interested in the k8s.audit events. These events record requests and responses to kube-apiserver. Falco can detect a variety of events, such as the creation & destruction of pods & services and the creating/updating/removing of config maps or secrets.

You install it using the DaemonSet manifests or, preferably, Helm. Deploying on GKE requires eBPF (extended Berkeley Packet Filter) to supply the stream of system calls to the Falco engine. You also need to configure your cluster for audit logging. It was, I admit, slightly confusing trying to figure out exactly what you have to do to configure it. To save you time figuring out how you want to configure your setup, this post, which walks through using Falco on GKE with Pub/Sub and Cloud Functions, is quite nice. The rules are expressed as YAML with no Rego (yeah!) and stored as a ConfigMap. The default k8s_audit_rules file is quite straightforward. There is a set of playbooks that you can use with Cloud Functions that are pretty nice.

So what did I think?

Guess I should summarise my thoughts after this tour. You should use Pod security and network policies regardless, and as with all things security, defence in depth is the correct thing to do. So complementing Pod security and network policies with another policy enforcement control is a must-do, and being able to detect potential issues is also a must. I liked Falco a lot but it wasn’t that simple.

I really, really like Falco. Defining the rules is the easiest to grok from a standing start imho (when I first found out about Falco I took it for a spin and it was a delight). It has a great list of policies supplied by default and it integrates with the CSCC. Oh, and there is that great post walking you through hooking it up with Cloud Functions & Pub/Sub. It is still my favourite, but it is primarily an audit tool, so in the end I had to invite Gatekeeper to the party. Why Gatekeeper though, when Rego is frankly a pain? Well, it enforces, and CRDs lend themselves well to an IaC approach. The consistency that using CRDs gives you was really the tipping point, despite the ugly Rego. So that is reluctantly where I’d point folks looking for a consistent way to work with K8s. Yes, you will have to get used to Rego, but after having stared at a few templates while writing this post I don’t think the learning curve will be a big one. It’s just kinda ugly, and everyone coming to it new will spend a few iterations swearing too much as they make some mistakes with the syntax!

I am hoping Gatekeeper will get better fast. Falco today is the more finished. The learning curve with Falco is a lot lower, plus the collection of default policies is a good base as well as a good way to learn how to put together your own rules. If you think you will be okay with the operational overhead of managing both then use both Falco & Gatekeeper: Gatekeeper for enforcement, Falco for auditing. Gatekeeper can enforce and audit, but the audit features imho are just not as good as what Falco provides in terms of maturity and ease of use. If you want one tool to rule them all then go with Gatekeeper, if you are willing to go along for the ride as it becomes more feature complete.

Both Gatekeeper and Falco are CNCF projects, like K8s itself, so neither is a right or wrong choice.