Security in the Cloud when coming from an On-premises world

In this post on thinking about security when moving from an on-premises world to the cloud, I focus on Google Cloud, so some of the techniques I discuss may not map easily to your cloud of choice. That aside, the principles are much the same.

How is security in the cloud different?

When addressing security concerns on-premises, you are responsible for everything: the physical security of assets, the operating system, application servers, the applications, and managing user permissions.

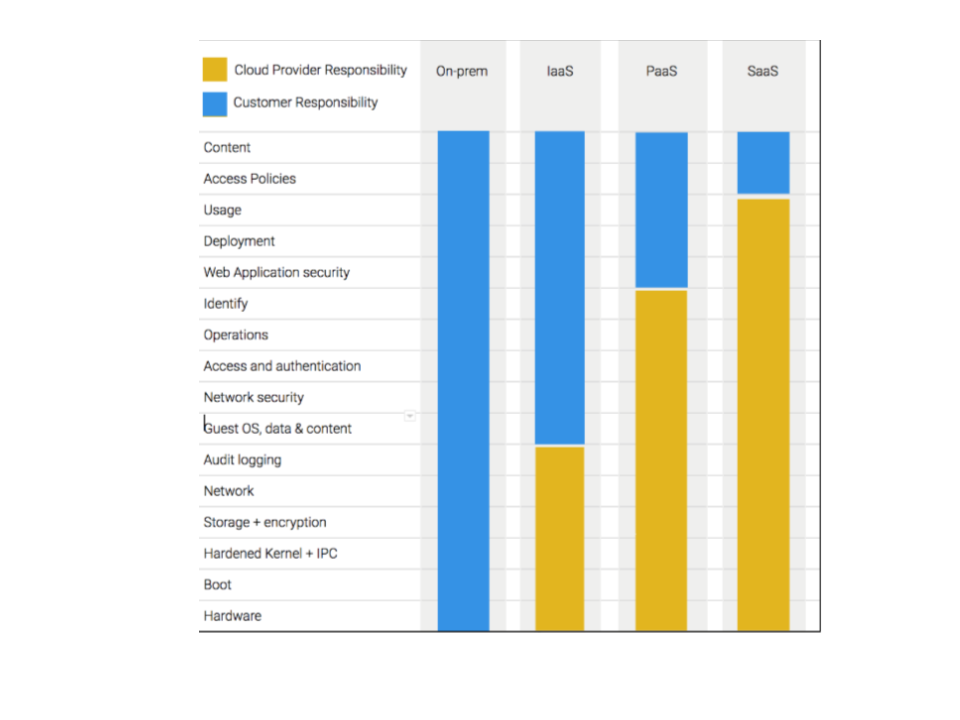

Google Cloud uses a shared security model: as the cloud service provider, Google Cloud is responsible for the platform. The diagram above illustrates the continuum of the shared responsibility model.

When using the cloud, you are likely to be using services that span the IaaS through to the SaaS model, and where your share of the responsibility ends depends on which model a given service follows.

Google Cloud describes its approach to securing Google Cloud in the security overview whitepaper, and the Google Infrastructure Security Design Overview whitepaper describes how its multi-layered, defense-in-depth approach applies to Google Cloud.

A defense-in-depth approach applies in the cloud just as it does on-premises. One evident difference is that cloud controls are designed for scale and, because they are built into the platform, are fully integrated. This does not, however, prevent you from building on the skills and expertise you already have. If you choose to, you can extend your on-premises controls to the cloud: Google Cloud has several security partners with solutions designed to work with Google Cloud, and you may already be using their on-premises versions.

A key differentiator between on-premises security and the cloud is scale and, in Google Cloud’s case, the application of lessons learned from running global services for millions of users.

By default, services on Google Cloud are exposed via publicly accessible endpoints, which tends to be the opposite of the on-premises approach. Google Cloud has default settings to help protect you from inadvertently opening access to unintended sources, and it provides a set of products to help you secure access points and implement controls that authorize who can access your resources.

Google Cloud also provides several private access options, so neither your on-premises hosts nor the VMs running in the cloud need to be exposed to the internet to reach certain Google Cloud APIs and services.

A characteristic of the cloud that you do not often see on-premises is elasticity: resources scaling out and in. This requires understanding how to monitor why those resources scaled up. Maybe a bit of sneaky bitcoin mining?

If resources have disappeared because of autoscaling actions, can you still get insights into them? Google Cloud’s Security Command Center helps you get insights into your resources, including the ephemeral resources that come and go as part of autoscaling actions. Implementing controls for resources that scale up and down, and knowing how to monitor them, is a skill your teams will need.

As on-premises, adhering to the principle of least privilege is highly recommended. Implementing break-glass accounts and/or granting sensitive elevated permissions only when needed is desirable. IAM is applied differently in Google Cloud, but the core principle of least privilege still applies. Understanding how to implement IAM, and the implications of a given configuration, is a core skill for whoever in your organization administers security controls.

Using the security controls of Google Cloud

As a Google Cloud customer, your concerns focus on your part of the shared security model and on a specific set of concerns that usually fit into one of the following categories:

- Managing users

- Managing end user devices and applications

- Granting permissions

- Protecting your environment from external threats

- Protecting your environment from malicious insiders

- Preventing data exfiltration

- Protecting & managing access to data appropriately

- Protecting your applications

- Knowing what is happening in your environment

Arguably there are other categories you may want to add, but for the purposes of this post we’ll stick to those listed. These categories are the same whether you are dealing with on-premises requirements or the cloud.

Managing Users

Centrally managing the users who can access your resources is best practice, whether on-premises or in the cloud. Cloud-based identity systems are increasingly the default option, because cloud providers have invested time and effort in scalable systems whose availability any self-managed system will struggle to match. The operational overhead of running the identity system becomes the cloud provider’s responsibility, leaving you to focus on managing your users.

Google Cloud uses Google Accounts for authentication and access management. As a prerequisite for being granted access to Google Cloud resources, employees must have a Google identity. These identities can be provided through one of the Cloud Identity editions. This does not mean you cannot use your existing identity system: you can synchronize users with Google Cloud Directory Sync (GCDS), a Google-provided connector tool that integrates with most enterprise LDAP directories. You can also use a third-party identity provider to allow the use of your corporate identity system.

The users accessing Google Cloud are typically developers, data scientists, and operations staff, not your whole company. You may host applications on Google Cloud that all your employees can access, but authenticating to those applications is distinct from authenticating directly against Google Cloud to access resources.

Managing end user devices and application access

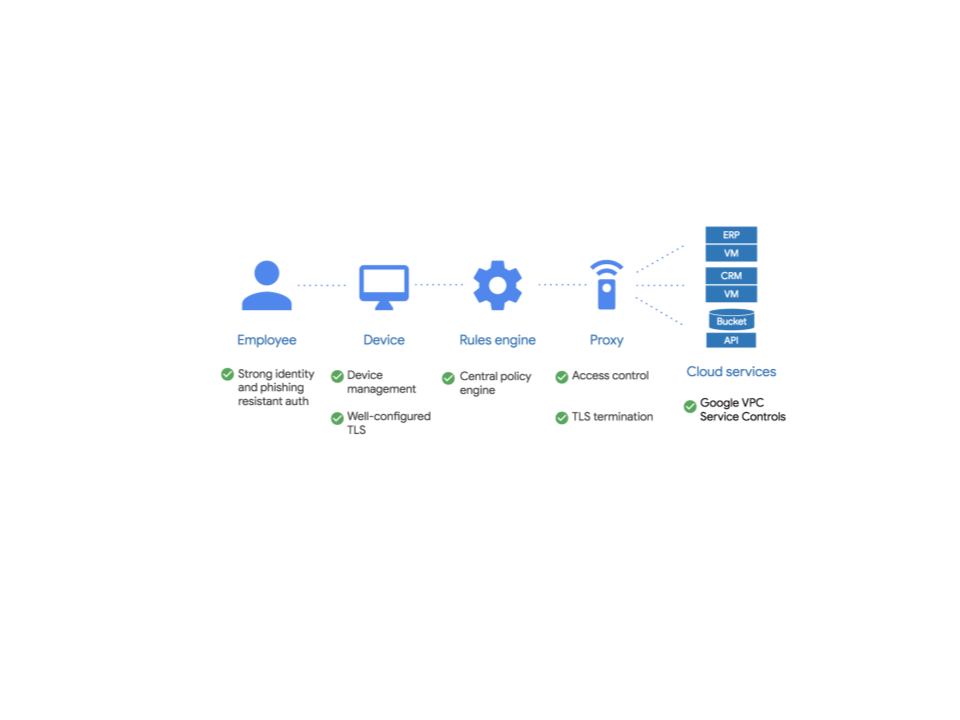

Enterprise customers commonly use a VPN to control how their end users access the corporate network. Google adopted an alternative approach, BeyondCorp, which allows every employee to work from untrusted networks. BeyondCorp is an enterprise security model that builds on six years of building zero-trust networks at Google, combined with best-of-breed ideas and practices from the community. By shifting access controls from the network perimeter to individual devices and users, BeyondCorp allows employees to work more securely from any location without a traditional VPN. The reasons this approach was adopted:

- OTP codes are required for access to VPNs, and are easily phished.

- Applying firewall rules and opening ports is not a scalable process.

- Mistakes will be made.

- Mobile devices do not work well with VPNs.

- Perimeter-based network security is like a hard shell with a soft inside: once an attacker is through the perimeter, there is little to stop them.

BeyondCorp combines device management with a proxy to address the problems with traditional ways of allowing remote access.

Google has also made the BeyondCorp approach available to everyone as a Google Cloud service, Identity-Aware Proxy (IAP). Combined with the device management capabilities of Cloud Identity or G Suite, IAP addresses the issues outlined with a VPN approach.

Granting Permissions

Whether you use a cloud-based or on-premises identity provider, applying the principle of least privilege is best practice: you grant users only the permissions they need to carry out their function or to access the data they need. This concept is integral to the way permissions are granted in Google Cloud.

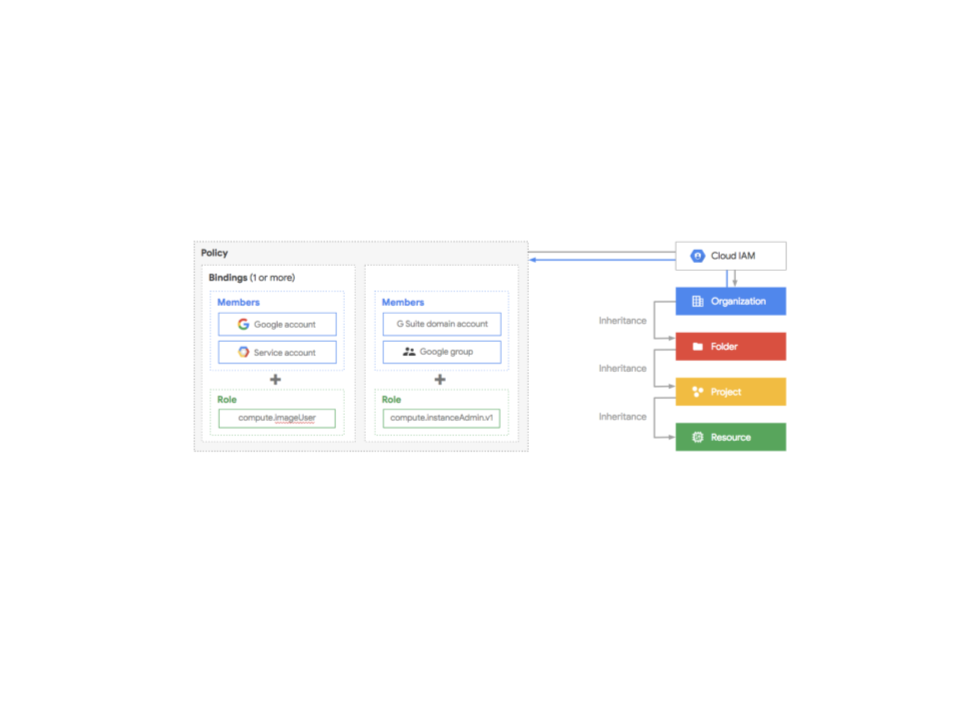

When it comes to what your users are or are not allowed to do with Google Cloud services, Cloud IAM ultimately dictates which permissions they are granted. Cloud IAM defines who (identity) has what access (role) to _which resource_. For example, granting users permission to access Cloud Storage buckets or launch an instance requires Cloud IAM to be configured to grant those permissions. Permissions are granted in the form of IAM roles (collections of permissions). You create a Cloud IAM policy, a collection of statements (bindings) defining who (identities) has what type of access (IAM roles), and that policy must be attached to a resource.
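To make the who/what/which-resource model concrete, here is a minimal Python sketch of how the bindings in a policy combine to grant a permission. The policy dictionary follows the `bindings`/`role`/`members` shape that Google Cloud IAM policies use; the role-to-permission table is a tiny, simplified excerpt, the member names are hypothetical, and real IAM also expands group membership, which this sketch does not.

```python
# Minimal model of a Cloud IAM allow policy check (illustrative only).
# The policy mirrors the JSON shape of an IAM policy: a list of bindings,
# each pairing one role with the members (identities) that hold it.

# Simplified excerpt of which permissions a role contains (assumption:
# real predefined roles can contain more permissions than listed here).
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
}

# Hypothetical policy attached to a Cloud Storage bucket.
bucket_policy = {
    "bindings": [
        {
            "role": "roles/storage.objectViewer",
            "members": ["user:alice@example.com", "group:data-team@example.com"],
        },
    ]
}


def has_permission(policy: dict, member: str, permission: str) -> bool:
    """Return True if any binding grants `member` a role containing `permission`.

    Group membership is NOT expanded here; real IAM resolves groups too.
    """
    for binding in policy.get("bindings", []):
        if member in binding.get("members", []):
            if permission in ROLE_PERMISSIONS.get(binding["role"], set()):
                return True
    return False


# Alice can read objects, but nothing in this policy lets her create VMs.
can_read = has_permission(bucket_policy, "user:alice@example.com", "storage.objects.get")
can_create_vm = has_permission(bucket_policy, "user:alice@example.com", "compute.instances.create")
```

Granting more access means adding a binding (or a member to an existing binding), which keeps every grant explicit and reviewable.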

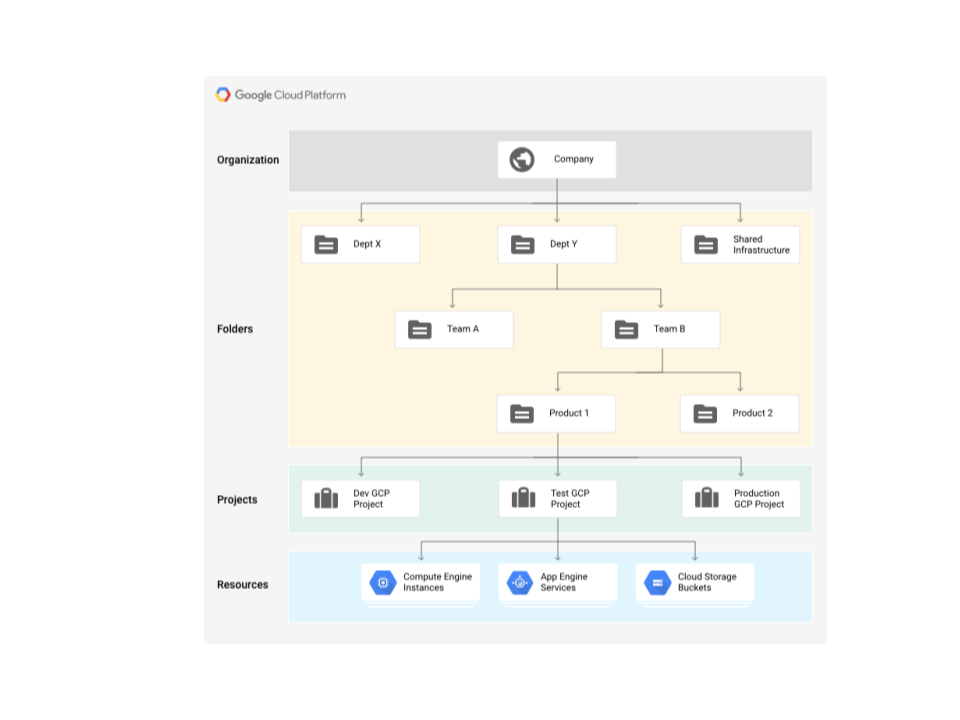

Resources are organized with Cloud Resource Manager, which lets you create a hierarchical representation of your Google Cloud organization. The diagram above shows one example of how you can structure your Google Cloud organization.

Cloud Resource Manager provides attach points and inheritance for access control (IAM policies) and organization policies: a policy set on the organization or on a folder is inherited by the folders and projects beneath it. The diagram illustrates this.
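The inheritance rule can be sketched in a few lines of Python: a resource’s effective access is the union of the bindings set on it and on every ancestor above it, so a role granted at the organization or folder level cannot be taken away lower down by an allow policy. The node names are hypothetical, the sketch ignores IAM deny policies and organization policy constraints, and it represents bindings simply as role-to-members sets.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Node:
    """A node in a (hypothetical) resource hierarchy: organization, folder or project."""
    name: str
    parent: Optional["Node"] = None
    # role -> members, a simplified view of the allow policy attached here
    bindings: Dict[str, Set[str]] = field(default_factory=dict)


def effective_bindings(node: Node) -> Dict[str, Set[str]]:
    """Union the bindings on `node` with those inherited from all of its ancestors."""
    result: Dict[str, Set[str]] = {}
    current: Optional[Node] = node
    while current is not None:
        for role, members in current.bindings.items():
            result.setdefault(role, set()).update(members)
        current = current.parent
    return result


org = Node("organizations/example", bindings={"roles/viewer": {"group:auditors@example.com"}})
prod = Node("folders/prod", parent=org,
            bindings={"roles/compute.networkAdmin": {"group:netops@example.com"}})
web = Node("projects/web-app", parent=prod,
           bindings={"roles/viewer": {"user:dev@example.com"}})

# The project inherits the org-level viewer grant and the folder-level network admin grant.
web_access = effective_bindings(web)
```

Inheritance flows downward only: the organization node does not pick up grants made on the folder or project.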

Protecting your environment

Whether on-premises or in the cloud, you want to protect your environment from uninvited guests, unauthorized insiders, exposure to vulnerabilities, and attacks from nefarious ne’er-do-wells.

In either setting, you need a combination of controls to address the problem. On-premises, you typically use a combination of products sourced from multiple vendors that you then need to manage; firewalls, load balancers, and switches are some of the tools you will be familiar with. On Google Cloud you also combine security controls and network controls as required, but unlike on-premises, the tools are integrated into the platform. You can also augment the platform tooling with partner solutions, many of which you may already use, letting you extend your familiar security tooling to help protect your Google Cloud environment.

Ultimately, a blended, defense-in-depth approach to controls is taken whether on-premises or on Google Cloud. The controls on Google Cloud are optimized for a cloud environment. Features that on the surface appear similar to what you are used to on-premises have differences you need to understand to ensure you configure them appropriately. To illustrate this same-but-different aspect, in this section we’ll look at the features unique to Google Cloud in services that feel familiar.

Subnets - As with an on-premises network, a VPC network can be subdivided into subnets (subnetworks), and you can treat a subnet as a traffic boundary. All of this sounds familiar, but it’s the differences that need to be understood. A subnet in Google Cloud can span multiple zones within a region, and you can expand a subnet’s IP range without reconfiguring your existing workloads.

Firewalls - With a traditional on-premises stateful firewall, the firewall sits within your network and all traffic between subnets or to the internet passes through it. Google Cloud firewalls are also stateful and, like traditional on-premises firewalls, provide the core capability of setting up allow/deny and ingress/egress rules. However, every VPC network functions as a distributed firewall. Firewall rules are defined at the network level, and connections are allowed or denied on a per-instance basis. You can think of Google Cloud firewall rules as existing not only between your instances and other networks, but also between individual instances within the same network.
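As a rough mental model of the distributed firewall, the following Python sketch evaluates ingress rules for one target instance: rules apply per instance (selected here by network tag), the matching rule with the lowest priority number wins, a deny beats an allow at equal priority, and if nothing matches, the implied deny-all-ingress rule applies. It is a simplified illustration with hypothetical rule names and addresses, not the Compute Engine implementation, and it ignores egress rules and service-account targets.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set, Tuple


@dataclass(frozen=True)
class FirewallRule:
    name: str
    priority: int                   # 0-65535; a lower number means higher precedence
    action: str                     # "allow" or "deny"
    source_ranges: Tuple[str, ...]  # source CIDR ranges
    protocol: str
    ports: FrozenSet[int]
    target_tags: Optional[FrozenSet[str]] = None  # None means "all instances in the network"


def ingress_allowed(rules, instance_tags: Set[str], src_ip: str, protocol: str, port: int) -> bool:
    """Decide whether one inbound connection reaches one specific instance."""
    src = ipaddress.ip_address(src_ip)
    matching = [
        r for r in rules
        if (r.target_tags is None or r.target_tags & instance_tags)
        and r.protocol == protocol
        and port in r.ports
        and any(src in ipaddress.ip_network(cidr) for cidr in r.source_ranges)
    ]
    if not matching:
        return False  # implied rule: deny all ingress
    # Lowest priority number wins; at equal priority, deny sorts before allow.
    winner = min(matching, key=lambda r: (r.priority, r.action != "deny"))
    return winner.action == "allow"


rules = [
    FirewallRule("allow-web", 1000, "allow", ("0.0.0.0/0",), "tcp",
                 frozenset({80, 443}), frozenset({"web"})),
    FirewallRule("allow-ssh-internal", 1000, "allow", ("10.0.0.0/24",), "tcp",
                 frozenset({22})),
    FirewallRule("deny-ssh-upper-half", 900, "deny", ("10.0.0.128/25",), "tcp",
                 frozenset({22})),
]
```

For example, `ingress_allowed(rules, set(), "10.0.0.200", "tcp", 22)` is denied even though both addresses could sit in the same VPC network, because rules are enforced at each instance rather than at a single choke point.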

Another unique feature of Google Cloud firewalls is the ability to set up source and target filtering for ingress rules by using service accounts. A service account represents an identity associated with a Compute Engine virtual machine (VM) instance. This means you can write rules where the source or destination is an identity rather than an IP address. Because permission to use a service account is itself granted through IAM, these rules are effectively access-controlled.
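The following Python sketch shows what an identity-based rule looks like. The dictionary uses the field names of the Compute Engine firewall resource (`sourceServiceAccounts`, `targetServiceAccounts`, `allowed`), while the project, network, and service account names are hypothetical; the matching function is a simplified illustration of the idea, not how Google Cloud evaluates rules.

```python
# Ingress rule expressed in terms of identities rather than IP addresses.
# Field names follow the Compute Engine firewall resource; values are hypothetical.
app_to_db = {
    "name": "allow-app-to-db",
    "network": "projects/my-project/global/networks/prod-vpc",
    "direction": "INGRESS",
    "priority": 1000,
    "sourceServiceAccounts": ["app-sa@my-project.iam.gserviceaccount.com"],
    "targetServiceAccounts": ["db-sa@my-project.iam.gserviceaccount.com"],
    "allowed": [{"IPProtocol": "tcp", "ports": ["5432"]}],
}


def port_matches(port_specs, port: int) -> bool:
    """Ports are strings such as "5432" or "8000-8080"; no list means all ports."""
    if not port_specs:
        return True
    for spec in port_specs:
        low, _, high = spec.partition("-")
        if int(low) <= port <= int(high or low):
            return True
    return False


def rule_allows(rule: dict, source_sa: str, target_sa: str, protocol: str, port: int) -> bool:
    """Simplified check: does this one ingress rule allow the connection?"""
    if rule.get("direction") != "INGRESS":
        return False
    if source_sa not in rule.get("sourceServiceAccounts", []):
        return False
    if target_sa not in rule.get("targetServiceAccounts", []):
        return False
    return any(
        entry["IPProtocol"] == protocol and port_matches(entry.get("ports"), port)
        for entry in rule.get("allowed", [])
    )
```

Because the match is on the VM’s service account, instances added by autoscaling that run as `app-sa` are covered without anyone editing IP ranges.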

In addition to those familiar-but-different services, Google Cloud has some unique services. A few key ones you are likely to deploy as part of your mitigation strategy, alongside the services above, are:

Shared VPC - Together with IAM, lets you centrally manage your VPC network, limiting who can make changes to network and security controls. It also lets you restrict which projects can use which subnets.

Private access - Enables virtual machine (VM) instances on a subnet to reach Google APIs and services using an internal IP address rather than an external IP address.

Organization policy - Enforcement controls for organization-wide standardization, with inheritance for easy scalability across the organization. For example, you can implement an organization policy that helps prevent anyone outside your organization from accessing your Google Cloud resources.
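The idea behind that example policy (domain restricted sharing, the `iam.allowedPolicyMemberDomains` constraint) can be sketched as a check that flags IAM bindings for identities outside your organization. Note the simplifications: the real constraint is configured with Cloud Identity customer IDs rather than domain names and is enforced by Google Cloud when a policy is set; the domain allowlist, function name, and member names here are hypothetical, and service account members are left out of scope.

```python
# Hypothetical allowlist; the real constraint uses Cloud Identity customer IDs.
ALLOWED_DOMAINS = {"example.com"}


def outside_members(policy: dict, allowed_domains=ALLOWED_DOMAINS) -> list:
    """Return members of an IAM policy that fall outside the allowed domains."""
    flagged = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            kind, _, identity = member.partition(":")
            if kind in ("allUsers", "allAuthenticatedUsers"):
                flagged.append(member)  # public access is never "inside" the organization
            elif kind == "domain":
                if identity not in allowed_domains:
                    flagged.append(member)
            elif kind in ("user", "group"):
                if identity.rsplit("@", 1)[-1] not in allowed_domains:
                    flagged.append(member)
            # serviceAccount: and other member types are out of scope for this sketch
    return flagged


proposed = {
    "bindings": [
        {"role": "roles/storage.objectViewer",
         "members": ["user:alice@example.com", "user:eve@gmail.com", "allUsers"]},
    ]
}
```

Here `outside_members(proposed)` flags the external Gmail user and the public `allUsers` grant, the two members an organization-wide restriction is meant to keep out.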

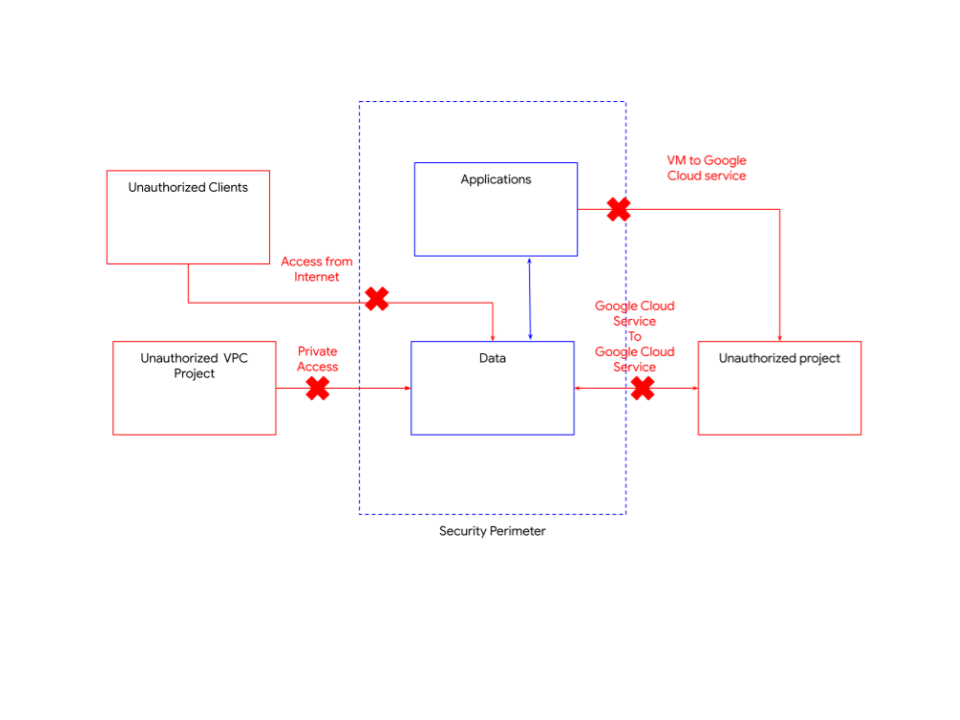

Security zones - Perimeter security for API-based Google Cloud services such as Cloud Storage and BigQuery, provided by VPC Service Controls. Security zones extend a security perimeter across hybrid cloud environments and let you enforce context-aware access. The diagram above illustrates how this perimeter works. It is another layer that works alongside other security controls such as IAM and various network controls.

Employing security zones as part of your defense-in-depth approach helps protect against malicious insiders. Combined with Private Google Access, they add a barrier beyond authorization checks: if users are not accessing from an authorized part of your network, they cannot access the projects in the protected zone, even with valid credentials. Security zones also help prevent malicious insiders from copying data to unauthorized projects.
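A simplified Python model of that perimeter logic: a request for data in a protected project must come from inside the perimeter or satisfy an access level (modeled here as a trusted IP range), IAM must still allow it, and callers inside the perimeter cannot write to projects outside it. The project names and IP range are hypothetical, and real VPC Service Controls evaluate far richer context (access levels, ingress and egress rules, per-service restrictions) than this sketch.

```python
import ipaddress
from typing import Optional

# Hypothetical perimeter: protected projects plus a simplified "access level"
# that admits requests from a trusted corporate IP range.
PERIMETER = {
    "projects": {"projects/analytics", "projects/raw-data"},
    "trusted_ranges": [ipaddress.ip_network("198.51.100.0/24")],
}


def request_allowed(perimeter: dict, caller_project: Optional[str], caller_ip: str,
                    target_project: str, iam_allows: bool) -> bool:
    """Both IAM and the perimeter must allow a request; either one can block it."""
    if not iam_allows:
        return False
    caller_inside = caller_project in perimeter["projects"]
    target_inside = target_project in perimeter["projects"]
    if target_inside:
        # Ingress: be inside the perimeter, or meet the access level.
        ip = ipaddress.ip_address(caller_ip)
        return caller_inside or any(ip in net for net in perimeter["trusted_ranges"])
    # Egress: workloads inside the perimeter may not send data to outside projects.
    return not caller_inside
```

A stolen but valid credential used from an untrusted address (`request_allowed(PERIMETER, None, "203.0.113.9", "projects/raw-data", True)`) is rejected, as is an insider copying data to a project outside the perimeter.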

Data management

Managing data inevitably involves being concerned with:

- Classification of data

- Who should have access to that data

- How that data should be stored

- How that data should be accessed

- Monitoring who accessed your data, and when

- Protecting data streams and data at rest

Addressing these concerns typically requires implementing security controls that:

- Manage who has access to what

- Prevent unauthorized access

- Tokenize and de-identify data to help meet PII requirements

- Encrypt data

- Audit and log access

- Enforce data access paths

On-premises, this is achieved by using subnets and firewalls to enforce traffic-based boundaries, implementing appropriate encryption and key management services, enforcing role-based access controls, auditing access, and, if PII is involved, implementing appropriate de-identification and tokenization methods. Implementing these controls on-premises involves combining various third-party products, and there is often an operational overhead in getting them all to work well together. Google Cloud addresses the same data management concerns with a set of tools that provide the same functionality but are integrated with the platform, giving a holistic approach. You can augment the platform tooling with many solutions you may already be using on-premises.

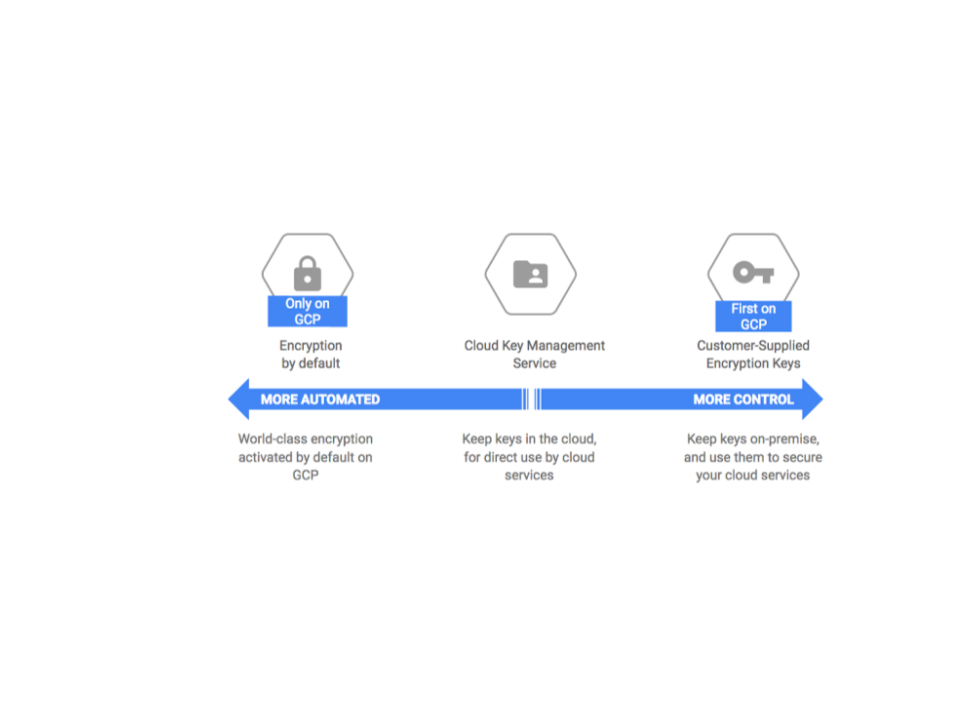

When it comes to encryption at rest, Google Cloud offers a continuum of approaches. The encryption strategy you adopt depends on whether you want to store keys in the cloud or on-premises, which is dictated by your use case and the nature of your data (for example, is it financial, personal health, private individual, military, government, confidential, or sensitive data?). You may also need to comply with regulations that dictate how you manage encryption. Do you need to manage, on-premises, the keys that decrypt your data? If so, you would use customer-supplied encryption keys (CSEK) or Cloud External Key Manager (Cloud EKM).

In addition, Google Cloud offers Cloud HSM, a managed, cloud-hosted hardware security module (HSM) service, completing the variety of ways you can meet your key management and encryption needs.
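With customer-supplied encryption keys for Cloud Storage, you send your own AES-256 key with each request, together with the SHA-256 hash of that key, both base64-encoded, in the `x-goog-encryption-algorithm`, `x-goog-encryption-key`, and `x-goog-encryption-key-sha256` headers. The Python sketch below only builds those headers and does not make a request; how you generate, store, and rotate the key on-premises is up to your own key management process. Cloud Storage does not permanently store CSEKs, so losing a key means losing access to the objects encrypted with it.

```python
import base64
import hashlib
import os


def csek_headers(key: bytes) -> dict:
    """Build the Cloud Storage request headers for a customer-supplied AES-256 key."""
    if len(key) != 32:
        raise ValueError("a CSEK must be a 256-bit (32-byte) AES key")
    return {
        "x-goog-encryption-algorithm": "AES256",
        "x-goog-encryption-key": base64.b64encode(key).decode("ascii"),
        "x-goog-encryption-key-sha256": base64.b64encode(hashlib.sha256(key).digest()).decode("ascii"),
    }


# For illustration only: in practice the key comes from your own key management system.
key = os.urandom(32)
headers = csek_headers(key)
```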

Auditing is crucial to an effective data management strategy. It is also important for your security strategy as a whole; we discuss that topic later in this post.

The section on Granting permissions discusses how you enforce who has access to what.

To classify data and process it appropriately, you can take advantage of the Cloud Data Loss Prevention (DLP) API, which helps you classify and redact sensitive data elements. Because it is integrated with the platform, using it to classify and redact data in Google Cloud services such as Cloud Storage is relatively easy compared with solutions that are not integrated with the platform.
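To show what inspection, redaction, and tokenization mean in practice, here is a local Python sketch. It is not the Cloud DLP API, which provides managed and far more robust infoType detectors and de-identification transformations; the two regex detectors and their names are hypothetical. The tokenization step uses a keyed HMAC-SHA256, so the same value always maps to the same token (useful for joins) without revealing the original.

```python
import hashlib
import hmac
import re
from typing import List

# Hypothetical, deliberately naive detectors. The Cloud DLP API's built-in
# infoType detectors are far more robust than these regular expressions.
DETECTORS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN_LIKE": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_info_types(text: str) -> List[str]:
    """Classification: which kinds of sensitive data appear in the text?"""
    return sorted(name for name, rx in DETECTORS.items() if rx.search(text))


def redact(text: str) -> str:
    """Redaction: replace each finding with its type name."""
    for name, rx in DETECTORS.items():
        text = rx.sub(f"[{name}]", text)
    return text


def tokenize(value: str, key: bytes) -> str:
    """Pseudonymization: keyed HMAC-SHA256, so equal inputs give equal tokens."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "TOKEN_" + digest[:16]


KEY = b"hypothetical-tokenization-key"  # keep real keys in a key management service
```

For example, `redact("Contact jane.doe@example.com, SSN 123-45-6789.")` yields `"Contact [EMAIL], SSN [SSN_LIKE]."`.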

We mentioned security zones (VPC Service Controls) and other network controls earlier; these can be used to help enforce data access paths.

Protecting your application

When protecting your application, much of the focus is on preventing unauthorized access from the perimeter. If you’ve followed best practice when developing your application, you will have taken a defense-in-depth approach, not only protecting the perimeter of your environment but also layering security controls throughout your application tiers. We discussed several controls that help with that defense-in-depth approach earlier.

When looking at perimeter controls, you focus on end-user access points: are users authorized to access your application? Can you defend against DDoS and application attacks such as SQL injection?

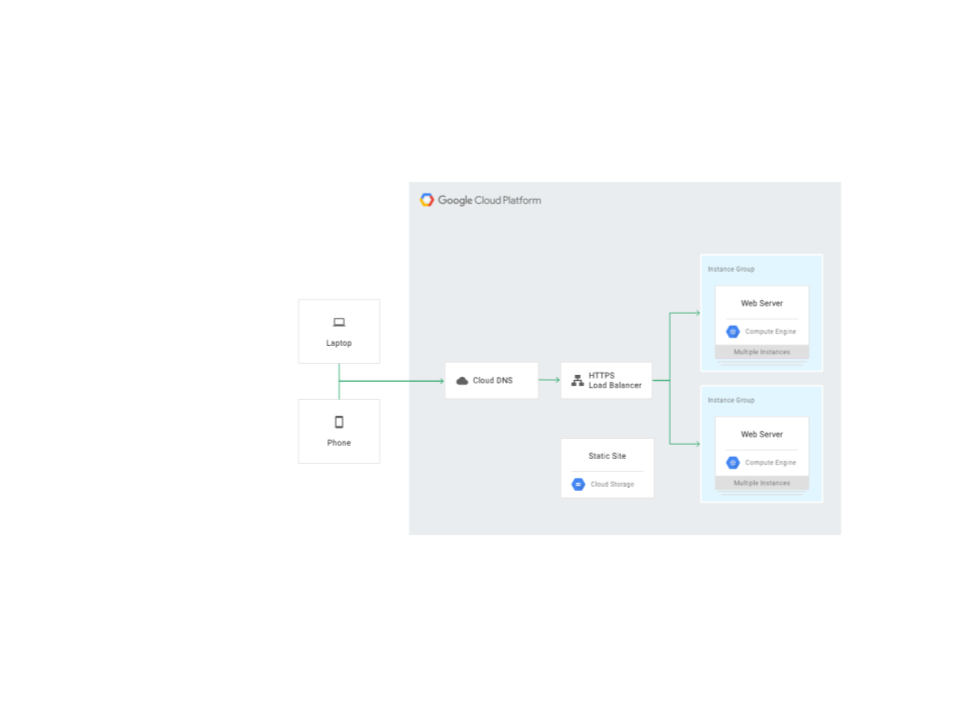

To illustrate how Google Cloud takes a joined-up approach, let’s consider an internet-facing application deployed on Google Cloud.

The architecture depicted is a common pattern that uses Cloud Load Balancing (GLB) as the entry point where users access your application. Cloud Load Balancing is implemented entirely in software by the Google Front Ends (GFEs). The GFEs are distributed around the world but load-balance in sync with each other by working with other software-defined systems and the control plane.

Because GLB uses a single global anycast IP address, your resources fronted by GLB in all regions share the same frontend VIP. As our example is an internet-facing application, we are concerned with HTTP(S) Load Balancing or SSL Proxy Load Balancing. These are built on Google infrastructure and can mitigate and absorb many Layer 4 and lower attacks, such as SYN floods, IP fragment floods, and port exhaustion.

The load balancing service can front instances in multiple regions. Because it can front instances wherever they are located in the Google Cloud network, attack traffic can be dispersed across instances around the globe.

In addition, the instances behind a load balancer are most often deployed in managed instance groups that can autoscale in response to large traffic spikes.

To complement the features inherent in the load balancing service, you can also use Cloud Armor.

Cloud Armor works with GLB and lets you deploy and customize defenses for your internet-facing applications. Like GLB, Google Cloud Armor is delivered at the edge of Google’s network, helping to block attacks close to their source. It is designed to defend services behind HTTP(S) Load Balancing against infrastructure DDoS attacks. Some of its defensive capabilities include the ability to:

- Allow or block traffic

- Deploy IP allowlists and denylists for both IPv4 and IPv6 traffic

- Enable geolocation-based control, and application-aware defense against SQL injection (SQLi) and cross-site scripting (XSS) attacks (in alpha)
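Cloud Armor security policies are evaluated in priority order (lowest number first), the first matching rule decides the outcome, and a default rule at the lowest priority (2147483647) catches everything else. The following Python sketch models only IP-based allow/deny matching for IPv4 and IPv6 with hypothetical ranges; real policies also support geolocation and preconfigured WAF expressions, which are not modeled here.

```python
import ipaddress
from dataclasses import dataclass
from typing import Tuple

DEFAULT_RULE_PRIORITY = 2147483647  # priority of a security policy's default rule


@dataclass(frozen=True)
class ArmorRule:
    priority: int                # evaluated lowest number first
    action: str                  # "allow" or "deny"
    src_ranges: Tuple[str, ...]  # IPv4 and/or IPv6 CIDRs


def evaluate(rules, client_ip: str) -> str:
    """Return the action of the first matching rule in priority order."""
    ip = ipaddress.ip_address(client_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        # An IPv6 address never matches an IPv4 network (and vice versa).
        if any(ip in ipaddress.ip_network(cidr) for cidr in rule.src_ranges):
            return rule.action
    raise LookupError("no rule matched; a policy should end with a catch-all default rule")


policy = [
    ArmorRule(100, "deny", ("203.0.113.0/24", "2001:db8:bad::/48")),   # denylist
    ArmorRule(50, "allow", ("203.0.113.7/32",)),                        # allowlisted exception
    ArmorRule(DEFAULT_RULE_PRIORITY, "allow", ("0.0.0.0/0", "::/0")),   # default rule
]
```

Because the allowlisted exception has a lower priority number than the denylist, `203.0.113.7` is allowed while the rest of `203.0.113.0/24` is denied.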

We discussed BeyondCorp and Identity-Aware Proxy (IAP) earlier. You can augment GLB and Cloud Armor by using IAP to manage authentication and authorization in combination with Cloud IAM.

When an application or resource is protected by Cloud IAP, it can only be accessed through the proxy by users who have the correct Cloud Identity and Access Management (Cloud IAM) role.

In addition to these integrated tools, you can augment your defenses with a partner solution, perhaps extending tooling you already use on-premises to help protect your Google Cloud resources and so leveraging your existing skills.

It’s highly probable that your application has APIs that third-party services need to connect to, so you also need to consider how to ensure that API calls are authenticated. This is typically accomplished by deploying an API proxy, an abstraction layer that “fronts” your backend service APIs. As a minimum, it provides not only proxying but also management features, security features such as authenticating and validating callers of your APIs using short-lived tokens, and logging.
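As a rough illustration of two of those proxy responsibilities, the Python sketch below validates short-lived signed tokens and applies a token-bucket rate limit. Everything in it is hypothetical (the token format, the shared secret, the limits); it is not how Cloud Endpoints or Apigee Edge are implemented or configured, and in production you would rely on the proxy’s own features and standard token formats such as JWTs issued by an identity provider.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"hypothetical-shared-secret"  # in practice, fetched from a secret manager


def issue_token(client_id: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Create a short-lived token: "client_id:expiry:signature", base64url-encoded."""
    now = time.time() if now is None else now
    payload = f"{client_id}:{int(now) + ttl_seconds}"
    sig = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(f"{payload}:{sig}".encode()).decode()


def validate_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the client id if the token is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        decoded = base64.urlsafe_b64decode(token.encode()).decode()
        client_id, expiry_str, sig = decoded.rsplit(":", 2)
        expiry = int(expiry_str)
    except ValueError:  # bad base64, bad UTF-8, wrong shape, or non-numeric expiry
        return None
    expected = hmac.new(SECRET, f"{client_id}:{expiry_str}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()) or now > expiry:
        return None
    return client_id


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` requests per second."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last: Optional[float] = None

    def allow(self, now: float) -> bool:
        if self.last is not None:
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keeping tokens short-lived limits the damage a leaked token can do, and the token bucket allows short bursts while capping the sustained request rate per client.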

Google Cloud gives you two options: Cloud Endpoints, which is a great choice if you are all-in on Google Cloud, or Apigee Edge, which is cross-platform and has a set of enterprise features in addition to the core features expected of any API proxy, such as rate limiting, quotas, and analytics. You may already be using Apigee Edge on-premises, and it works equally well on Google Cloud.

In addition to the defense-in-depth strategy, you also need good coding practice in which security is a first-class citizen, with as much attention paid to writing secure code as to writing performant code. Use security scanners as part of your testing pipeline. Google Cloud’s integrated scanner service (Web Security Scanner) covers some basic vulnerabilities and may be worth investigating.

Enforcement / monitoring / alerting

Underlying all the security concerns discussed in this post is the need to watch what’s going on. Are your policies appropriate? Do you know who is accessing what? Is your traffic flowing as expected? You want to be able to look for and alert on anomalies, audit your environment, and enforce your policies.

On-premises, there are many ways to monitor your applications, collect logs, gain insights from those logs, and alert on anomalies. You can extend many common on-premises solutions to Google Cloud and, if you decide to, use Security Command Center to provide a single-pane-of-glass, security-focused view of your on-premises and Google Cloud resources.

Google Cloud has monitoring and logging built into the platform. The Cloud Logging service collects and stores logs from applications and services. When you run applications on Compute Engine (GCE), the Logging agent supports a set of common third-party applications. The Cloud Logging agent is a modified version of the fluentd log data collector, so if you already use a fluentd-based log collector on-premises, how it works will be familiar.

You can also use the API to export your logs to other services. If you have a centralized way to collect logs, you can export your Google Cloud logs to your on-premises centralized sink.

An important reason for collecting logs is to provide an audit trail. Google Cloud provides audit logs to help you answer the question “who did what, where, and when?” by tracking events that affect your project, such as API calls and system events. As with the other log types, these can be exported for longer-term storage.
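Exported audit log entries are JSON, so answering “who did what, where, and when?” can be as simple as pulling a few fields out of each entry. The Python sketch below reads entries as newline-delimited JSON (as you might find in an export) and uses the `protoPayload.authenticationInfo.principalEmail`, `protoPayload.methodName`, `protoPayload.resourceName`, and `timestamp` fields of audit log entries; the sample entry and its values are hypothetical.

```python
import json
from typing import Dict, Iterable, List


def summarize(entry: dict) -> Dict[str, str]:
    """Reduce one audit log entry to who / did what / where / when."""
    payload = entry.get("protoPayload", {})
    return {
        "who": payload.get("authenticationInfo", {}).get("principalEmail", "unknown"),
        "did_what": payload.get("methodName", "unknown"),
        "where": payload.get("resourceName", "unknown"),
        "when": entry.get("timestamp", "unknown"),
    }


def summarize_export(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Parse newline-delimited JSON log entries and summarize each one."""
    return [summarize(json.loads(line)) for line in lines if line.strip()]


# Hypothetical exported entry recording an IAM policy change on a project.
sample_export = [
    json.dumps({
        "timestamp": "2024-05-01T10:02:00Z",
        "protoPayload": {
            "serviceName": "cloudresourcemanager.googleapis.com",
            "methodName": "SetIamPolicy",
            "resourceName": "projects/my-project",
            "authenticationInfo": {"principalEmail": "alice@example.com"},
        },
    }),
]
rows = summarize_export(sample_export)
```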

To gain insights into network traffic on-premises, you may use features like NetFlow, which lets you export network telemetry data to reporting collectors. Google Cloud provides VPC Flow Logs to give you insights into your network traffic, and the flow log data is visible in Stackdriver (now Cloud Logging), like the other logs discussed earlier.
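As an example of the kind of insight flow logs give you, the following Python sketch totals bytes per source, destination, and destination port to find the top talkers. It assumes records shaped like VPC Flow Logs entries, with connection details under `jsonPayload.connection` and byte counts in `jsonPayload.bytes_sent` (converted with `int()` so either a string or a number works); the addresses and helper function are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple

FlowKey = Tuple[str, str, int]


def top_talkers(records: Iterable[dict], n: int = 3) -> List[Tuple[FlowKey, int]]:
    """Sum bytes per (src_ip, dest_ip, dest_port) and return the n largest."""
    totals: Counter = Counter()
    for record in records:
        payload = record.get("jsonPayload", {})
        conn = payload.get("connection", {})
        key = (conn.get("src_ip"), conn.get("dest_ip"), conn.get("dest_port"))
        totals[key] += int(payload.get("bytes_sent", 0))
    return totals.most_common(n)


def flow(src: str, dst: str, port: int, sent: str) -> dict:
    """Build a minimal, hypothetical flow log record for the example."""
    return {"jsonPayload": {"connection": {"src_ip": src, "dest_ip": dst, "dest_port": port},
                            "bytes_sent": sent}}


records = [
    flow("10.0.1.5", "10.0.2.9", 443, "1000"),
    flow("10.0.1.5", "10.0.2.9", 443, "500"),
    flow("10.0.3.7", "10.0.2.9", 22, "200"),
]
```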

Being able to alert and act upon those alerts is an important part of the monitoring puzzle.

By creating logs-based metrics from your audit logs and/or application logs, you can create charts and alerting policies. You can set up alerts to notify you through the same channels you use with your on-premises systems, perhaps email, PagerDuty, HipChat, or Slack.
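Conceptually, a logs-based metric counts log entries that match a filter, and an alerting policy fires when that count crosses a threshold within a time window. The Python sketch below reproduces that idea locally for `SetIamPolicy` audit entries; it is not the Cloud Monitoring API, and the threshold, window, and sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable


def parse_ts(ts: str) -> datetime:
    # Handles second/microsecond precision with a trailing "Z". Real log timestamps
    # can carry nanoseconds, which would need a more tolerant parser.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def count_matching(entries: Iterable[dict], method: str, window_end: datetime,
                   window: timedelta) -> int:
    """The 'metric': entries with this methodName inside (window_end - window, window_end]."""
    start = window_end - window
    return sum(
        1 for e in entries
        if e.get("protoPayload", {}).get("methodName") == method
        and start < parse_ts(e["timestamp"]) <= window_end
    )


def should_alert(entries, method: str, window_end: datetime, window: timedelta,
                 threshold: int) -> bool:
    """The 'alerting policy': fire when the count exceeds the threshold."""
    return count_matching(entries, method, window_end, window) > threshold


def entry(method: str, ts: str) -> dict:
    """Build a minimal, hypothetical audit log entry."""
    return {"timestamp": ts, "protoPayload": {"methodName": method}}


entries = [
    entry("SetIamPolicy", "2024-05-01T10:01:00Z"),
    entry("SetIamPolicy", "2024-05-01T10:02:00Z"),
    entry("SetIamPolicy", "2024-05-01T10:04:00Z"),
    entry("SetIamPolicy", "2024-05-01T09:50:00Z"),  # outside the window
    entry("GetIamPolicy", "2024-05-01T10:03:00Z"),  # different method
]
end = datetime(2024, 5, 1, 10, 5, tzinfo=timezone.utc)
```

With a five-minute window ending at 10:05, three `SetIamPolicy` calls are counted, so a threshold of 2 would fire an alert.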

Even after implementing your layers of security controls, you want to ensure that your policies are enforced. Human error, together with the difficulty of identifying every area that needs protecting, is a common issue even for the most meticulous operators. With Google Cloud, you can use Cloud Functions along with audit logs to validate changes as they happen and discard or roll back those that break your policies.
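A minimal sketch of that pattern in Python: audit log entries are routed (for example, via a log sink) to a Pub/Sub topic, and a Pub/Sub-triggered Cloud Function receives each entry base64-encoded in `event["data"]`. The function flags firewall rules that open ingress to the whole internet. Treat the details as assumptions: the shape of `protoPayload.request` for firewall inserts should be checked against real entries, and `remediate` is a hypothetical hook where you would call the Compute Engine API to delete or modify the rule.

```python
import base64
import json

OPEN_TO_WORLD = {"0.0.0.0/0", "::/0"}


def needs_rollback(log_entry: dict) -> bool:
    """True if the entry records a firewall insert that allows ingress from anywhere."""
    payload = log_entry.get("protoPayload", {})
    if not payload.get("methodName", "").endswith("compute.firewalls.insert"):
        return False
    request = payload.get("request", {})  # assumed to carry the new rule's fields
    return bool(OPEN_TO_WORLD & set(request.get("sourceRanges", [])))


def hypothetical_remediate(resource_name: str) -> None:
    # Placeholder: a real function would call the Compute Engine API here.
    print(f"would roll back {resource_name}")


def handle_pubsub_event(event, context=None, remediate=hypothetical_remediate) -> str:
    """Entry point in the (event, context) shape of a Pub/Sub-triggered background function."""
    entry = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    if needs_rollback(entry):
        remediate(entry["protoPayload"]["resourceName"])
        return "rolled_back"
    return "ok"
```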

If you are comfortable with open source software (OSS), you can use tooling such as Forseti Security, a community-driven set of modular tools that originated from tooling used within Google. It provides an inventory of your resources, monitors and enforces your policies, and gives you a way to understand your IAM policies.

If you got this far, thanks for reading. I hope you took away a few things to help you think about how to approach security on your journey to the cloud, namely:

- Tooling in the cloud may differ from what you are used to on-premises, but the principles are the same.

- Google Cloud (and, by implication, any of the larger cloud providers) provides integrated tooling.

- The same defense-in-depth principles apply in the cloud as they do on-premises.

- You can use existing tooling or OSS tooling with your cloud deployments.