Dev Locally With LLMs

Continuing my LLMs for non-ML experts series, this is a post on how I got to my dev env.

I’ve been playing around with local models, learning how to create RAG-based applications by hosting models locally on my laptop and also on our home server (yes, I still hug tin). I thought it would be nice to walk through how I got to my setup and then how I intend to move to using AI Studio (in another post).

I started developing with LLMs locally as a way to do early experimentation and prototyping. I had all the flexibility and control I needed. It’s where I started playing around with different models, vector databases, embeddings, quantization and other rabbit holes. I’ve not got round to exploring agents yet, but that’s coming! And yes, I know, another bunch of buzzwords.

Security and privacy are a great by-product of this approach: you can prototype quickly while keeping some control over the data used to fine-tune the model and over who can access it. However, I wasn’t exposing access to my fine-tuned local model, or by implication any local data I may have been using. I mostly used this blog to fine-tune, so it’s a moot point for my purposes.

Local development does come with some limitations, and here’s what Bard had to say about that:

- Hardware requirements: Running LLMs locally can be demanding on hardware resources, requiring powerful machines with sufficient RAM, CPU, and GPU capabilities. This can be a barrier for developers with limited hardware resources.

- Scalability limitations: Local setups may not be scalable enough to handle increasing demand and user traffic, especially for large-scale applications with growing user bases.

- Maintenance overhead: Local development requires ongoing maintenance and updates to the LLM models and infrastructure, which can be time-consuming and error-prone.

To manage local LLMs I have been using a couple of local LLM management frameworks, which abstract away the complexity of managing, maintaining and running LLMs locally.

Ollama is an open-source framework that simplifies the deployment and management of large language models on Linux systems (and it also runs on Macs). It provides a CLI for managing LLM configurations, installing models, and running inference. It also has a UI.

GPT4All is a community-driven project that aims to democratise access to large language models by providing a free and open-source platform for running them locally. Despite the name, it runs open-source models rather than GPT-4 itself. You can read the paper here (it’s short).

These local LLM management frameworks offer several advantages:

- Simplified deployment: They provide a user-friendly interface for deploying and managing LLM models without the need for extensive configuration or scripting.

- Resource optimization: They employ techniques like virtualization and resource allocation to optimise model performance and minimise hardware requirements.

- Centralised management: They provide a centralised platform for managing LLM models, configurations, and data, simplifying model sharing and collaboration.

A colleague also introduced me to OpenLLM.

I’ve pretty much settled on Ollama, and I also use it with the ChatGPT-Style Web UI Client for Ollama.
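Besides the CLI and the web UI, a running Ollama server can also be called over HTTP. The snippet below is a minimal sketch of that, assuming the server is listening on its default address `http://localhost:11434` and that the `llama2:7b` model used later in this post has already been pulled. The helper names `build_generate_request` and `generate` are mine, purely for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_BASE = "http://localhost:11434"


def build_generate_request(prompt, model="llama2:7b", base=OLLAMA_BASE):
    """Build (but do not send) a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama2:7b"):
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, something like `print(generate("In one sentence, what is a vector database?"))` should print the model's answer. Nothing leaves the machine, which is the point of the local setup.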

But what about your IDE? I hear you cry!

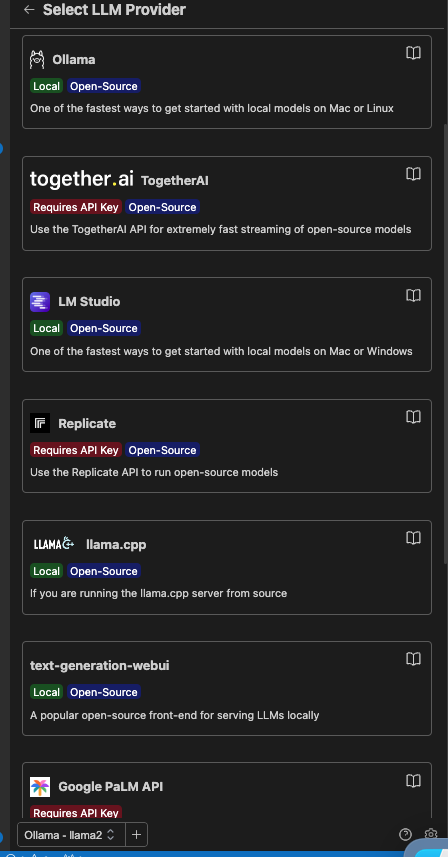

As well as using Bard more these days (the Double-check response button is a great way to get confidence in the responses), I also use a plugin called Continue with my IDE of choice (Visual Studio Code).

I use Continue when dabbling in Visual Studio Code to do things such as occasionally checking how to do something on Google Cloud, or looking something up, with Continue acting as my assistant. It saves me jumping out of the IDE. However, if I am actually working with Google Cloud directly, then I find that Duet AI embedded in the Google Cloud console is so good, and really is the way to go! I use Continue with Ollama, using Code Llama as the local model.

These days my hands-on work tends to be gcloud commands, YAML or Python, infrequently enough that I need to be reminded how to do a thing. I have tried Code Llama Python, but I preferred the more generic codellama. To set it up once installed, I just needed to configure the ContinueConfig object in ~/.continue/config.py to point to Ollama using codellama, as below:

models=Models(
    default=Ollama(model="codellama-7b")
)

When I spun up my dev env for this blog post, I noticed this in the Continue plugin, along with the fact that I could no longer see codellama as an option (which shows how rarely I spin this up, and why I need an AI assistant to remind me of things I haven’t used in a while).

Clicking through gives you guidance on how to set up your preferred LLM provider. A nice touch, as that wasn’t available when I started using Continue!

So now it’s a JSON file, and you have this:

{
  "title": "CodeLlama-7b",
  "model": "codellama-7b",
  "contextLength": 2048,
  "completionOptions": {},
  "apiBase": "http://localhost:11434",
  "provider": "ollama"
}
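If you would rather script that change than click a preset, here is a rough sketch of adding the entry above to the config file. It rests on two assumptions I have not verified against every Continue release: that the file lives at `~/.continue/config.json`, and that model entries sit in a top-level `"models"` list. The helpers `upsert_model` and `update_config_file` are hypothetical names, not part of Continue.

```python
import json
from pathlib import Path

# Assumption: Continue reads its settings from this file.
CONFIG_PATH = Path.home() / ".continue" / "config.json"

# The entry shown above, as a Python dict.
CODELLAMA_ENTRY = {
    "title": "CodeLlama-7b",
    "model": "codellama-7b",
    "contextLength": 2048,
    "completionOptions": {},
    "apiBase": "http://localhost:11434",
    "provider": "ollama",
}


def upsert_model(config, entry):
    """Return a copy of config whose "models" list ends with entry.

    Any existing entry with the same title is replaced; other keys are kept.
    Assumption: models live in a top-level "models" list.
    """
    others = [m for m in config.get("models", []) if m.get("title") != entry["title"]]
    return {**config, "models": others + [entry]}


def update_config_file(path=CONFIG_PATH, entry=CODELLAMA_ENTRY):
    """Read the config file (if any), add or replace the entry, and write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(upsert_model(config, entry), indent=2))
```

Back up the existing file before running `update_config_file()`. The presets in the plugin do the same job with less risk.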

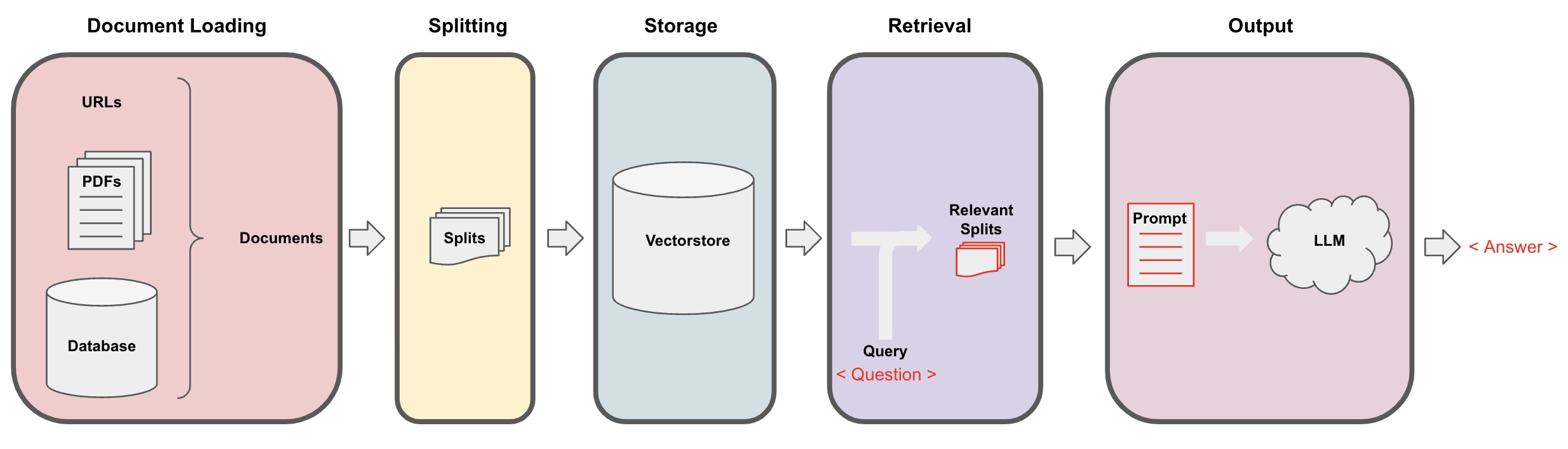

I also use LangChain, as it makes it really fast to build simple apps and is a great way to learn about the building blocks required to build LLM-based applications.

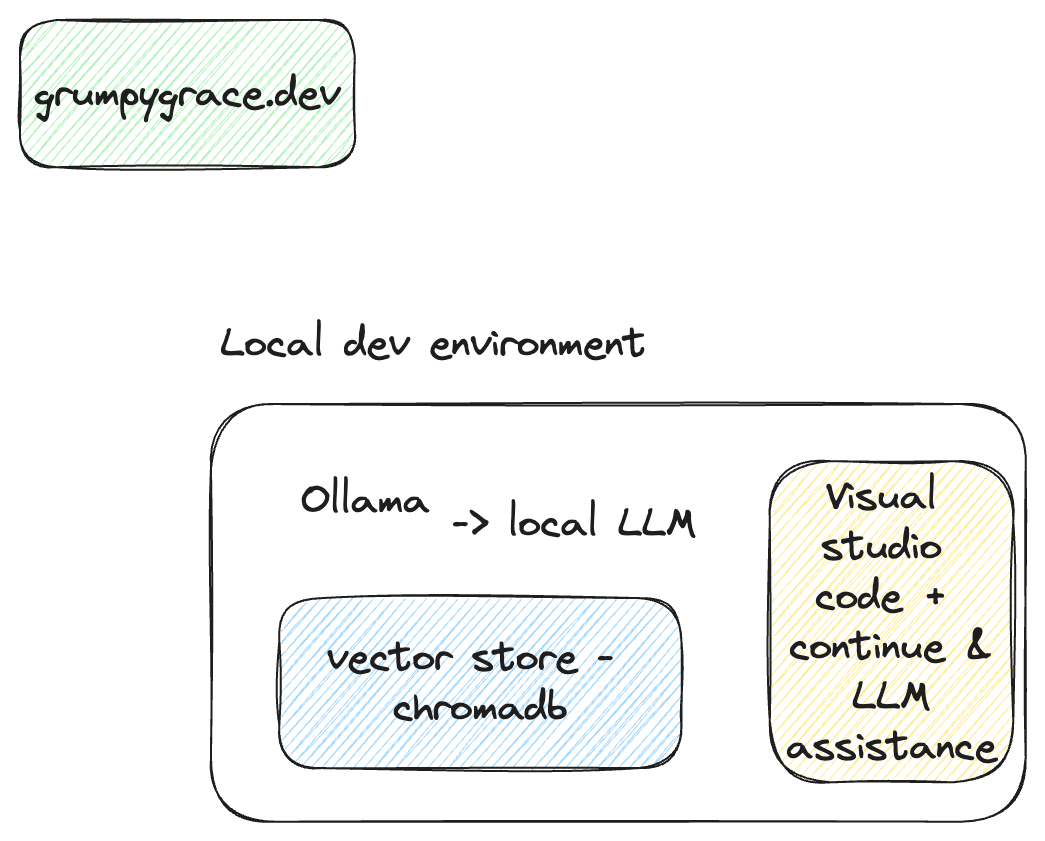

So my dev env looks like this

Creating an app that looks like the diagram below is a breeze with LangChain.

Diagram of a RAG app using LangChain (image from https://js.langchain.com/assets/images/qa_flow-9fbd91de9282eb806bda1c6db501ecec.jpeg)

For a walk through see QA and Chat over Documents

Here are the few lines of code it takes to set that up, using a local copy of llama2 over some of my blog posts that I can then do Q&A with.

from langchain.document_loaders import WebBaseLoader
from langchain.vectorstores import Chroma
from langchain.embeddings import GPT4AllEmbeddings
from langchain import PromptTemplate
from langchain.llms import Ollama
from langchain.callbacks.manager import CallbackManager
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
from langchain.chains import RetrievalQA
from langchain.text_splitter import RecursiveCharacterTextSplitter
import sys
import os

class SuppressStdout:
    def __enter__(self):
        self._original_stdout = sys.stdout
        self._original_stderr = sys.stderr
        sys.stdout = open(os.devnull, 'w')
        sys.stderr = open(os.devnull, 'w')

    def __exit__(self, exc_type, exc_val, exc_tb):
        sys.stdout.close()
        sys.stdout = self._original_stdout
        sys.stderr = self._original_stderr

# load the web docs and split them into chunks
loader = WebBaseLoader(['https://grumpygrace.dev/posts/analogieswithcs/', 'https://grumpygrace.dev/posts/castle/', 'https://grumpygrace.dev/posts/top-10-sec-llm/'])
data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)
all_splits = text_splitter.split_documents(data)

with SuppressStdout():
    vectorstore = Chroma.from_documents(documents=all_splits, embedding=GPT4AllEmbeddings())

while True:
    query = input("\nQuery: ")
    if query == "exit":
        break
    if query.strip() == "":
        continue

    # Prompt
    template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
{context}
Question: {question}
Helpful Answer:"""
    QA_CHAIN_PROMPT = PromptTemplate(
        input_variables=["context", "question"],
        template=template,
    )

    llm = Ollama(model="llama2:7b", callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]))
    qa_chain = RetrievalQA.from_chain_type(
        llm,
        retriever=vectorstore.as_retriever(),
        chain_type_kwargs={"prompt": QA_CHAIN_PROMPT},
    )

    result = qa_chain({"query": query})
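To make the retrieval step in the script above less of a black box, here is a toy sketch of what "retrieve, then prompt" boils down to. It is not what Chroma or GPT4AllEmbeddings do internally: the `embed` function here is just a word-count vector I made up for illustration, whereas a real embedding model produces dense vectors that capture meaning. The sample chunks are invented too. The shape of the flow is the same, though: score every chunk against the question, keep the best few, and paste them into the prompt template.

```python
import math
import re
from collections import Counter

# Same prompt wording as the script above.
TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
{context}
Question: {question}
Helpful Answer:"""


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query, best first."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query, chunks, k=2):
    """Fill the template with the retrieved chunks and the user's question."""
    context = "\n\n".join(retrieve(query, chunks, k))
    return TEMPLATE.format(context=context, question=query)


# Invented sample chunks, standing in for the split blog posts.
chunks = [
    "Castles have moats and walls for defence in depth.",
    "Prompt injection is a top security risk for LLM apps.",
    "Analogies help explain cloud security concepts.",
]
prompt = build_prompt("What is prompt injection?", chunks, k=1)
```

The resulting `prompt` string is what would be handed to the LLM. In the real script, LangChain's `RetrievalQA` does this wiring for you.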

Now I’m tempted to do more with AI Studio and Vertex AI, so I started thinking about how to extend my local development and hosting to the cloud. Google Cloud has two environments for developing with LLMs, and which one is for you depends (once an architect, always an architect!).

I asked Bard to tell me the difference between the two:

When to use AI Studio

- You are new to AI and want to learn the basics

- You are working on a small or simple AI project

- You want to use pre-built models and don’t need to customize them

- You don’t want to set up your own infrastructure

- You want to collaborate with other people on an AI project

When to use Vertex AI

- You are a developer or data scientist who needs to build production-grade AI applications

- You need to use large or complex models

- You need to deploy your AI applications to GCP

- You need more flexibility and control over your AI infrastructure

I think Bard provided a good enough answer, looking at the posts here and here. For my simple purpose of creating a chatbot to ask questions of my blog posts, AI Studio it is!

So first I needed to get my API key, but being in the UK this meant I had to wait, as it wasn’t available in my region at the time of writing. (I am doing this as if I were a dev with no alternative way to get my hands on this, so wait it was.) For now I’ll leave this here and continue the journey in a part II when I can get an API key.

However, if you’re impatient to get on with your journey to using Vertex AI, this notebook is a great way to do so: https://github.com/run-llama/llama_index/blob/main/docs/examples/managed/GoogleDemo.ipynb

A couple of notes:

- I can swap out the document loader, replacing WebBaseLoader with one that points to locally stored docs. If you want a walkthrough of the code, there is a friendly AI chatbot/code copilot who can do that for you 😀

- This code does mean I have to recreate the vector database each time. You don’t want to do that for anything you use semi-regularly, and definitely not in production, but as I was comparing local models that was fine for me.

- I’ve been using Ollama and Continue for a while, but it seems the setup has changed since I originally did it 🤷🏽 so you now need to edit a config.json instead, as described here, or just click the presets in Continue and let it do the editing for you!

- There is no guarantee that the code snippet, or my dev env as I describe it, will still work months in the future. I seem to have to tweak something each time I come back to it!

- Alas, at the time of writing, being in the UK I couldn’t get my Google PaLM API key, but patience is a virtue.
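On the note above about recreating the vector database on every run, one way round it is to build the index once and reload it from disk afterwards. The sketch below shows that pattern with a small hypothetical helper, `load_or_build`. The two Chroma functions use the `persist_directory` argument of LangChain's Chroma wrapper; I am assuming the same langchain-era imports as the script in this post, and depending on your version you may also need an explicit `persist()` call after building. The `./chroma_db` directory name is just an example.

```python
from pathlib import Path


def load_or_build(persist_dir, build, load):
    """Reuse an index saved in persist_dir if it has content; otherwise build it once."""
    path = Path(persist_dir)
    if path.is_dir() and any(path.iterdir()):
        return load(str(path))
    path.mkdir(parents=True, exist_ok=True)
    return build(str(path))


def build_chroma(persist_dir, documents):
    """Embed the documents and save the index to disk (needs langchain, chromadb, gpt4all)."""
    from langchain.embeddings import GPT4AllEmbeddings
    from langchain.vectorstores import Chroma

    return Chroma.from_documents(
        documents=documents,
        embedding=GPT4AllEmbeddings(),
        persist_directory=persist_dir,
    )


def load_chroma(persist_dir):
    """Open an index previously saved by build_chroma, without re-embedding anything."""
    from langchain.embeddings import GPT4AllEmbeddings
    from langchain.vectorstores import Chroma

    return Chroma(persist_directory=persist_dir, embedding_function=GPT4AllEmbeddings())
```

In the script, the `Chroma.from_documents(...)` line would then become something like `vectorstore = load_or_build("./chroma_db", lambda d: build_chroma(d, all_splits), load_chroma)`. Delete the directory whenever the source posts change and you want a fresh index.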