Automating security testing of LLM pipelines

In this post I’m exploring how to automate security & privacy testing as part of your MLOps pipeline when developing with LLMs.

This post will help you understand what is involved in implementing automated security & privacy tests as part of your MLOps pipeline. However, if you want to actually implement an MLOps pipeline with integrated security & privacy testing, I have linked out to the “how” throughout the post, so you can jump out whenever you want to get hands-on. So there is something for everyone with a vested interest in this topic.

It’s my take on what you can do, so use it as a serving suggestion. I just care that you do actually implement automated security & privacy testing, however you choose to do that 🙂!

Automating security and privacy testing of an MLOps pipeline is essential to ensure that the pipeline is checked for security and privacy risks whenever you push any changes. This is important because new vulnerabilities can be introduced at any time, such as when new data is added to the corpus used to train the model.

Benefits of automating security and privacy testing of an MLOps pipeline include:

- Improved efficiency and accuracy - Manual testing is time-consuming and error-prone. Automated testing is more efficient and accurate than manual testing.

- Increased scalability - Automated testing can be scaled to complex and dynamic MLOps pipelines.

- Early detection of vulnerabilities - Automated testing can help to identify security and privacy vulnerabilities early in the development process.

- Continuous monitoring - Automated testing can help to ensure that the pipeline is continuously monitored for security and privacy risks.

I am assuming the following:

- You are following best practices and have separate dev, test, stage and production environments.

- You will be using Vertex AI Pipelines for your MLOps pipeline (usual note re replacing it with whatever the equivalent is on your cloud of choice).

- You integrate a CI/CD process to work with your MLOps pipeline whenever updates or changes are being made.

- You have implemented guardrails and adopted mitigating strategies (this post is about testing, not implementing the guardrails). I talk about mitigating strategies in https://grumpygrace.dev/posts/top-10-sec-llm/

“Serverless machine learning pipelines on Google Cloud” is a nice summary of how Vertex AI Pipelines work. tl;dr: each step in a pipeline is a container, and the output of each step can be an input to the next step. It’s serverless, so it handles provisioning and scaling the infrastructure to run your pipeline. This means you only pay for the resources used while your pipeline runs. It supports Kubeflow Pipelines (KFP) and TensorFlow Extended (TFX).

Happily, you don’t need to start from scratch. You can start from this repo, which has a reference implementation of Vertex AI Pipelines. It includes Terraform scripts to create the infrastructure on Google Cloud, CI/CD integration using Cloud Build, and existing templates to support training and prediction pipelines for common ML frameworks such as TensorFlow and XGBoost. It uses pytest as the testing framework.

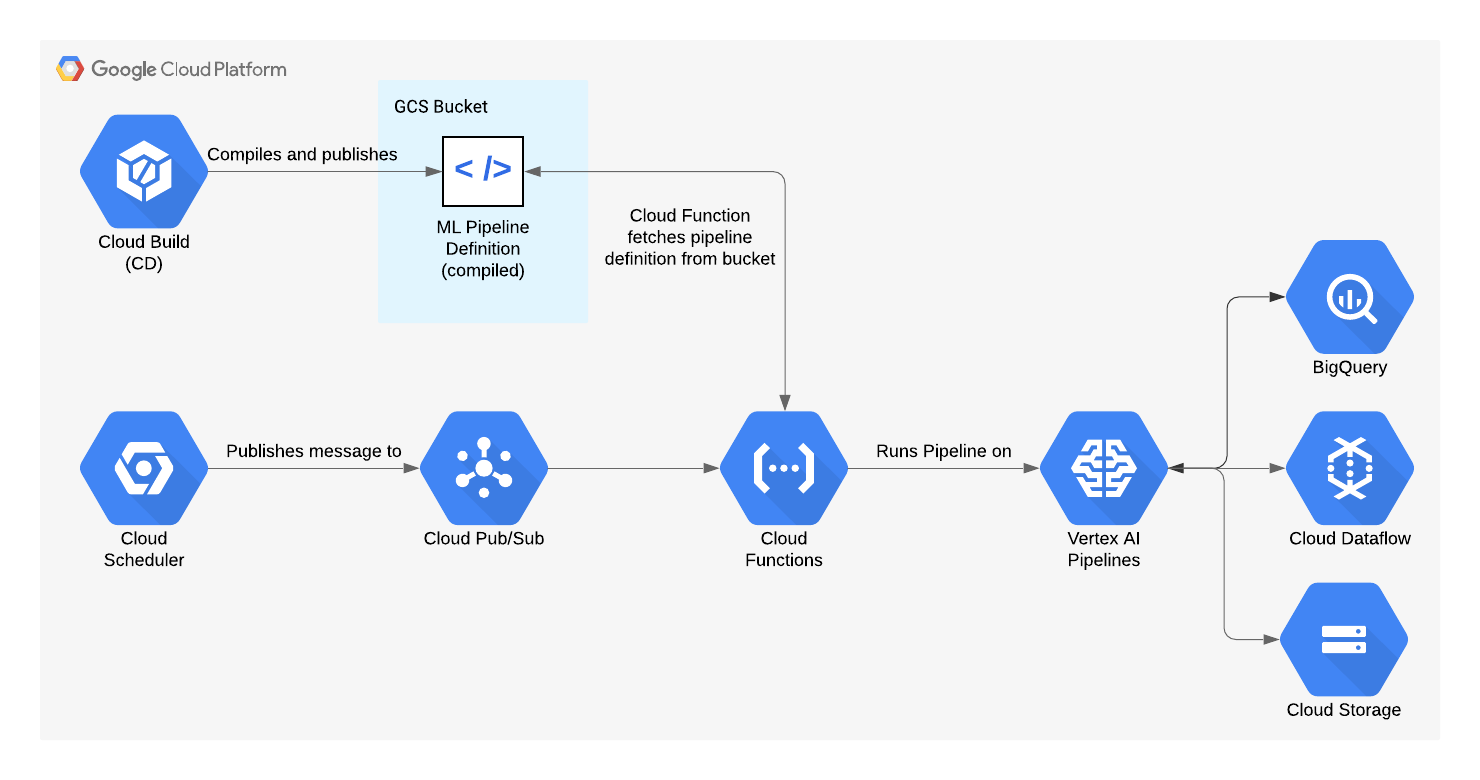

The e2e process looks like diagram 1 (liberally borrowed from the repo).

Diagram 1: End to end Deployment Pipeline


I would suggest having a poke around this repo to see how Vertex AI Pipelines can be implemented, and at least looking at the pipeline tests to understand how to create pytest tests. This post will still be here if you want to get hands-on with this. 😀

There are various testing frameworks available, but I will stick to pytest, and thus Python, where I can, mostly for convenience. The nature of the issues you need to test for when developing with LLMs does make using traditional testing frameworks more of a challenge. But I’ve done a lot of digging around (to at least save you some time and effort, if nothing else) and it is feasible to automate the more “unique” tests required for a pipeline with end-to-end security tests.

When you need to update your model you will start your pipeline, and your tests should automatically be invoked as part of this as the ML process runs.

However, despite all this talk of automation, you will always need human feedback: the potential for bias, harmful outputs and so on means human eyes are needed to supplement the automated test results.

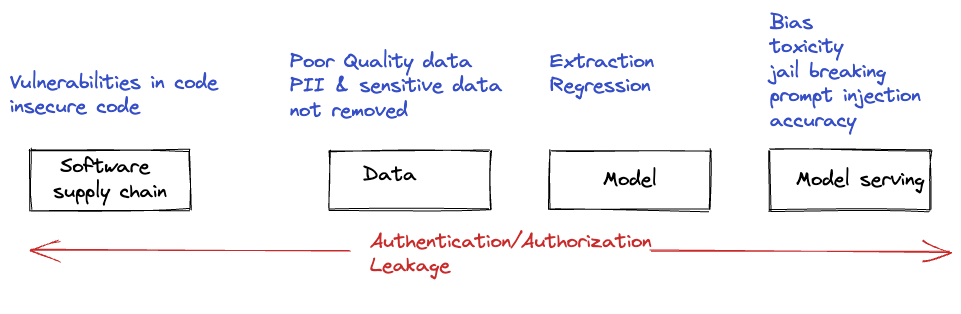

Diagram 2 : Testing pipeline


Diagram 2 illustrates where in the pipeline security & privacy testing should be incorporated as a basic starting point.

Software supply chain

“Download Assured OSS packages using a remote repository” (from the Google Cloud Assured Open Source Software docs) is a nice tutorial that shows how to integrate the Google Cloud Assured Open Source Software service with your CI/CD pipeline. This feature is part of Software Delivery Shield, which is Google Cloud’s fully managed, end-to-end software supply chain security solution.

Data collection/ prep

I would argue that the most important place to start is working out what tests you need at the data collection and preparation stage. Data is at the core of what LLMs need, so testing for issues at that stage is crucial; everything else really is closing the gate after the horse has bolted.

Yes, I know data can also be audio, video and images, but for the purposes of this post I will assume the data is text.

I know the more pedantic amongst you will be thinking: is this really a test, or just part of the data processing stage? Well, it’s both. The data needs to meet a quality bar before the pipeline process can proceed.

Implementing your tests while your corpus of data is still relatively small means that, as the corpus grows, you can test the additional data in manageable chunks.

You need to have a staging process for additional data being added to your corpus of data so you can validate that it passes your tests before adding to the existing corpus of data.

Your tests need to ensure that any PII and sensitive data is identified so that it can then be removed or de-identified from the corpus used to train the model.

You can do this by using Sensitive Data Protection (formerly Cloud Data Loss Prevention, DLP). “Inspect Google Cloud storage and databases for sensitive data” has example Python code for identifying data that does not meet the required criteria, e.g. because sensitive data is included. This example (I have a soft spot for this particular tutorial, so it is nice to be able to use it here) shows how you can use Cloud Functions to trigger a DLP scan automatically when data is uploaded to a GCS bucket.

You don’t necessarily need to use pytest for the data ingestion testing stage, but if you want to be consistent you could use a pytest function that calls Sensitive Data Protection. The test would assert that no findings are returned.
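A minimal sketch of such a check, assuming the `google-cloud-dlp` client library is installed and credentials are available. The project ID, the list of info types and the function names are placeholders of mine for illustration, not taken from the tutorials linked above.

```python
"""Sketch: surface Sensitive Data Protection findings so a test can assert on them."""

# Hypothetical values for illustration; replace with your own.
PROJECT_ID = "my-project"
INFO_TYPES = ["EMAIL_ADDRESS", "PHONE_NUMBER", "CREDIT_CARD_NUMBER"]


def make_dlp_client():
    """Create a real DLP client (needs google-cloud-dlp and credentials)."""
    # Imported lazily so the helper below can be exercised with a stub client.
    from google.cloud import dlp_v2

    return dlp_v2.DlpServiceClient()


def find_sensitive_data(client, project_id, text, info_types=INFO_TYPES):
    """Return the list of findings DLP reports for one chunk of text."""
    response = client.inspect_content(
        request={
            "parent": f"projects/{project_id}",
            "inspect_config": {
                "info_types": [{"name": name} for name in info_types],
                # Keep the matched values themselves out of test logs.
                "include_quote": False,
            },
            "item": {"value": text},
        }
    )
    return list(response.result.findings)
```

In a pytest module the test itself then reduces to something like `assert find_sensitive_data(make_dlp_client(), PROJECT_ID, staged_text) == []`. Passing the client in as an argument means you can swap in a stub when you want to check the test logic without calling the API.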

Another important check is testing for drift. Drift is where a model starts to adjust its predictions based on new training data that moves outside the original operating criteria, or where a model begins to degrade as the data changes and exhibits new features or concepts. This can lead to inaccurate responses and biased results. You can test for drift by incorporating unit tests within the pytest framework.

I found two solutions worth considering to help you test your data and models, both of which include security tests:

- Deepchecks: the Deepchecks docs have a good section on drift that is worth taking the time to read.

- Evidently: has 50+ tests that can check for things like drift and regression.

Deepchecks and Evidently can both test the data and the model, so although I include them at the start of the ML pipeline, they cover the process from validation through to production.
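To get a feel for what a drift test boils down to before adopting either library, here is a hand-rolled sketch. It does not use the Deepchecks or Evidently APIs. Instead it computes a Population Stability Index (PSI) over a single, deliberately crude text feature (words per document). The feature choice, the function names and the 0.2 threshold (a commonly quoted rule of thumb) are all assumptions you would tune for your own corpus.

```python
import math


def doc_lengths(docs):
    """A crude numeric feature for text: number of whitespace-separated words."""
    return [len(doc.split()) for doc in docs]


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of a numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid a zero bin width when all values match

    def shares(values):
        counts = [0] * bins
        for value in values:
            # Values outside the reference range are clamped to the edge bins.
            idx = min(max(int((value - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps stops empty bins from producing log(0) or division by zero.
        return [max(count / len(values), eps) for count in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def has_drifted(reference_docs, new_docs, threshold=0.2):
    """True when the new batch's length distribution has moved past the threshold."""
    return psi(doc_lengths(reference_docs), doc_lengths(new_docs)) > threshold
```

A pytest test would then assert `not has_drifted(reference_docs, staged_docs)` for each newly staged chunk. A real setup would look at richer properties than document length, which is where the libraries above earn their keep.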

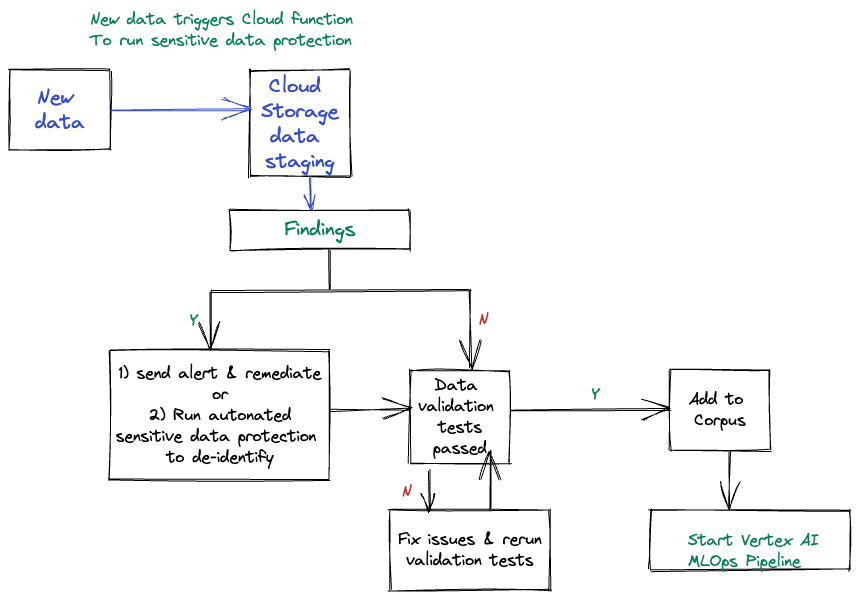

You could use successful test results (the updated data corpus contains no PII or sensitive data, and it passes the data validation checks) as the trigger for a Cloud Function that starts the ML pipeline on Vertex AI.

Diagram 3 illustrates this process.

Diagram 3 : Data validation testing flow
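The gating step in this flow could be sketched roughly as follows, assuming the `google-cloud-aiplatform` SDK for submitting the pipeline. The shape of the `report` dictionary, the function names and the display name are hypothetical, made up for this example; only `aiplatform.init`, `aiplatform.PipelineJob` and `submit()` come from the SDK.

```python
def data_gate_passed(dlp_findings, validation_results):
    """True only if there are no DLP findings and every validation check passed."""
    # An empty validation report counts as a failure rather than a pass.
    return (
        not dlp_findings
        and bool(validation_results)
        and all(validation_results.values())
    )


def launch_vertex_pipeline(project, region, template_path, pipeline_root):
    """Submit a compiled pipeline to Vertex AI (needs google-cloud-aiplatform)."""
    from google.cloud import aiplatform  # lazy import keeps the gate logic testable

    aiplatform.init(project=project, location=region)
    job = aiplatform.PipelineJob(
        display_name="llm-training-pipeline",  # hypothetical name
        template_path=template_path,
        pipeline_root=pipeline_root,
    )
    job.submit()
    return job


def on_validation_complete(report, launch=launch_vertex_pipeline):
    """Entry point a Cloud Function could call once the data tests have run."""
    if not data_gate_passed(report["dlp_findings"], report["validation_results"]):
        return "blocked"
    launch(**report["pipeline"])
    return "triggered"
```

Keeping the decision (`data_gate_passed`) separate from the side effect (`launch_vertex_pipeline`) means the gate itself can be unit tested without touching Vertex AI.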


Data leakage

Data leakage can occur at various points in the pipeline (hence why there is no dedicated box in diagram 1).

Testing for data leakage means testing the data in the corpus used for training, as discussed above, and also testing for the vulnerabilities that lead to leakage. Your automated tests should therefore cover the following:

- Authentication & authorization - This is to check that only authorized accounts have access to the resources that are used as part of the Vertex AI pipeline in each environment (dev, test, stage and production).

- Validating that inputs are limited in the capacity to generate incorrect and harmful outputs

- Validating that sensitive data cannot be exposed

Use the Python Client for Cloud Asset Inventory API to call the analyzeIamPolicy method and check that only authorized users have access to the resources in your Vertex AI pipeline. Here’s a snippet to help (just don’t forget to enable the API in your project!).
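A minimal sketch of that check, assuming the `google-cloud-asset` client library and an allow-list that you maintain yourself. The member names and function names below are hypothetical; the request and response fields follow the analyzeIamPolicy reference, but do verify them against the client version you install.

```python
# Hypothetical allow-list; replace with the accounts that should have access.
ALLOWED_MEMBERS = {
    "serviceAccount:pipeline-runner@my-project.iam.gserviceaccount.com",
    "group:ml-engineers@example.com",
}


def fetch_bindings(scope, full_resource_name):
    """Return (role, members) pairs that grant access to one resource.

    scope is e.g. "projects/my-project"; full_resource_name is e.g.
    "//storage.googleapis.com/my-training-bucket" (both hypothetical).
    """
    from google.cloud import asset_v1  # lazy import; needs google-cloud-asset

    client = asset_v1.AssetServiceClient()
    response = client.analyze_iam_policy(
        request={
            "analysis_query": {
                "scope": scope,
                "resource_selector": {"full_resource_name": full_resource_name},
            }
        }
    )
    return [
        (result.iam_binding.role, list(result.iam_binding.members))
        for result in response.main_analysis.analysis_results
    ]


def unauthorized_members(bindings, allowed=ALLOWED_MEMBERS):
    """Map each role to the members holding it that are not on the allow-list."""
    offenders = {}
    for role, members in bindings:
        extra = sorted(set(members) - set(allowed))
        if extra:
            offenders.setdefault(role, []).extend(extra)
    return offenders
```

A pytest test can then assert that `unauthorized_members(fetch_bindings(scope, resource))` is an empty dict for each resource in each environment.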

Pipeline resources

Checking the security of your infrastructure is important, as many mitigations rely on securing the pipeline. You will need to create and run tests to validate the controls around the resources that make up your Vertex AI pipeline. These are not specific to developing with LLMs.

If you are not already working with a security admin to help implement the monitoring and testing of your security environment, then you should start. They will help you leverage the suite of security tools available on Google Cloud.

Security Health Analytics is a managed vulnerability assessment service that can automatically detect common vulnerabilities and misconfigurations across a set of specified Google Cloud services. You can send findings to a Pub/Sub subscription and have Cloud Functions report a failure if any findings are detected.

Before I get pulled up re Security Health Analytics being a monitoring service: yes it is, but that doesn’t stop you using it as part of your tests.
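A rough sketch of the Cloud Function side, assuming a Pub/Sub-triggered function that receives the base64-encoded notification in `event["data"]`. The field names (`finding`, `state`, `category`, `resourceName`) are my reading of the Security Command Center notification format, so check them against a real message before relying on this.

```python
import base64
import json


def parse_finding(event):
    """Decode a Pub/Sub event and return the embedded finding (or an empty dict)."""
    payload = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    return payload.get("finding", {})


def is_active(finding):
    """Only findings that are still open should fail the check."""
    return finding.get("state") == "ACTIVE"


def handle_scc_notification(event, context=None):
    """Pub/Sub-triggered entry point: raise so the failure is visible to the pipeline."""
    finding = parse_finding(event)
    if is_active(finding):
        raise RuntimeError(
            f"Security finding {finding.get('category')} "
            f"on {finding.get('resourceName')}"
        )
    return "ok"
```

Raising an exception is just one way to report a failure. You could equally write a status record that a later pipeline step or test reads.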

Model exfiltration & poisoning

Model exfiltration and model poisoning can occur at various points in the pipeline. The tests discussed under “Pipeline resources” and “Data leakage” both apply here.

Model serving/ text generation

As you get towards the end of the pipeline you need to employ more esoteric testing methods. I discussed red teaming in https://grumpygrace.dev/posts/top-10-sec-llm/ as a way to help mitigate this issue. With an LLM scanner like garak you can use its auto red-team feature to automatically scan the model or chatbot for model-specific security & privacy issues such as hallucination, data leakage, prompt injection, misinformation and toxicity generation. The source is available on GitHub (leondz/garak: LLM vulnerability scanner), as you may need to do some development work, such as writing a plugin, to get it to work with your models. You will need to containerize garak to integrate it directly into your Vertex AI pipeline (hint: moving from conda to a container is well documented).
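One way to turn a garak run into a pass/fail test is to build the command line and then parse the JSONL report it writes. This is only a sketch: the `--model_type`, `--model_name` and `--probes` flags are the ones shown in garak’s README, but the report field names used here (`entry_type`, `probe`, `detector`, `passed`, `total`) are my assumption about the report layout and should be checked against the garak version you run.

```python
import json


def garak_command(model_type, model_name, probes):
    """Build the argument list for a garak scan (run it with subprocess.run)."""
    return [
        "python", "-m", "garak",
        "--model_type", model_type,
        "--model_name", model_name,
        "--probes", ",".join(probes),
    ]


def failed_evals(report_lines):
    """Return (probe, detector, passed, total) for every eval that did not fully pass.

    report_lines is an iterable of JSONL strings, e.g. an open report file.
    """
    failures = []
    for line in report_lines:
        line = line.strip()
        if not line:
            continue
        entry = json.loads(line)
        if entry.get("entry_type") != "eval":
            continue  # skip config, attempt and other non-summary rows
        passed, total = entry.get("passed", 0), entry.get("total", 0)
        if passed < total:
            failures.append((entry.get("probe"), entry.get("detector"), passed, total))
    return failures
```

A pytest test could then open the report and assert that `failed_evals(report_file)` is empty, or compare the failure rate against a threshold you are prepared to accept while humans review the rest.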

I found the following repos useful so figure you might too.