Forensics

So let’s assume you have put into practice all the guidance for protecting your cloud compute resources: defence in depth, mitigations against ransomware and unauthorised crypto mining, and the myriad other best practices provided by governments, NIST, and your cloud provider of choice.

You’ve done everything you can and you have still been compromised. As I have said on numerous occasions, a compromise may still happen, so you need to be prepared for it: have not only recovery plans in place but also a robust incident response plan.

Part of that incident response is how you will approach forensics. Forensics, in the way I use the term in this post, is the ability to provide evidence of what has occurred without affecting that evidence. Compromised evidence is pretty much no evidence at all.

The NIST Cloud Computing Forensic Science Challenges white paper describes the following eight steps or attributes for digital forensics:

- Search authority. Legal authority is required to conduct a search and/or seizure of data.

- Chain of custody. In legal contexts, chronological documentation of access and handling of evidentiary items is required to avoid allegations of evidence tampering or misconduct.

- Imaging/hashing function. When items containing potential digital evidence are found, each should be carefully duplicated and then hashed to validate the integrity of the copy.

- Validated tools. When possible, tools used for forensics should be validated to ensure reliability and correctness.

- Analysis. Forensic analysis is the execution of investigative and analytical techniques to examine, analyze, and interpret the evidentiary artifacts retrieved.

- Repeatability and reproducibility (quality assurance). The procedures and conclusions of forensic analysis should be repeatable and reproducible by the same or other forensic analysts [6].

- Reporting. The forensic analyst must document his or her analytical procedure and conclusions for use by others.

- Presentation. In most cases, the forensic analyst will present his or her findings and conclusions to a court or other audience.
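To make the imaging/hashing and chain-of-custody attributes a little more concrete, here is a minimal Python sketch using only the standard library. It hashes a duplicated image in chunks, checks the copy against the original, and builds one custody record. The record layout, the file names and the handler address are all hypothetical, chosen only for illustration; a real investigation would follow whatever format your forensic partner or legal team requires.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large disk images need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_matches_original(original_path, copy_path):
    """A duplicate is only useful as evidence if its hash matches the original."""
    return sha256_of_file(original_path) == sha256_of_file(copy_path)


def custody_entry(evidence_path, handler, action):
    """Build one chain-of-custody record (hypothetical schema, not a standard)."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "evidence": str(evidence_path),
        "sha256": sha256_of_file(evidence_path),
        "handler": handler,
        "action": action,
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        # Stand-in files; in practice these would be exported disk images.
        original = Path(workdir) / "disk.img"
        duplicate = Path(workdir) / "disk-copy.img"
        original.write_bytes(b"stand-in for a disk image")
        duplicate.write_bytes(original.read_bytes())

        entry = custody_entry(duplicate, "analyst@example.com", "imaged")
        print(json.dumps(entry, indent=2))
        print("copy verified:", copy_matches_original(original, duplicate))
```

Appending one such record every time the evidence is accessed or moved gives you the chronological trail that the chain-of-custody attribute asks for.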

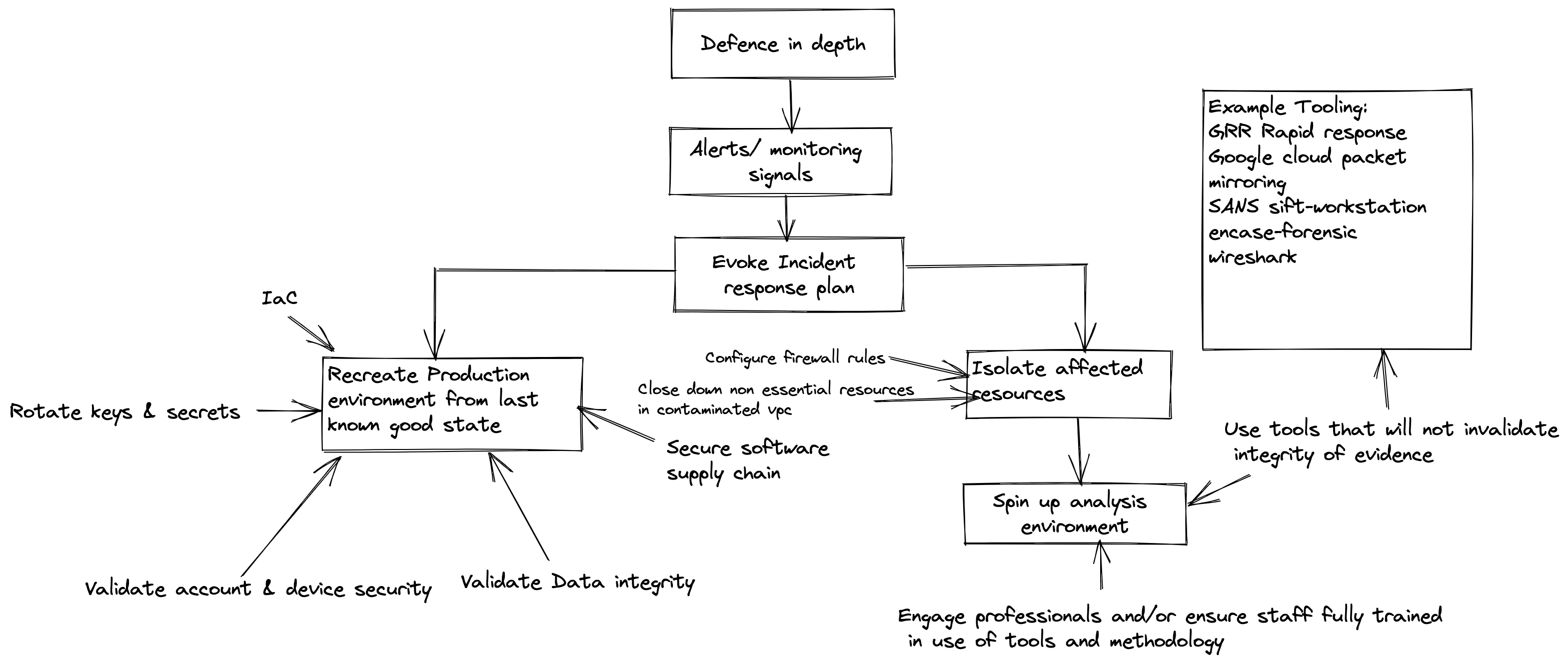

When you invoke an incident response that requires forensic evidence, I see essentially four steps for meeting the attributes above:

- Isolate

- Engage professionals

- Collect

- Analyse

I’m going to walk through these stages and, as you would expect from me by now, I will use Google Cloud to illustrate where I need to.

All the steps discussed should be undertaken in parallel. The schematic below is a sketch of how what I’ll be talking about in this post fits together:

I am going to assume you have put defences in depth in place and followed best practices.

Isolate

This stage requires you to isolate your compromised environment from the rest of your production environment. Among other reasons, you need to isolate in order to:

- Provide a relatively clean production environment, without the compromised resources, so that you can get your resources back up and running

- Prevent recontamination

- Provide a source (contaminated) environment that can be used for forensic analysis, with uncompromised evidence trails

A compromise will likely be due to one of the exploited vulnerabilities listed below, which were detailed in Google Cloud’s November Threat Horizons report.

The table below is taken from the report and lists the exploited vulnerabilities in Cloud instances.

When implementing your isolation strategy, your objective is to preserve the compromised resource as well as you can while bringing your production resources back online in an uncompromised state.

You may want to keep the compromised compute resources “live” so that memory can be analysed. That means your first step is not to shut them down and take a snapshot, but to use firewall rules to lock down traffic to and from the infected compute resources.

Meanwhile, you should be ensuring that you are mitigating the potential vulnerabilities. We’ll come back to that dodgy compute instance in a bit.

- Compromised accounts - Do any of your alerts or dashboards indicate that you have exposed service account details? Google Cloud SCC monitors whether service accounts have downloadable keys and if you use GitHub for example …..

- Compromised access to authorised devices - I’m assuming you implement zero trust

- Have you been able to confirm that the devices your admins and developers use are not compromised and are being used only by authorised personnel?

- Can you confirm that your admins and developers have not had their accounts compromised and that MFA is implemented?

- Create a new production environment (clean folders and projects) using IaC to recreate your cloud resources.

- If you aren’t using GitOps and IaC techniques as yet, all is not lost. This post is still for you! I am aware that GitOps and IaC are a nirvana yet to be reached by many, for all sorts of valid reasons. This tweet encapsulates the situation well.

- However, if you’re using the cloud you can take advantage of solutions such as Terraformer to create Terraform files based on your existing cloud infrastructure. If you are using Google Cloud you can use its declarative export for Terraform as an alternative to Terraformer. It’s worth having your ops teams learn how to use Terraform and regularly practise bringing up replicated infrastructure using the Terraform files created.

- Ensure that your firewall rules and policies are defined to limit exposure from overexposed network paths and excessive permissions

- Ensure that your software supply chain is as secure as it can be

- Are new instances being created from known good sources?

- Are GKE clusters being created following hardening guides or using Autopilot?

- Are all required patches being applied to the new instances?

- Are new service accounts being used with only the permissions they need?

- Are secrets rotated for the recreated production environment?

- Has data been restored to a known good state?

Coming back to isolating your compromised compute resources (this would be happening at the same time as you recreate your production environment):

Regardless of whether live forensics is required or not, you must isolate the suspected contaminated compute resources from your production environment.

The most direct and effective way to isolate the contaminated compute resources is to create very restrictive firewall rules. These rules can use the service account of the infected compute resources, or network tags attached to the contaminated resources, as the targets of the rule. The firewall rules will typically allow traffic only to and from a target analysis VPC (see Collect and Analyse below).
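As a rough illustration of what such restrictive rules could look like, the Python sketch below builds four rule bodies as plain dictionaries: deny all ingress and egress for instances carrying a quarantine tag, plus higher-precedence allow rules for the analysis network. The field names follow my understanding of the Compute Engine firewall REST resource and should be checked against the current API reference before use. The rule names, the `quarantine` tag, the project path and the CIDR range are all assumptions made up for this example, and the sketch only builds the payloads; it does not call any API.

```python
import json


def isolation_rules(network, target_tag, analysis_cidr):
    """Return firewall rule bodies that quarantine instances tagged target_tag.

    In Google Cloud a lower priority number wins, so the allow rules (50)
    take precedence over the blanket deny rules (100).
    """
    def rule(name, direction, priority, action, ranges_key, ranges):
        return {
            "name": name,
            "network": network,
            "direction": direction,
            "priority": priority,
            "targetTags": [target_tag],
            action: [{"IPProtocol": "all"}],  # "denied" or "allowed"
            ranges_key: ranges,
            "logConfig": {"enable": True},  # keep a log trail of blocked traffic
        }

    everywhere = ["0.0.0.0/0"]
    return [
        rule("quarantine-deny-ingress", "INGRESS", 100, "denied",
             "sourceRanges", everywhere),
        rule("quarantine-deny-egress", "EGRESS", 100, "denied",
             "destinationRanges", everywhere),
        rule("quarantine-allow-forensics-in", "INGRESS", 50, "allowed",
             "sourceRanges", [analysis_cidr]),
        rule("quarantine-allow-forensics-out", "EGRESS", 50, "allowed",
             "destinationRanges", [analysis_cidr]),
    ]


if __name__ == "__main__":
    # Hypothetical project, network and analysis subnet range.
    rules = isolation_rules(
        network="projects/example-project/global/networks/prod-vpc",
        target_tag="quarantine",
        analysis_cidr="10.200.0.0/24",
    )
    print(json.dumps(rules, indent=2))
```

Targeting by service account instead of tag would mean swapping `targetTags` for `targetServiceAccounts`; a single rule cannot mix the two.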

If you need to undertake live analysis, so that you can examine the contents of the contaminated compute resources in real time, you need to keep them up and running.

You may be able to suspend and resume an instance as a strategy, as this preserves the guest OS memory, device state, and application state.

If it’s determined that you don’t need to undertake live analysis, you can create a disk snapshot of the instance or create a machine image, then shut the contaminated instance down and recreate it in an isolated environment for further analysis.

Make sure you have a strategy for the use of snapshots and machine images, which should have been determined as part of your DR planning.

If you decide to use snapshots, you should follow the best practices for persistent disk snapshots.

If you are running workloads in GKE then, as part of your DR strategy, use Backup for GKE to back up Kubernetes resources and underlying persistent volumes. You can restore the backups into a separate cluster.

If you have engaged a professional firm to help you, they will advise on whether live analysis is required. For example, they may want to try to extract details about symmetric encryption keys used in a ransomware attack, which requires live analysis.

Engage professionals

Arguably this is step 0.

I can’t stress this enough: you should call in professionals dedicated to dealing with cyber threats. If all you have is your monitoring signals, an isolated environment and, more than likely, a few folks who do not deal with cyber threats on a daily basis beyond maybe instigating your recovery mechanisms, it’s unlikely that you will have a great outcome in following up.

I am not saying you shouldn’t have some forensic capability yourself. It’s just that most companies should have a cyber threat intelligence company engaged to help them collate evidence.

It’s insurance: most of the time you hope you won’t need it, but when you do you need to be able to enact it ASAP.

Your team already has, or should have, the ability to interpret the signals that you collect from monitoring and alerting, so you will have a baseline capability. Indeed, these baseline signals are how you realised you were compromised, or that you are the victim of ransomware.

This aside, you will have to undertake steps 3 and 4. Your engaged cyber threat intelligence company may provide you with guidance on what you should or should not do, or they may provide resources to help with the end-to-end process.

Collect and Analyse

Steps 3 and 4 are closely related, so I’ll discuss them together.

You would examine the signals you have obtained from your:

- Logging and monitoring; in Google Cloud: Cloud Monitoring and Cloud Logging

- Threat detection tooling; in Google Cloud: Security Command Center

- Third-party SIEMs, if you have any
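One simple way to start working with those signals is to turn exported audit log entries into a per-account timeline. The sketch below assumes entries have already been exported as JSON objects shaped like Cloud Audit Logs entries (`timestamp`, `protoPayload.authenticationInfo.principalEmail`, `protoPayload.methodName`, `protoPayload.resourceName`) and that timestamps are in UTC with a trailing `Z`. The sample entries and the service account address are invented for illustration.

```python
import re
from datetime import datetime, timezone

# Assumption: UTC timestamps ending in "Z", with optional fractional seconds.
_TIMESTAMP = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?Z$")


def parse_timestamp(value):
    """Parse a timestamp, truncating any fraction to microseconds."""
    match = _TIMESTAMP.match(value)
    if match is None:
        raise ValueError(f"unsupported timestamp format: {value!r}")
    whole = datetime.strptime(match.group(1), "%Y-%m-%dT%H:%M:%S")
    micros = int((match.group(2) or "")[:6].ljust(6, "0"))
    return whole.replace(microsecond=micros, tzinfo=timezone.utc)


def timeline_for_principal(entries, principal_email):
    """Return the actions performed by one principal, oldest first."""
    events = []
    for entry in entries:
        payload = entry.get("protoPayload", {})
        actor = payload.get("authenticationInfo", {}).get("principalEmail")
        if actor == principal_email:
            events.append({
                "time": parse_timestamp(entry["timestamp"]),
                "method": payload.get("methodName"),
                "resource": payload.get("resourceName"),
            })
    return sorted(events, key=lambda event: event["time"])


if __name__ == "__main__":
    suspect = "build-sa@example-project.iam.gserviceaccount.com"
    # Made-up sample entries standing in for an export from your log sink.
    sample = [
        {"timestamp": "2024-01-02T03:10:00.5Z",
         "protoPayload": {"authenticationInfo": {"principalEmail": suspect},
                          "methodName": "v1.compute.instances.insert",
                          "resourceName": "projects/example-project/zones/us-central1-a/instances/miner-1"}},
        {"timestamp": "2024-01-02T03:04:05Z",
         "protoPayload": {"authenticationInfo": {"principalEmail": suspect},
                          "methodName": "google.iam.admin.v1.CreateServiceAccountKey",
                          "resourceName": "projects/-/serviceAccounts/build-sa"}},
    ]
    for event in timeline_for_principal(sample, suspect):
        print(event["time"].isoformat(), event["method"], event["resource"])
```

Timestamps are parsed rather than compared as strings because entries with and without fractional seconds do not sort correctly as text.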

You should have the ability to create a forensics analysis environment. On Google Cloud this will be a project that has the tools and data collection resources on an instance in a dedicated VPC network. The principle of least privilege must be followed when granting access, with dedicated accounts used to access the environment.

The forensics analysis VPC is peered with the source network that contains the contaminated instance.

This post, How to use live forensics to analyze a cyberattack | Google Cloud Blog, has some nice diagrams and a nice walkthrough showing what this configuration could look like.

To create the forensic analysis environment you can use Terraform (yes, I know, IaC again) to create an on-demand VPC that contains an instance with forensic analysis tools installed, such as:

google/grr - An incident response framework for remote live forensics. You need to install an agent on the source contaminated instance, which the server in the analysis instance can communicate with.

OpenText EnCase Forensic Software - Provides an integrated workflow to collect forensic data while ensuring evidence integrity.

The Sleuth Kit - A C library and set of command line tools that can be used to investigate disk images.

Wireshark - A network analyser. I’ve been using this on and off for years, from when it was called Ethereal.

The SIFT Workstation | SANS Institute - A downloadable, preconfigured VM that has a collection of open source forensic tooling (including The Sleuth Kit mentioned above).

For GKE workloads, Sysdig is a good option and you should definitely read Security controls and forensic analysis for GKE apps.

I’ve just given a sample of open source tooling; you can also use third-party options, which come with support.

Once you have your analysis environment up, you can capture live network traffic using Packet Mirroring. Packet Mirroring copies traffic from mirrored sources and sends it to a collector destination. To configure Packet Mirroring, you create a packet mirroring policy that specifies the source and destination.

The target forensics environment is configured to capture the mirrored packets, and you can use tools like Wireshark to examine the mirrored network traffic.
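To show the shape of a packet mirroring policy, the sketch below builds a policy body as a Python dictionary: a mirrored source selected by network tag and a collector destination. The field names (`network.url`, `collectorIlb.url`, `mirroredResources.tags`, `filter.direction`, `enable`) are my recollection of the Compute Engine packet mirroring REST resource and are an assumption to verify against the API reference. The policy name, tag and resource URLs are hypothetical, and nothing here calls the API.

```python
import json


def packet_mirroring_policy(name, network_url, collector_rule_url, mirrored_tag):
    """Build a policy body that mirrors traffic of tagged instances to a collector.

    Assumption: the collector is the forwarding rule of an internal load
    balancer that fronts the capture instance in the analysis environment.
    """
    return {
        "name": name,
        "network": {"url": network_url},               # network of the mirrored sources
        "collectorIlb": {"url": collector_rule_url},   # collector destination
        "mirroredResources": {"tags": [mirrored_tag]}, # which instances to mirror
        "filter": {"direction": "BOTH"},               # ingress and egress traffic
        "enable": "TRUE",
    }


if __name__ == "__main__":
    # Hypothetical resource URLs for illustration only.
    policy = packet_mirroring_policy(
        name="quarantine-mirror",
        network_url="projects/example-project/global/networks/prod-vpc",
        collector_rule_url=(
            "projects/example-forensics/regions/us-central1/"
            "forwardingRules/collector-rule"
        ),
        mirrored_tag="quarantine",
    )
    print(json.dumps(policy, indent=2))
```

Selecting the mirrored sources by the same tag used for isolation keeps the capture scoped to the suspect instances rather than the whole subnet.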