Large Language Models for IT folks who are not ML experts

Understanding & supporting large language model based applications - a guide for IT professionals who are not machine learning (ML) experts

Prompts, original words & editing: by Grace Mollison

Text generation by ChatGPT

Most Diagrams by Grace Mollison, other diagrams liberally borrowed & acknowledged

Proofread by him indoors (albeit reluctantly)

Introduction

Like many in my bubble, I’ve been playing around with the large language model (LLM) based chatbots. As an IT professional who is not an ML expert, I was thinking: wouldn’t it be nice to have a blog post aimed at the folks who, although not developing LLMs, would still need to provide the infrastructure, implement security controls and so on to allow them to be deployed as part of a solution?

My second thought was to have fun writing such a post, use an LLM chatbot to produce most of the words, and see how that works.

Research in this area has been going on for years. It’s not an overnight thing, but now that it’s been commoditized, the general public can finally have fun with it. I’m not going to spend time correlating the trough of disillusionment with crypto leaving a gap to be filled by the next hype cycle, but yes, I admit I have thought that the timing of the explosion in interest was coincidental. Definitely a chat worth having over a cuppa.

I’ve learned a lot in this area to ensure that I had enough knowledge to fact check what was generated before I embarked on this exercise. You just can’t blindly trust a Generative AI (GAI) to generate reliable content, as the output, while often brimming with confidence, is sometimes short on fact (well, at the time of generating this blog post that was the case anyway!). During this exercise I have tried to provide reasonable prompts and to fact check the output.

I have verified and edited the output from ChatGPT as I saw fit. I have experienced LLMs accepting unreliable sources and generating truly baffling responses, so I have an inherent mistrust in the veracity of their output, while admiring the speed at which it’s produced (https://en.wikipedia.org/wiki/Never_Mind_the_Quality,_Feel_the_Width).

Most of the effort in generating this blog post was in validating the content with trusted sources (via my favoured search engine, obviously), adding my own original words, editing and creating the diagrams!

Where ChatGPT’s attempts didn’t give me quite what I wanted, I edited the responses or rephrased the prompts slightly to get a section more akin to what I had in mind.

That’s my intro. Here’s an edited version of what ChatGPT had to say when I asked it to provide me with an intro. I have thoughts about this but that’s for another day:

In this post we will cover the basics of language models and natural language processing, the working mechanisms of large language models, the applications of these models, the techniques for evaluating large language models, and the best practices for building these models.

Our target audience is IT professionals who want to learn about large language models but do not have a background in ML or NLP. We assume that readers have a basic understanding of programming concepts, but we will explain technical terms in plain language whenever possible.

Introducing large language models

Grace: This section is a 101 on the fundamentals of LLMs and is actually a combination of text generated by a minimum of 5 prompts with a few additions from me.

ChatGPT seemed to assume that people understood the jargon so I prompted it to create a glossary of terms using this prompt:

Provide a glossary of LLM terms to include in the blogpost such as tokens, prompts, embeddings etc, provide examples of how to use each of the terms in the glossary

I have edited the generated glossary, adding definitions of my own along the way. For the purposes of getting the concepts across to folks who just want to understand the basics, I felt it did a good enough job. For the purists among you, though, I have included some references at the end of this section if you want a deeper dive.

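- Tokens: Tokens are the smallest unit of text in natural language processing. For example, in the sentence “I love to eat pizza,” the tokens would be “I,” “love,” “to,” “eat,” and “pizza.” In practice, LLM tokenizers often split longer or rarer words into smaller subword pieces, so one word can be several tokens.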

- Prompts: Prompts are short text strings used to guide the output of a language model. For example, a prompt for a chatbot might be “How can I help you today?”

- Embeddings: Embeddings are a way to represent words or phrases as vectors in a high-dimensional space. For example, the word “cat” might be represented as a vector with values like [0.3, 0.1, -0.2, …].

- Fine-tuning: A process of adjusting the weights of a pre-trained model on a specific task or dataset to improve its performance on that task. Fine-tuning is commonly used to adapt large language models to specific use cases or domains.

- Backpropagation: Backpropagation is an algorithm used to train neural networks by adjusting the weights of the network based on the error between the predicted output and the actual output.

- Stochastic gradient descent: Stochastic gradient descent is an optimization algorithm used to minimise the error between the predicted output and the actual output by adjusting the weights of the neural network.

- Natural Language Processing (NLP): NLP is a field of artificial intelligence that focuses on the interaction between computers and human language. It involves tasks such as text classification, sentiment analysis, and language translation.

- Attention: Attention is a mechanism used in neural networks to focus on specific parts of the input during processing. This allows the model to give more weight to important information and ignore irrelevant information.

- Transformer: A transformer is a type of neural network architecture used for natural language processing tasks. It uses attention mechanisms to process input and has been used in the development of large language models like GPT-3. Transformer explainer is an excellent way to understand how transformers work.

- Perplexity: Perplexity is a measure of how well a language model can predict a sequence of words. A lower perplexity score indicates better performance. It is often used as an evaluation metric for language models.

- Hyperparameter: A parameter that is assigned a value prior to training as part of the configuration settings, such as the learning rate or the number of layers.

- Temperature: Temperature is a hyperparameter that controls the “creativity” or “randomness” of the generated text. It’s used to adjust the probabilities of the predicted words. A higher temperature value will result in more diverse and unexpected predictions, while a lower temperature value will lead to more conservative and predictable outputs. For example, if the model is generating text and the predicted probabilities for the next word are: “apple” (0.5), “orange” (0.3), “banana” (0.2), a high temperature value would increase the likelihood of selecting one of the less probable options, such as “banana,” while a low temperature value would make it more likely to choose the most probable option, “apple.”
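To make the temperature entry above a little more concrete, here is a minimal Python sketch using the same “apple”, “orange”, “banana” probabilities. The function name `apply_temperature` is made up for illustration, and real models apply temperature to raw scores (logits) before they become probabilities. Raising each probability to the power 1/T and re-normalising gives the same result, which is the shortcut assumed here.

```python
def apply_temperature(probs, temperature):
    """Rescale a probability distribution.

    temperature > 1 flattens the distribution (more surprising picks),
    temperature < 1 sharpens it (the favourite wins more often).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # p ** (1 / T) is equivalent to dividing the logits by T before softmax.
    scaled = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(scaled.values())
    return {word: value / total for word, value in scaled.items()}


next_word_probs = {"apple": 0.5, "orange": 0.3, "banana": 0.2}

low = apply_temperature(next_word_probs, 0.5)   # conservative
high = apply_temperature(next_word_probs, 2.0)  # adventurous

print("low temperature: ", low)
print("high temperature:", high)
```

With the low temperature, “apple” ends up with a larger share than its original 0.5; with the high temperature, “banana” ends up with a larger share than its original 0.2.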

A primer on Neural networks

Neural networks are a type of machine learning algorithm that are modelled after the structure and function of the human brain. They are used in a variety of applications, including natural language processing, image recognition, and speech recognition. Neural networks have a rich history, with the first artificial neuron being created in the 1940s and the first neural network being developed in the 1950s.

Structure of Neural Networks

The basic building blocks of neural networks are neurons, layers, and weights. A neuron is the basic computational unit of a neural network and is modelled after the neurons in the brain. Neurons receive input from other neurons, process that input, and output a signal to other neurons. Layers are collections of neurons that process input data in parallel. There are several types of neural network architectures, including feedforward, recurrent, and convolutional.

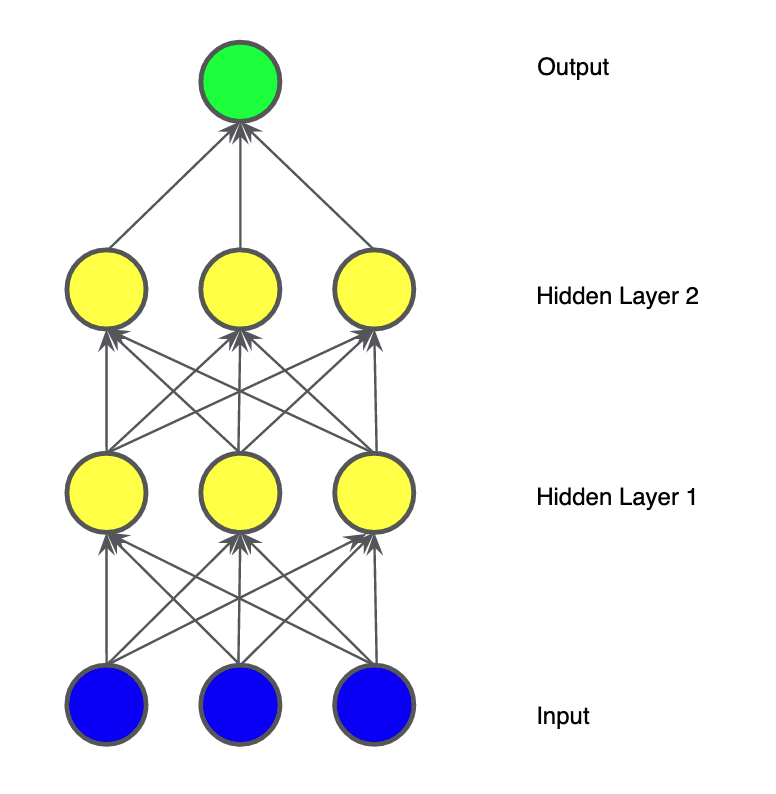

The diagram above is a simple way to visualise a simple neural network as a graph.

Diagram liberally borrowed from Neural Networks: Structure | Machine Learning | Google Developers

Neural networks work by processing input data through a series of layers, with each layer making increasingly complex transformations to the input data. This process is known as forward propagation. During training, the neural network adjusts its weights to minimise the difference between its predictions and the actual output. Loss functions measure that difference, an algorithm called backpropagation works out how much each weight contributed to it, and an optimization algorithm such as gradient descent uses that information to update the weights.
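As a rough illustration of forward propagation, the sketch below pushes two input values through a tiny network in plain Python. Everything here is an assumption made for the example: the weights and biases are made-up numbers, the sigmoid activation is just one common choice, and real frameworks do this with matrix operations rather than loops.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all see the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def forward(inputs, network):
    """Forward propagation: the output of each layer feeds the next one."""
    activations = inputs
    for weight_rows, biases in network:
        activations = layer(activations, weight_rows, biases)
    return activations


# Hypothetical network: 2 inputs -> hidden layer of 2 neurons -> 1 output.
network = [
    ([[0.5, -0.6], [0.1, 0.8]], [0.0, 0.1]),
    ([[1.2, -0.7]], [0.05]),
]

output = forward([1.0, 0.5], network)
print(output)
```

Training is the process of nudging those weight and bias numbers until the output is close to what you wanted.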

Training Neural Networks

To train a neural network, large amounts of labelled data are required. During training, the network adjusts its weights based on the difference between its predictions and the actual output. This process is repeated until the network’s predictions are sufficiently accurate. Gradient descent is the optimization algorithm used to train neural networks, which involves iteratively adjusting the weights to minimise the loss function. Regularisation techniques are used to prevent overfitting, which occurs when the network becomes too specialised to the training data.
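Gradient descent is easier to picture with a toy example. The sketch below is a deliberately tiny, hypothetical case: a “network” with a single weight learning that the output is twice the input. The data, learning rate and number of epochs are made up for illustration.

```python
def train(data, learning_rate=0.05, epochs=200):
    """Fit y = weight * x by gradient descent on the mean squared error."""
    weight = 0.0  # start with a guess
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to the weight.
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        # Step in the direction that reduces the loss.
        weight -= learning_rate * gradient
    return weight


def mean_squared_error(weight, data):
    """The loss function: average squared gap between prediction and label."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


# Labelled data: inputs paired with the expected outputs.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

learned_weight = train(data)
print(learned_weight, mean_squared_error(learned_weight, data))
```

The learned weight ends up very close to 2. A real network repeats the same idea across millions or billions of weights, with backpropagation supplying the gradient for each one.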

Neural networks are used in a variety of applications, including natural language processing, image recognition, speech recognition, and robotics. In natural language processing, neural networks are used for tasks such as language translation, sentiment analysis, and text generation. In image recognition, neural networks are used for tasks such as object detection and facial recognition.

Common Challenges with Neural Networks

There are several challenges associated with building and training neural networks, including vanishing and exploding gradients, overfitting, and the black box problem. Vanishing and exploding gradients occur when the gradient of the loss function becomes too small or too large, respectively. Overfitting occurs when the network becomes too specialised to the training data, resulting in poor performance on new data. The black box problem refers to the difficulty in understanding how a neural network arrives at its predictions.
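The vanishing gradient problem can be shown with a little arithmetic. The sketch below assumes a simplified chain of sigmoid neurons with all weights set to 1, so the only thing shrinking the gradient is the sigmoid's slope, which is never more than 0.25. It is a back-of-the-envelope illustration, not how a framework computes gradients.

```python
import math


def sigmoid_derivative(x):
    """Slope of the sigmoid at x; its largest value is 0.25, at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def gradient_scale(num_layers, pre_activation=0.0):
    """Factor by which a gradient shrinks when passed back through
    num_layers sigmoid layers (weights assumed to be 1)."""
    return sigmoid_derivative(pre_activation) ** num_layers


for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Even in this best case the signal reaching the early layers of a 10-layer chain is less than a millionth of its original size, which is why those layers learn so slowly.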

A primer on large language models

A language model is a type of AI model that assigns probabilities to sequences of words in a natural language. It learns the likelihood of a given sequence of words, allowing it to predict the next word or generate new text that is coherent and grammatically correct.
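To show what “assigning probabilities to sequences of words” means, here is a toy model that simply counts which word follows which (a bigram model). This is a much older and simpler technique than an LLM, swapped in here only because it fits in a few lines. The three-sentence corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows another and turn counts into probabilities."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev, counter in counts.items():
        total = sum(counter.values())
        model[prev] = {word: count / total for word, count in counter.items()}
    return model


def sequence_probability(model, sentence):
    """Multiply the probability of each word given the word before it."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    probability = 1.0
    for prev, nxt in zip(words, words[1:]):
        probability *= model.get(prev, {}).get(nxt, 0.0)
    return probability


corpus = ["I love to eat pizza", "I love to read", "I like to eat pasta"]
model = train_bigram_model(corpus)

print(model["to"])  # what tends to follow "to" in this corpus
print(sequence_probability(model, "I love to read"))
print(sequence_probability(model, "pizza love I"))
```

A sentence that follows the patterns in the corpus gets a non-zero probability, while a scrambled one gets zero. An LLM does the same job with a neural network and far more context than one previous word.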

Large language models are a type of neural language model that uses deep learning techniques to generate human-like language. They are called “large” because they use a massive number of parameters, ranging from tens of millions to billions, to represent the relationships between words in natural language.

Large language models such as OpenAI’s GPT-3 and Google’s BERT have demonstrated remarkable performance in various natural language processing tasks, including text classification, language translation, and question-answering. They have been trained on massive amounts of text data, including books, web pages, and social media posts, making them highly effective in understanding and generating natural language text.

Large language models work by analysing the relationships between words in a text corpus and learning to predict the likelihood of a given sequence of words. They use a neural network architecture that consists of multiple layers of neurons, each of which performs a specific function in the language generation process.

The network takes a sequence of input tokens, such as a sentence or a paragraph, and generates a sequence of output tokens, which can be a prediction of the next word or a generated sentence. The output tokens are generated by sampling from a probability distribution that is learned during the training process.
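The generate-one-token-at-a-time loop can be sketched as follows. The table of next-token probabilities is hand-written and entirely hypothetical; in a real LLM those numbers come out of the neural network and depend on all of the preceding tokens, not just the last one.

```python
import random

# Hypothetical, hand-written next-token probabilities standing in for a model.
NEXT_TOKEN_PROBS = {
    "<start>": {"grace": 1.0},
    "grace": {"likes": 1.0},
    "likes": {"to": 1.0},
    "to": {"eat": 0.2, "dance": 0.6, "read": 0.2},
    "eat": {"<end>": 1.0},
    "dance": {"<end>": 1.0},
    "read": {"<end>": 1.0},
}


def sample_next(token, rng):
    """Pick the next token at random, weighted by its probability."""
    distribution = NEXT_TOKEN_PROBS[token]
    tokens = list(distribution)
    weights = list(distribution.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(rng, max_tokens=10):
    """Keep sampling the next token until the end marker or the length limit."""
    token, output = "<start>", []
    for _ in range(max_tokens):
        token = sample_next(token, rng)
        if token == "<end>":
            break
        output.append(token)
    return output


sentence = generate(random.Random(0))
print(" ".join(sentence))
```

Because the final word is sampled rather than always taking the most likely option, running this with different random seeds can give different sentences, which is also why the same prompt can give different answers from a chatbot.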

Training large language models is a computationally intensive task that requires massive amounts of data and processing power. The training process typically involves several steps, including:

- Data Collection:

- Large language models are trained on massive amounts of text data to learn the patterns and relationships between words in natural language. This data is typically collected from various sources, such as the internet, books, and other text corpora. The text data is collected in raw form, which means that it is unprocessed and may contain noise, errors, and irrelevant content.

- To collect the data, researchers use web scraping techniques to extract text data from web pages or use pre-existing text corpora. The data is then filtered to remove any irrelevant content, such as advertisements, headers, and footers. This ensures that the data is of high quality and can be used for training the language model.

- Preprocessing:

- Once the text data has been collected, it needs to be preprocessed to transform it into a format that can be used for training. The preprocessing step involves several tasks, such as tokenization, normalisation, and filtering.

- Tokenization involves breaking the text data into individual words or subwords, which are known as tokens. This is important because language models operate at the token level and need to understand the relationships between different tokens.

- Normalisation involves converting the tokens to a standard format, such as lowercase, to reduce the complexity of the text data. Filtering involves removing any irrelevant tokens or words, such as stop words or rare words, that are not useful for the language model.

- Training:

- Training large language models is a computationally intensive task that requires a massive amount of processing power and time. The training process involves using deep learning techniques such as backpropagation and stochastic gradient descent to optimise the model’s parameters.

- During the training process, the model is fed with a sequence of input tokens, and the output tokens are predicted based on the relationships between the input tokens. The model’s parameters are updated using the backpropagation algorithm, which calculates the gradient of the loss function with respect to the model’s parameters.

- The training process is repeated several times using different batches of data, and the model’s performance is evaluated on a validation set. The model’s performance is improved by adjusting the hyperparameters, such as the learning rate and the number of layers.

- Fine-tuning:

- Large language models can also be fine-tuned on specific tasks or domains to improve their performance on those tasks. Fine-tuning involves taking a pre-trained language model and training it on a specific task, such as sentiment analysis or language translation.

- During the fine-tuning process, the model’s parameters are updated using a smaller dataset that is specific to the task. The model’s performance is evaluated on a validation set, and the hyperparameters are adjusted to improve the model’s performance.

- Fine-tuning is a powerful technique that allows researchers to adapt pre-trained language models to specific tasks, which can save time and resources compared to training a model from scratch.
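The preprocessing tasks described in the list above (tokenization, normalisation and filtering) can be sketched in a few lines of Python. This is a simplified illustration: the stop word list is a tiny made-up one, and the tokenizer splits on whole words, whereas production LLM tokenizers typically split text into subword pieces.

```python
import re

# Tiny illustrative stop word list; real lists are much longer.
STOP_WORDS = {"the", "a", "an", "and", "or", "to", "of"}


def tokenize(text):
    """Break raw text into word tokens, dropping punctuation."""
    return re.findall(r"[A-Za-z0-9']+", text)


def normalise(tokens):
    """Convert tokens to a standard form, here lowercase."""
    return [token.lower() for token in tokens]


def filter_tokens(tokens, stop_words=STOP_WORDS):
    """Remove tokens that are considered uninformative."""
    return [token for token in tokens if token not in stop_words]


def preprocess(text):
    """Run the three steps in order."""
    return filter_tokens(normalise(tokenize(text)))


tokens = preprocess("I love to eat pizza, and the pizza loves me!")
print(tokens)
```

The output of a step like this is what gets converted into numbers and fed to the model during training.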

References

For those of you wanting a more comprehensive explanation of the terms that will be scattered throughout this blogpost, there are Wikipedia articles you can look up, but I have included some references I enjoyed reading while fact checking & editing this blogpost.

Tokens

https://blog.quickchat.ai/post/tokens-entropy-question/

Embeddings

https://jalammar.github.io/illustrated-word2vec/

Word Embedding Visual Inspector

https://ronxin.github.io/wevi/

Prompts

https://txt.cohere.ai/how-to-train-your-pet-llm-prompt-engineering/

Attention & transformer

https://arxiv.org/pdf/1706.03762.pdf

https://ai.googleblog.com/2017/08/transformer-novel-neural-network.html

Backpropagation

https://builtin.com/machine-learning/backpropagation-neural-network

Perplexity

https://towardsdatascience.com/perplexity-in-language-models-87a196019a94

Fine tuning

https://metatext.io/blog/how-to-finetune-llm-hugging-face-transformers

Hyperparameter

https://cloud.google.com/ai-platform/training/docs/hyperparameter-tuning-overview

Transfer learning

https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html

And if you can carve the time out, there are some cool online training courses, such as that from Hugging Face.

Applications of Large language models

Grace: What you can do with large language models is only really starting to be explored, particularly after the excitement generated by ChatGPT, which caught the imagination of the public. More “intelligent” chatbots, tooling to help coders be more efficient such as GitHub Copilot, and creative uses such as what I did here with this blogpost are a small subset of the more common applications, and if you look around you’ll find plenty more.

This is a short section, but think of this like a Netflix series where each episode is as long as it needs to be!

When I asked ChatGPT (itself an application of an LLM) what the applications of large language models are, it said this:

Large language models have been used in a variety of applications, including language generation, question-answering systems, chatbots, sentiment analysis, machine translation, and text classification. These applications rely on the ability of the language model to understand the structure and meaning of natural language.

NLP Use Cases for Large Language Models

Large language models have been used in several NLP use cases, such as:

- Text Generation: Large language models can generate high-quality text that is indistinguishable from human-written text. This application has been used in content creation, story writing, and even poetry.

- Chatbots: Large language models have been used to build conversational agents or chatbots that can simulate human-like conversation. These chatbots can be used in customer support, personal assistants, or even in mental health care.

- Sentiment Analysis: Large language models can be used to classify the sentiment of a given text, such as positive, negative, or neutral. This application has been used in social media monitoring, customer feedback analysis, and brand reputation management.

- Machine Translation: Large language models have been used to improve the accuracy of machine translation systems. By leveraging the pre-trained language model, machine translation systems can better understand the meaning and context of the source and target language.

Examples of Applications of Large Language Models

- GPT-3 Language Model: GPT-3 is a large language model that has been used in several applications, such as language generation, question answering, and chatbots. The model has been trained on a massive amount of data and has been shown to generate high-quality text that is indistinguishable from human-written text.

Grace : The ASCII art generated by ChatGPT below is a high level illustration of what happens when you give ChatGPT a prompt

    Prompt
    +--------------------------------------------------+
    |           "What does Grace like to..."           |
    +---------------------+----------------------------+
                         / \
                        /   \
                       /     \
                      /       \
    Possible Next Words and Probabilities
    +-----------+   +-----------+   +-----------+
    |    eat    |   |   dance   |   |   read    |
    +-----------+   +-----------+   +-----------+
         0.2             0.6             0.2
          |               |               |
          |               |               |
          |               |               |
          V               V               V
    Output Response: "Grace likes to dance."

In the example illustrated in the diagram above, the context of the prompt (“What does Grace like to…”) influences the probabilities assigned to potential next words, with “dance” having the highest probability due to its association with activities that people might like to do. (Grace: Out of all those, though, read is actually what I’d like to do most, followed by eat. Just saying!)

- BERT Language Model: BERT is a large language model that has been used in several NLP tasks, such as sentiment analysis, text classification, and question-answering systems. The model has been pre-trained on a large corpus of text data and has shown to achieve state-of-the-art performance on several benchmark datasets.

- Language Translation: Large language models have been used to improve the accuracy of machine translation systems. By fine-tuning a pre-trained language model on a specific language pair, machine translation systems can better understand the meaning and context of the source and target language.

For a deeper dive read this

Developing with Large language models

Grace: I struggled with what to call this chapter but settled on developing with large language models & tuned my prompts accordingly. This is an intro to LLMs though, and this section is easily the basis of a fat book.

To develop a large language model, there are several steps that need to be taken.

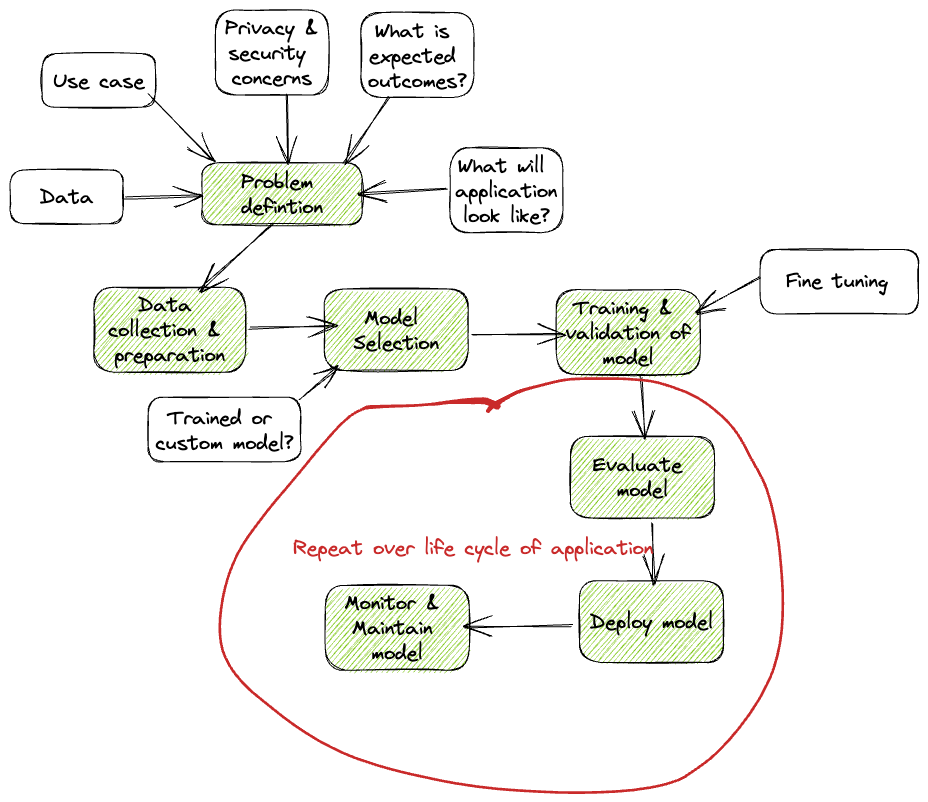

- Define the problem and data requirements: This involves understanding the use case, the data that will be used, and the expected outcomes. For example, does the use case require text generation, language translation, sentiment analysis, or question-answering?

- Data collection and preparation: Collect and prepare the data that will be used to train the model. This involves identifying the sources of data, cleaning and processing the data to remove noise and irrelevant content, and transforming the data into a format that can be used for training. Tasks such as tokenizing the data and encoding it in a way that can be input to the model occur at this stage. This process may also include data augmentation techniques such as adding noise, shuffling the data, or generating new samples.

- Model Selection: You can choose to use an existing pre-trained language model that has already been trained on massive amounts of text data, such as GPT-3, BERT, or RoBERTa. Alternatively, you can create a custom model using transfer learning techniques and train it on a specific domain or task. This decision will depend on your specific requirements, available resources, and the complexity of the problem you are trying to solve.

- When choosing between using a pre-trained model or creating a custom model, there are several factors to consider. Pre-trained models are often faster and more convenient to use, but may not be optimised for specific use cases. Custom models, on the other hand, require more time and resources to develop, but can be tailored to specific use cases and offer better performance.

- To identify an appropriate pre-trained model, one should consider the size and complexity of the dataset, the type of language task, and the required level of accuracy. Pre-trained models such as GPT-3 are generally suitable for large and complex datasets, while smaller pre-trained models may be more suitable for simpler tasks.

- To create a custom model, one should consider the model architecture, the size of the dataset, and the availability of computational resources. Custom models can be built using popular deep learning frameworks such as TensorFlow and PyTorch, and can be trained using cloud-based platforms such as Google Cloud and AWS.

- Training and validating the model: During training, the model learns to recognize patterns and relationships in the data by adjusting its internal parameters using techniques such as backpropagation and stochastic gradient descent. This step involves feeding the data into the model, adjusting the hyperparameters as necessary, and validating the model’s performance on a separate validation dataset.

- Fine-tune the model: Fine-tuning is sometimes called out as a separate step from training & validating, but I (Grace) feel it makes sense to just call it out under training & validation as it’s a technique. Once the model has been trained, you can fine-tune it on specific tasks or domains to improve its performance. Fine-tuning involves further training the model on a smaller set of data that is specific to the task or domain you are interested in. This process can help to optimise the model for the particular use case and improve its accuracy.

- Evaluate the model: To evaluate the model, you need to measure its performance on a set of validation or test data. This involves using metrics such as accuracy, precision, recall, or F1 score to assess how well the model is performing. If the performance is not satisfactory, you may need to go back and adjust the model architecture or training parameters.

- Deploy the model: Once the model has been trained and evaluated, you can deploy it in a production environment to start using it to solve real-world problems. This involves integrating the model into your existing infrastructure and setting up the necessary data pipelines and API endpoints.

- Monitoring and maintenance: Monitor the model’s performance over time, identify issues, and make necessary adjustments or updates to ensure it continues to function optimally.

Diagram: Development steps.

Evaluating large language models

Large language models have the potential to transform natural language processing and improve many real-world applications. However, in order to determine the effectiveness of a language model, it is important to evaluate it using appropriate metrics and techniques.

Evaluating the performance of a language model requires the use of appropriate metrics. There are several commonly used evaluation metrics for language models, including:

- Perplexity: a measure of how well the model predicts the next word in a sequence of words. The lower the perplexity, the better the model’s performance.

- Accuracy: a measure of how many predictions made by the model are correct.

- F1 Score: the harmonic mean of precision and recall, giving a single number that balances the two. It is commonly used for classification tasks.

These metrics can be used to evaluate the performance of language models in a variety of tasks, such as text classification, machine translation, and question-answering.
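The three metrics above are simple enough to compute by hand, which can help demystify them. The sketch below uses plain Python and made-up predictions; in practice you would normally reach for a library implementation. The function names are chosen for this example only.

```python
import math


def perplexity(token_probs):
    """Exponential of the average negative log-probability the model
    assigned to each token that actually occurred. Lower is better."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def f1_score(predicted, actual, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(predicted, actual))
    tp = sum(p == positive and a == positive for p, a in pairs)
    fp = sum(p == positive and a != positive for p, a in pairs)
    fn = sum(p != positive and a == positive for p, a in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up labels, e.g. 1 = positive sentiment, 0 = negative sentiment.
actual = [1, 0, 1, 1, 0, 1]
predicted = [1, 0, 0, 1, 1, 1]

print(accuracy(predicted, actual))
print(f1_score(predicted, actual))
# A model that gives every correct token a probability of 0.25 is, in effect,
# choosing between 4 equally likely options at each step.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

One way to read perplexity is as the number of equally likely choices the model is effectively torn between at each step, which is why lower is better.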

There are several techniques that can be used to evaluate the performance of a language model, including:

- Holdout validation: This technique involves splitting the dataset into two parts: a training set and a testing set. The model is trained on the training set and evaluated on the testing set.

- Cross-validation: Cross-validation is a technique where the dataset is split into multiple folds (or subsets). One of the folds is used for testing and the others are used for training. The process is repeated, changing which fold is used as the validation set for each repetition.

- Leave-one-out validation: This technique involves leaving out one data point at a time for testing, while the rest is used for training.

These techniques can help ensure that the language model is evaluated using a representative dataset and that the evaluation results are reliable.
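The three validation techniques above differ only in how the data is sliced. The sketch below shows the slicing on a made-up dataset of ten items. The helper `k_fold_splits` is written for this example, and real projects would usually shuffle the data first and use a library routine.

```python
def k_fold_splits(items, k):
    """Yield (train, test) pairs; each fold is used for testing exactly once."""
    if not 2 <= k <= len(items):
        raise ValueError("k must be between 2 and the number of items")
    folds = [items[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test


data = list(range(10))  # stand-in for ten labelled examples

# Holdout validation: a single split, here 80% training and 20% testing.
holdout_train, holdout_test = data[:8], data[8:]

# Cross-validation with 5 folds: five rounds, each testing on a different fold.
five_fold = list(k_fold_splits(data, 5))

# Leave-one-out: as many folds as there are data points.
leave_one_out = list(k_fold_splits(data, len(data)))

for train, test in five_fold:
    print("train:", train, "test:", test)
```

Seen this way, leave-one-out is just cross-validation taken to its extreme, with one item per fold.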

Evaluating large language models can be challenging due to the complexity of the models and the massive amount of data they are trained on. Some common challenges in evaluating language models include:

- Lack of labelled data: in some cases, it may be difficult to obtain enough labelled data to evaluate a language model effectively.

- Domain-specific language: language models trained on general text data may not perform as well when applied to domain-specific language, such as legal or medical jargon. Fine-tuning is a technique that can be used to adapt a pre-trained language model to a specific domain.

- Bias in the data: language models can sometimes learn biases from the data they are trained on, which can lead to inaccurate predictions or discriminatory behaviour. Debiasing is a technique that can be used to mitigate the impact of biased data on a language model’s performance.

To overcome these challenges, techniques such as transfer learning and fine-tuning can be used to adapt a pre-trained language model to a specific task or domain. Additionally, measures such as debiasing can be employed to reduce the impact of biased data on the model’s performance.

Evaluating large language models is a critical step in understanding their effectiveness and potential applications. By using appropriate evaluation metrics and techniques, and overcoming common evaluation challenges, we can ensure that language models are developed and used in an effective, reliable, and unbiased way.

Grace: I edited the cross-validation definition to make it clearer. Maybe I am not the only one who wondered what a fold was? Note that the validation techniques listed are actually all some form of cross-validation!

Operating & supporting an LLM environment

Grace: So finally we get to the all-about-you part of the blog post. You, in this context, being the reader who is an IT pro but not an LLM expert, and who will probably need to figure out how to support LLM developers and apps that use LLMs. Over to ChatGPT:

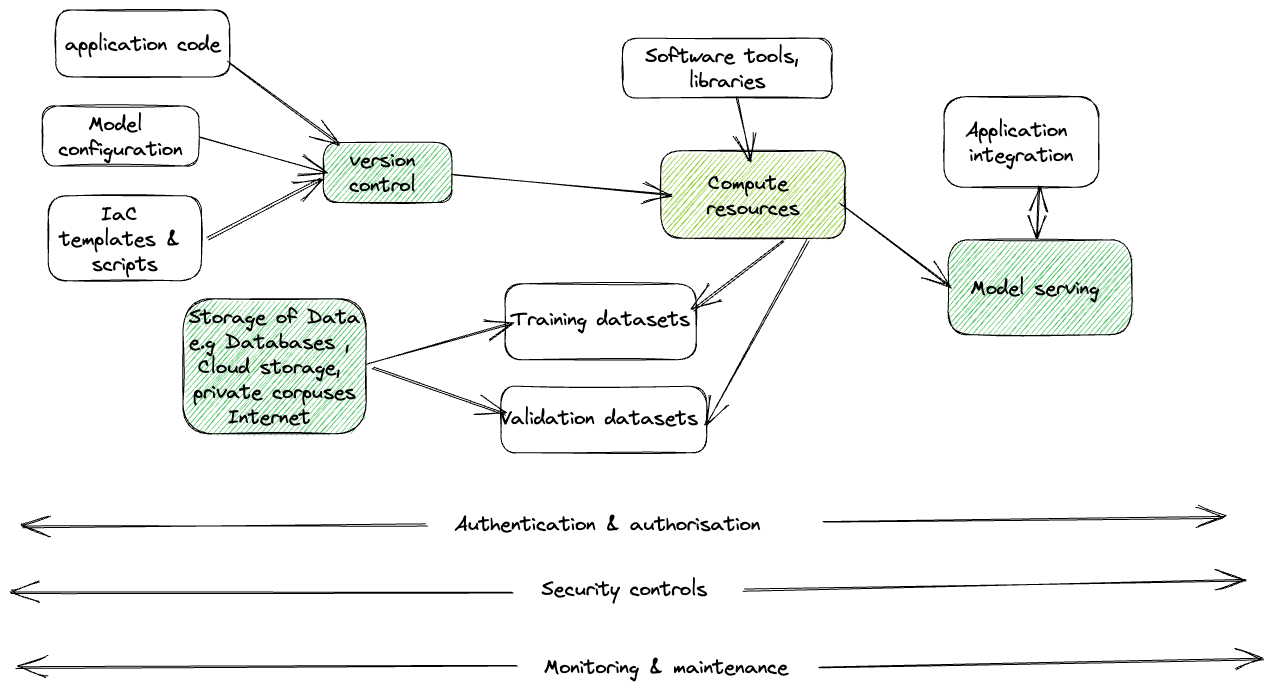

Large Language Models (LLMs) are powerful tools for natural language processing tasks, but they require specialised resources and infrastructure to operate effectively. In this chapter, we will discuss the components and resources required to operate and support LLM-based applications.

Diagram : What needs thinking about when supporting LLM

Compute :

Compute resources play a crucial role in operating and supporting a large language model (LLM)-based application. The requirements for computing resources depend on the size and complexity of the LLM, the size of the training dataset, and the intended use of the model.

One option for compute is to use cloud-based resources, such as Google Cloud Platform, Amazon Web Services (AWS) or Microsoft Azure. These cloud providers offer a variety of compute instances with different amounts of CPU, memory, and GPU resources. Using cloud resources allows for flexibility in scaling up or down as needed and can be cost-effective compared to purchasing and maintaining on-premise hardware.

Grace: I wanted to understand CPU requirements in more detail so I queried about the use of TPUs versus GPUs.

TPUs (Tensor Processing Units) are specialized hardware accelerators developed by Google specifically for machine learning workloads, including training large language models. In terms of raw processing power, TPUs are generally faster than GPUs for large-scale training tasks. However, the performance benefits of TPUs may vary depending on the specific task, the size and complexity of the model, and the amount of available data.

Compared to GPUs, TPUs can offer several advantages for some workloads, including faster training times, lower costs, and increased scalability. TPUs are designed to work with large datasets and complex models, which makes them well suited to training LLMs. They also offer higher performance than CPUs, which can lead to faster inference times for LLM-based applications.

In some cases, GPUs may still be a more cost-effective option for training LLMs, particularly for smaller models or when working with limited resources. It’s important to carefully consider the specific requirements of your project and compare the performance and cost trade-offs of different hardware options before making a decision.

To use TPUs, you need to have access to a cloud provider that offers them, such as Google Cloud Platform. You can then choose to train your LLM using TPUs instead of GPUs or CPUs. This will require some modifications to your training code, but many of the popular deep learning frameworks support TPUs: TensorFlow and JAX have built-in support, and PyTorch supports them through the PyTorch/XLA package.

Grace: The paper Scalable Training of Language Models using JAX pjit and TPUv4 by Cohere discusses using TPUs for training language models if you want a deeper understanding.

Storage:

Developing LLMs can require significant storage capacity to store large datasets and model checkpoints. The amount of storage required will depend on the size of the dataset, the complexity of the model, and the number of training iterations.

For example, training OpenAI’s GPT-3, which has 175 billion parameters ( Grace : I’d always thought it was over this number but I’m nit picking!) , requires hundreds of terabytes of storage. However, smaller models can be trained on less storage capacity.
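To get a feel for where the storage goes, here is a rough back-of-the-envelope sketch for model checkpoints. The bytes-per-parameter values are assumptions: 2 bytes assumes weights stored in 16-bit precision, and 14 bytes assumes a full training checkpoint holding 16-bit weights plus a 32-bit master copy and two 32-bit Adam optimizer values per parameter. The number of checkpoints kept is also a made-up figure. Real setups vary, and this excludes the training dataset itself.

```python
def checkpoint_size_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate checkpoint size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175e9  # parameter count quoted in the text above

# Assumption: weights only, stored in 16-bit precision (2 bytes each).
weights_only_gb = checkpoint_size_gb(GPT3_PARAMS, 2)

# Assumption: weights plus optimizer state, 14 bytes per parameter.
full_checkpoint_gb = checkpoint_size_gb(GPT3_PARAMS, 14)

# Hypothetical retention policy: keep the last 10 full checkpoints.
retained_tb = full_checkpoint_gb * 10 / 1000

print(f"weights only:     {weights_only_gb:,.0f} GB")
print(f"full checkpoint:  {full_checkpoint_gb:,.0f} GB")
print(f"10 checkpoints:   {retained_tb:,.1f} TB")
```

Under these assumptions a handful of retained checkpoints already runs to tens of terabytes, before counting raw and preprocessed datasets, logs and other artefacts.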

In addition to storage for the dataset and model checkpoints, it is also recommended to have storage for logs, code, and other project artefacts. Cloud storage solutions such as Amazon S3 or Google Cloud Storage can be useful for storing and managing data and model artefacts. It is important to choose a storage solution that is reliable, scalable, and cost-effective.

Data Management :

LLM-based applications require large amounts of training data, and you need to manage and store this data effectively. You need a data management system that can handle the large volumes of data required to train LLMs. This system should be able to handle data preprocessing, indexing, and storage. Common data management systems used for LLM-based applications include Hadoop, Spark, and Elasticsearch.
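As a small illustration of the preprocessing step mentioned above, the sketch below normalises whitespace and drops exact duplicates using a content hash. It uses only the Python standard library; the function names are hypothetical, and a real pipeline would typically run this kind of logic at scale in a system such as Spark and add fuzzy deduplication and quality filters.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(docs):
    """Return cleaned documents with empty entries and exact duplicates removed.

    Duplicates are detected case-insensitively on the cleaned text.
    """
    seen = set()
    unique = []
    for doc in docs:
        cleaned = normalize(doc)
        if not cleaned:
            continue  # skip documents that are empty after cleaning
        digest = hashlib.sha256(cleaned.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(cleaned)
    return unique
```

Hashing the cleaned text means only the digests need to be kept in memory for the duplicate check, rather than every document seen so far.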

Software and Libraries:

To operate and support LLM-based applications, you need access to software tools and libraries. The most popular libraries for developing and deploying LLM-based applications include TensorFlow, PyTorch, and Hugging Face Transformers. These libraries provide pre-trained models, optimization algorithms, and tools for fine-tuning models on specific tasks.

Model Deployment:

Once you have trained and fine-tuned your LLM-based application, you need to deploy it on a production system. The deployment process involves packaging the model and its dependencies into a container or virtual machine and deploying it to a cloud-based or on-premise environment. Common tools used for deploying LLM-based applications include Docker and Kubernetes.

Monitoring and Maintenance:

LLM-based applications require continuous monitoring and maintenance to ensure they are performing as expected. You need to monitor the application’s performance, logs, and metrics to identify any issues that may arise. You also need to perform regular maintenance tasks such as updating the software, patching security vulnerabilities, and scaling the infrastructure to handle changes in demand.
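One common thing to monitor is response latency. The sketch below computes a nearest-rank percentile over a window of latency samples and flags when it crosses a threshold. The function names, the 95th percentile choice and the threshold are illustrative assumptions. In practice you would normally get this from your monitoring stack rather than hand-rolled code.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    # Multiply before dividing to avoid floating point surprises in the rank.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_alert(latencies_ms, threshold_ms, pct=95):
    """Return (should_alert, observed_percentile_ms)."""
    observed = percentile(latencies_ms, pct)
    return observed > threshold_ms, observed
```

A percentile is used rather than an average because a small number of very slow responses can hide behind a healthy looking mean.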

Security:

LLM-based applications can be vulnerable to security threats, such as data breaches, hacking attempts, and cyberattacks. To ensure the security of your LLM-based application, you need to implement security measures such as encryption, access controls, and monitoring. You also need to comply with relevant data privacy and security regulations.

Grace : Come on let’s not forget what the folks who are developing using LLMs need!

Developers need access to powerful workstations ( These can obviously be on demand in the cloud!) with high-end CPUs and GPUs to support the development and testing of LLM models. These workstations should also have sufficient memory and storage capacity to handle large datasets and model files.

In addition to hardware, infrastructure includes software tools and frameworks for developing, deploying, and managing LLM-based applications. This includes programming languages such as Python, software frameworks such as TensorFlow, PyTorch, or Hugging Face Transformers, and development environments such as Jupyter notebooks and integrated development environments (IDEs).

In summary, operating and supporting LLM-based applications require specialized infrastructure, software, and data management tools. You also need to ensure the security and reliability of your application by monitoring and maintaining it regularly.

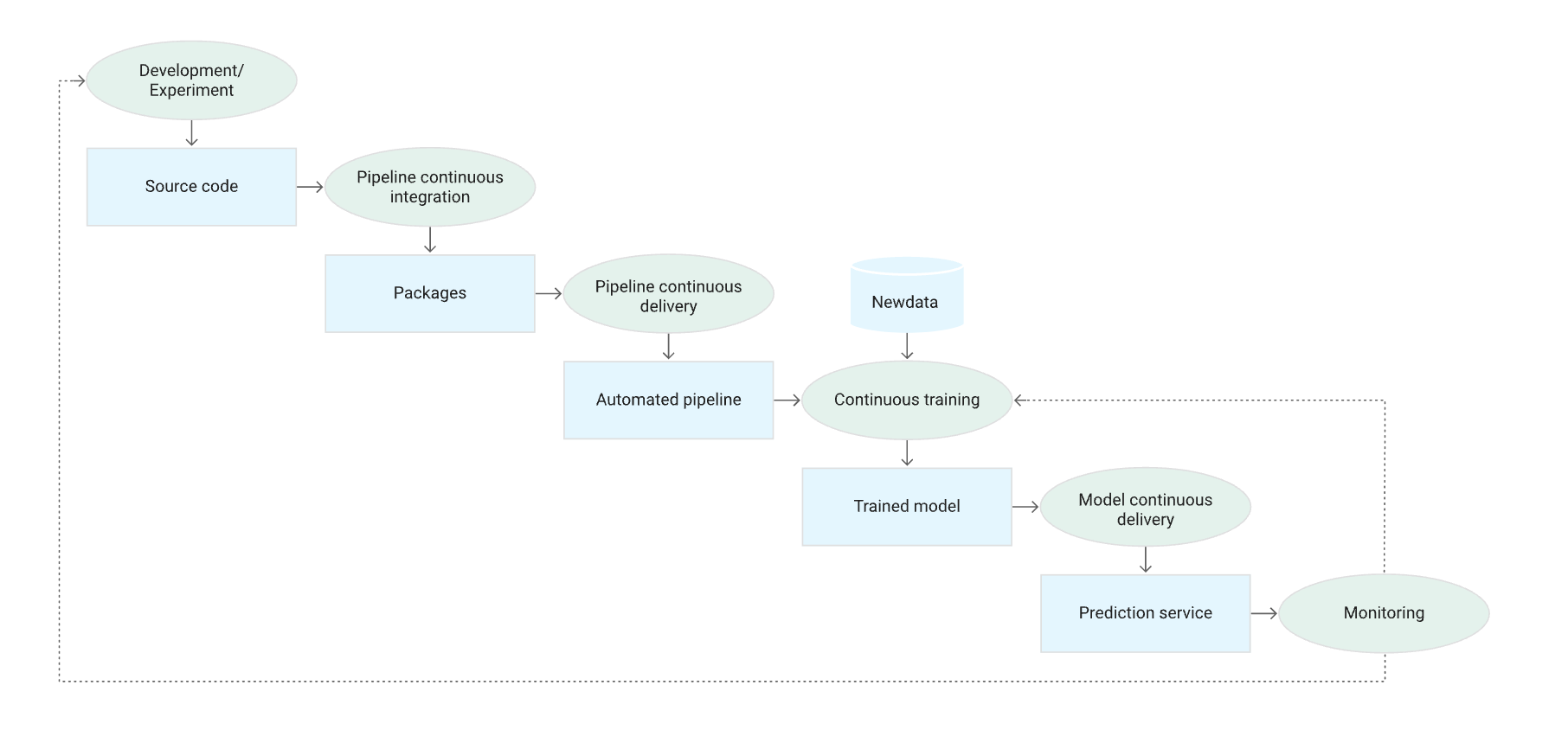

Grace: The practice of managing the pipeline described in the previous section in an automated fashion is known as MLOps. ChatGPT is a fan, as am I:

MLOps, or machine learning operations, is a set of practices and tools used to manage the lifecycle of machine learning models. It combines the principles of DevOps with the unique requirements of machine learning workflows to support the development, deployment, and monitoring of machine learning models at scale. In the context of a large language model (LLM) environment, MLOps plays a crucial role in ensuring that the LLM is operating at peak performance and delivering the expected results.

There are several ways in which MLOps can be used to support a LLM environment. Some of these include:

- Version control: MLOps relies heavily on version control to keep track of changes to models and their associated code. This is important for LLMs because they often require large amounts of data and processing power to train, and the ability to roll back to a previous version of the model or code can be critical in the event of errors or issues.

- Continuous integration and deployment (CI/CD): Similar to traditional software development, MLOps can use CI/CD pipelines to automate the build, test, and deployment of LLMs. This helps to ensure that changes to the LLM are thoroughly tested and validated before they are released into production, reducing the risk of errors or unexpected behavior.

- Model monitoring: Once a LLM is deployed, it is important to monitor its performance and behavior to ensure that it is delivering the expected results. MLOps tools can be used to monitor the performance of the LLM, such as its accuracy and response time, and alert developers to potential issues or anomalies.

- Automated scaling: LLMs can require significant processing power and resources, and MLOps tools can be used to automatically scale up or down the infrastructure supporting the LLM based on demand. This ensures that the LLM can handle spikes in traffic or processing requirements without impacting performance.

- Data management: Managing the large amounts of data required to train and operate a LLM can be challenging, but MLOps tools can help to automate the process of collecting, cleaning, and managing data. This can include tools for data preprocessing, data validation, and data governance to ensure that the data used to train the LLM is accurate, relevant, and compliant with any relevant regulations or policies.

Overall, MLOps is an important part of operating and supporting a LLM environment. By providing a framework for managing the lifecycle of LLMs, MLOps helps to ensure that LLMs are operating at peak performance and delivering the expected results, while also minimizing the risk of errors or issues that can impact the user experience.
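To make the CI/CD idea a little more concrete, here is a minimal sketch of a promotion gate: a check a pipeline could run before releasing a new model version. The metric names, the allowed accuracy regression and the latency budget are all hypothetical and would come from your own evaluation suite and service level objectives.

```python
def should_promote(
    candidate: dict,
    baseline: dict,
    max_regression: float = 0.01,
    max_latency_ms: float = 500.0,
) -> bool:
    """Decide whether a candidate model may replace the current baseline.

    Both dicts are assumed to hold 'accuracy' (0 to 1) and 'p95_latency_ms'.
    """
    accuracy_ok = candidate["accuracy"] >= baseline["accuracy"] - max_regression
    latency_ok = candidate["p95_latency_ms"] <= max_latency_ms
    return accuracy_ok and latency_ok


baseline = {"accuracy": 0.80, "p95_latency_ms": 400.0}
candidate = {"accuracy": 0.82, "p95_latency_ms": 450.0}
print("promote" if should_promote(candidate, baseline) else "hold back")
```

Keeping the gate as a plain function makes it easy to version alongside the model code and to call from whichever CI/CD tool you already use.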

Grace: Google Cloud has some great documentation on this practice. Start here to understand the concepts: https://cloud.google.com/architecture/mlops-continuous-delivery-and-automation-pipelines-in-machine-learning and read the whitepaper. It’s worth the read if you need to support any ML based apps, not just LLM based applications.

I borrowed the diagram below from that page

Diagram : ML CI/CD pipeline

And as a platform admin having to configure the environment to support both development and production of LLM based solutions, you too can make your life a little easier by taking advantage of AI to help you build out your infrastructure. It’s not just “code” completion that GAIs can help with; they can help with YAML & JSON completion too, for example https://github.com/gofireflyio/aiac

I would however caution that you use it wisely & ensure you understand your responsibilities as a platform admin. You must understand the various best practice guidance and repos out there, for example Google Cloud’s GoogleCloudPlatform/cloud-foundation-fabric repo on GitHub (end-to-end modular samples for Terraform on GCP).

So I spoke a lot about MLOps, but when working with LLMs you need to think of LLMOps, which is dedicated to overseeing the lifecycle of LLMs from training to maintenance using innovative tools and methodologies. It’s a subset of MLOps and everything discussed applies. These two are great places to start: https://www.gantry.io/blog/test-driven-development-llm

https://github.com/tensorchord/Awesome-LLMOps

What you can expect to be doing

I have called out some obvious personas but I am totally aware that these roles overlap and intermingle, and it’s often hard to fit yourself into a box. That aside, the following section should help when you are figuring out who would typically do what! You will note that your role doesn’t actually change significantly, but you now need to take LLM influences into consideration. With all the noise about what jobs will be affected by GAIs, it’s nice to see where you fit into the puzzle.

Architect

As an architect, you are responsible for designing the architecture for the LLM pipeline. This includes selecting the appropriate tools, technologies, and platforms for building, deploying, and maintaining the LLM pipeline.

Some of the key tasks that you need to undertake as an architect include:

- Define the architecture: You need to define the high-level architecture of the LLM pipeline. This includes deciding the number of layers in the model, the type of layers, and the type of activation functions to be used.

- Choose the right platform: You need to choose the appropriate platform for building and deploying the LLM pipeline. This includes selecting the cloud platform, such as AWS or Google Cloud Platform, that provides the necessary resources for training and deploying the LLM model.

- Select the right tools: You need to select the right tools and libraries for developing the LLM pipeline. This includes selecting the appropriate deep learning frameworks such as TensorFlow or PyTorch.

- Decide on the data storage: You need to decide on the appropriate data storage solution for the LLM pipeline. This includes selecting the type of database to be used for storing training data and the final LLM model.

- Define the API endpoints: You need to define the API endpoints for the LLM pipeline. This includes deciding on the API interface for accessing the LLM model, and the parameters that can be passed to the model.

- Ensure scalability: You need to ensure that the LLM pipeline is scalable. This includes designing the architecture to handle a large volume of data and user requests, and selecting the appropriate infrastructure to support the LLM pipeline.

- Ensure security: You need to ensure that the LLM pipeline is secure. This includes designing the architecture to handle sensitive data, implementing authentication and authorization mechanisms, and monitoring the LLM pipeline for potential security threats.

- Document the architecture: You need to document the LLM pipeline architecture. This includes creating diagrams and documentation to describe the different components of the LLM pipeline and how they are interconnected.
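As an illustration of the "Define the API endpoints" task above, the sketch below shows one way to pin down the request contract for a text generation endpoint and validate incoming payloads. Everything here is a hypothetical example: the field names, defaults and limits are assumptions, not the API of any particular LLM service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerateRequest:
    """Hypothetical request body for a text generation endpoint."""

    prompt: str
    max_tokens: int = 256     # assumed default
    temperature: float = 0.7  # assumed default

    def __post_init__(self):
        if not isinstance(self.prompt, str) or not self.prompt.strip():
            raise ValueError("prompt must be a non-empty string")
        if not 1 <= self.max_tokens <= 4096:  # assumed upper limit
            raise ValueError("max_tokens must be between 1 and 4096")
        if not 0.0 <= self.temperature <= 2.0:  # assumed range
            raise ValueError("temperature must be between 0.0 and 2.0")


def parse_request(payload: dict) -> GenerateRequest:
    """Validate a decoded JSON payload and reject unknown fields."""
    allowed = {"prompt", "max_tokens", "temperature"}
    unknown = set(payload) - allowed
    if unknown:
        raise ValueError(f"unknown fields: {sorted(unknown)}")
    if "prompt" not in payload:
        raise ValueError("prompt is required")
    return GenerateRequest(**payload)
```

Rejecting unknown fields and capping `max_tokens` at the edge also helps with the scalability and security tasks in the same list, since it bounds how much work a single request can ask for.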

Security admin responsibilities

As a security admin you will play a critical role in ensuring the security and integrity of LLM pipelines, and should work closely with developers, data scientists, and other stakeholders to implement appropriate security measures and best practices.

Grace: If you’ve read any of my posts before you’ll know I have a bee in my bonnet about shifting left, and the same principles apply here as they do with everything else.

ChatGPT sums up your responsibilities as security admin working with LLM based solutions well:

As a security admin, there are several tasks you may need to undertake to support LLM pipelines. Here are some examples:

- Risk assessment: Before deploying an LLM pipeline, it is important to conduct a thorough risk assessment to identify potential security risks and vulnerabilities. This includes assessing the security of the infrastructure, data storage, and communication channels used in the pipeline.

- Data privacy and compliance: Depending on the type of data used in the LLM pipeline, you may need to ensure compliance with data privacy regulations such as GDPR or HIPAA. This involves implementing appropriate data protection measures and ensuring that data is stored and processed securely.

- Access control and authentication: To prevent unauthorized access to the LLM pipeline and associated data, you need to implement strong access control measures. This includes user authentication, role-based access control, and monitoring of access logs.

- Threat detection and response: You need to have mechanisms in place to detect and respond to security threats such as malware, phishing attacks, and unauthorized access attempts. This includes implementing intrusion detection and prevention systems, firewalls, and other security tools.

- Incident response and disaster recovery: In the event of a security incident or system failure, you need to have a well-defined incident response and disaster recovery plan in place. This includes procedures for identifying and containing the incident, restoring services, and conducting post-incident analysis and remediation.

- Regular security audits and testing: To ensure ongoing security and compliance, you should conduct regular security audits and testing of the LLM pipeline and associated systems. This includes vulnerability scanning, penetration testing, and other security assessments.
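The access control item above can be sketched as a simple role-based check with an audit trail. The roles, permission strings and log format below are invented for illustration. In a real deployment you would rely on your cloud provider's identity and access management and its audit logging rather than code like this.

```python
# Hypothetical role-to-permission mapping for an LLM pipeline.
ROLE_PERMISSIONS = {
    "data-scientist": {"model:train", "model:evaluate", "data:read"},
    "platform-admin": {"infra:provision", "model:deploy", "logs:read"},
    "support": {"logs:read"},
}


def is_allowed(roles, permission: str) -> bool:
    """True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def audit_entry(user: str, roles, permission: str) -> str:
    """Build a log line recording the access decision."""
    decision = "ALLOW" if is_allowed(roles, permission) else "DENY"
    return f"{decision} user={user} permission={permission}"


print(audit_entry("alice", ["data-scientist"], "model:train"))
print(audit_entry("bob", ["support"], "model:deploy"))
```

Unknown roles fall through to an empty permission set, so the default is to deny, which is the behaviour you want from any access check.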

Network admin responsibilities

As a network admin, there are several tasks you may need to undertake to support LLM pipelines, including:

- Network infrastructure: You need to ensure that your network infrastructure is capable of handling the increased traffic and bandwidth requirements associated with LLM pipelines. This may involve upgrading your network equipment, adding additional network capacity, or implementing Quality of Service (QoS) policies to prioritize LLM traffic.

- Security: LLM pipelines can involve sensitive data, so you need to ensure that your network is secure. This may involve implementing firewalls, intrusion detection/prevention systems, and access controls to prevent unauthorized access to LLM resources.

- Monitoring and troubleshooting: You need to be able to monitor your network and LLM pipeline for issues such as bottlenecks, dropped packets, and other performance problems. This may involve using network monitoring tools such as packet sniffers, bandwidth analyzers, and performance metrics to identify and troubleshoot issues.

- Integration: LLM pipelines may involve integrating with other systems or applications, such as data storage systems, analytics tools, or other third-party services. You need to ensure that these integrations are properly configured and tested to ensure smooth operation of the LLM pipeline.

- Scalability: LLM pipelines may need to be scaled up or down depending on the workload. You need to be able to adjust network capacity, hardware resources, and other infrastructure components to ensure that the LLM pipeline can handle increased traffic or demand.
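To put the bandwidth point above into numbers, here is a small sketch that estimates how long it takes to move a large model file across a network link. The 80% efficiency factor is an assumption to allow for protocol overhead and contention. The 350 GB example corresponds roughly to the weights of a 175 billion parameter model stored at 2 bytes per parameter.

```python
def transfer_seconds(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate transfer time for size_gb gigabytes over a link_gbps link.

    efficiency is the assumed fraction of the nominal bandwidth actually usable.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # bytes to bits
    return size_gigabits / (link_gbps * efficiency)


for gbps in (1, 10, 100):
    minutes = transfer_seconds(350, gbps) / 60
    print(f"350 GB over {gbps} Gbps: about {minutes:.1f} minutes")
```

Numbers like these help when deciding whether model artefacts should be pulled at start-up, cached close to the serving nodes, or baked into an image.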

Cloud Platform Admin responsibilities

Grace: I am making the assumption you are using the cloud!

As a cloud platform admin, you are responsible for managing the platform or infrastructure where the LLM pipelines run. Some of the tasks you may need to undertake to support LLM pipelines include:

- Provisioning and managing the cloud infrastructure: You need to ensure that the cloud infrastructure is provisioned and configured properly to support LLM pipelines. This includes setting up virtual machines, storage, networking, and security.

- Managing cloud resources: You need to monitor and manage cloud resources to ensure that there are enough resources available to support LLM pipelines. This includes monitoring CPU, memory, disk space, and network bandwidth usage.

- Install and configure the necessary software: You need to install and configure the software required for LLM pipelines, including the operating system, database, web server, and other tools. You also need to ensure that the software is up-to-date and properly configured for optimal performance.

- Ensuring high availability and fault tolerance: You need to ensure that the LLM pipelines are highly available and fault tolerant. This includes setting up load balancers, auto scaling groups, and configuring backup and recovery procedures.

- Managing security: You need to ensure that the cloud infrastructure is secured and meets compliance requirements. This includes setting up firewalls, configuring network security groups, and managing identity and access management.

- Manage user access: You need to manage user access to the platform and ensure that users have the appropriate permissions to access and use LLM pipelines. This involves setting up user accounts, roles, and permissions, and enforcing security policies and best practices.

- Monitoring and troubleshooting: You need to monitor the LLM pipelines and troubleshoot issues that arise. This includes setting up monitoring tools, logs analysis, and using metrics to identify issues.

- Performance optimization: You need to optimize the performance of the LLM pipelines by tuning the infrastructure, optimizing the software stack, and using performance monitoring tools.

- Automating tasks: You need to automate tasks such as provisioning, deployment, monitoring, and scaling using tools such as Terraform, Ansible, or Chef.

- Providing support: You need to provide support to the development team, data scientists, and other stakeholders who are using the LLM pipelines. This includes providing documentation, training, and troubleshooting assistance.

- Backup and restore data: You need to set up a backup and restore strategy for LLM pipelines data to ensure that data is protected and can be restored in case of data loss or corruption.

- Implement a disaster recovery strategy.

- Stay up-to-date on LLM trends and technologies: As a platform admin, you need to stay up-to-date on LLM trends and technologies to ensure that the platform meets the changing needs of users and can support the latest LLM pipelines and tools. This involves attending conferences, training sessions, and keeping up with industry news and developments.
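For the auto scaling task in the list above, the core calculation is simple. The sketch below follows the basic formula documented for the Kubernetes Horizontal Pod Autoscaler, where the desired replica count is the current count scaled by the ratio of the observed metric to its target, rounded up. It is simplified: the real autoscaler also applies a tolerance band and stabilisation windows, and the minimum and maximum bounds here are placeholder values.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Replica count needed to bring the metric back to its target value."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas running at 90% utilisation against a 60% target gives `desired_replicas(4, 90, 60)`, which works out to 6 replicas.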

Data scientist responsibilities

As a data scientist, there are several tasks that you need to undertake to support LLM pipelines, including:

- Data Preparation: One of the critical tasks of a data scientist is to ensure that the data used to train LLM models are clean, relevant, and representative of the task at hand. You will need to analyze and preprocess data to extract meaningful features and remove noise.

- Model Development: You will need to use software tools like TensorFlow, PyTorch, or Hugging Face Transformers to develop and train LLM models. You may also need to fine-tune pre-trained models to achieve better performance.

- Hyperparameter Tuning: LLM models have several hyperparameters that need to be optimized to achieve the best performance. As a data scientist, you will need to experiment with different values of hyperparameters and choose the best configuration for your use case.

- Model Evaluation: Once you have trained your LLM model, you will need to evaluate its performance. You will need to use metrics such as accuracy, perplexity, and F1 score to assess how well your model is performing.

- Model Deployment: After you have developed and evaluated your LLM model, you will need to deploy it to a production environment. You will need to work with DevOps engineers to ensure that the model is integrated into the production pipeline and deployed with the necessary infrastructure.

- Monitoring and Maintenance: Once your LLM model is deployed, you will need to monitor its performance and make necessary adjustments. You will need to work with DevOps engineers to ensure that the model is operating efficiently and address any issues that arise.

- Continual Improvement: As new data becomes available or the business requirements change, you will need to improve your LLM model continuously. This involves retraining the model, fine-tuning the hyperparameters, and deploying the new model to production.

Overall, as a data scientist working with LLM pipelines, you will need to collaborate closely with DevOps engineers, security administrators, and network administrators to ensure that the LLM environment is secure, reliable, and scalable.
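Two of the metrics named in the Model Evaluation item above are easy to show in a few lines. Perplexity is the exponential of the average negative log-probability the model assigned to each token, and F1 is the harmonic mean of precision and recall. This sketch assumes you already have per-token natural-log probabilities and true/false positive counts from your evaluation run.

```python
import math


def perplexity(token_logprobs) -> float:
    """Perplexity from a non-empty list of natural-log token probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when there are no true positives."""
    if true_pos == 0:
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)
```

Lower perplexity means the model was less "surprised" by the text. A model that assigned probability 0.25 to every token would have a perplexity of 4.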

Data engineer responsibilities

As a data engineer, your role in supporting LLM pipelines is crucial in ensuring that the pipelines are efficient, scalable, and reliable. Some of the tasks you need to undertake to support LLM pipelines include:

- Data preprocessing: You need to preprocess the data before feeding it into the LLM. This may involve data cleaning, formatting, and normalization to ensure that the data is consistent and compatible with the LLM.

- Data storage and retrieval: You need to design and implement a data storage system that can handle large volumes of data and support efficient data retrieval. This may involve using distributed storage systems, such as the Hadoop Distributed File System (HDFS) or the Apache Cassandra database, or cloud-based storage solutions, such as Amazon S3 or Google Cloud Storage.

- Data pipeline orchestration: You need to design and implement a data pipeline that can handle data ingestion, processing, and output. This may involve using data pipeline orchestration tools, such as Apache Airflow or Kubeflow, to manage the flow of data through the pipeline.

- Model training and deployment: You need to design and implement a model training and deployment system that can handle the large computing requirements of LLMs. This may involve using cloud-based services, such as Amazon SageMaker or Google Cloud Vertex AI (formerly AI Platform), to train and deploy LLM models.

- Monitoring and maintenance: You need to monitor the LLM pipeline to ensure that it is running smoothly and efficiently. This may involve implementing monitoring and logging systems to detect and diagnose issues in the pipeline. Additionally, you need to perform regular maintenance tasks, such as software updates and system backups, to ensure the pipeline remains stable and secure.
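The orchestration item above boils down to running tasks in dependency order. Tools like Apache Airflow or Kubeflow do this at scale with scheduling, retries and monitoring, but the core idea can be sketched with Python's standard library `graphlib` module (Python 3.9 or later). The stage names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages that must finish before it starts.
PIPELINE = {
    "ingest": set(),
    "clean": {"ingest"},
    "tokenize": {"clean"},
    "train": {"tokenize"},
    "evaluate": {"train"},
}


def run_pipeline(dag: dict, tasks: dict) -> list:
    """Run each task callable in dependency order and return that order."""
    order = list(TopologicalSorter(dag).static_order())
    for name in order:
        tasks[name]()  # a real orchestrator would add retries and logging here
    return order


if __name__ == "__main__":
    demo_tasks = {name: (lambda n=name: print(f"running {n}")) for name in PIPELINE}
    run_pipeline(PIPELINE, demo_tasks)
```

Declaring the dependencies as data rather than hard-coding the call sequence is what lets an orchestrator work out what can run in parallel and what to re-run after a failure.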

Support responsibilities

As a support specialist, you play a crucial role in ensuring the smooth operation of LLM pipelines. Your responsibilities may include:

- Monitoring: You will need to monitor the LLM pipeline regularly to ensure it is running as expected. This includes monitoring performance, logs, and error messages.

- Troubleshooting: In case of any issues with the LLM pipeline, you will need to troubleshoot and resolve them quickly. This may involve debugging code, reviewing logs, or escalating issues to other teams.

- Responding to user queries: You will need to respond to user queries related to the LLM pipeline. This may involve answering questions related to usage, functionality, or resolving issues related to the application.

- Upgrades and maintenance: You will need to ensure that the LLM pipeline is up to date and perform regular maintenance to ensure it is running smoothly. This includes performing updates, backups, and maintaining system stability.

- Documentation: You will need to maintain documentation for the LLM pipeline, including user manuals, troubleshooting guides, and technical specifications. This will help users troubleshoot issues on their own and reduce the number of support requests.

- Training: You may need to provide training to users on how to use the LLM pipeline, its features, and its functionalities. This will help users get up to speed quickly and reduce the number of support requests.

- Coordination: You will need to coordinate with other teams, such as developers, data scientists, and platform administrators, to resolve issues related to the LLM pipeline.

- Escalation: If a problem cannot be resolved at the support level, you may need to escalate the issue to the appropriate team, such as development, platform, or data engineering team, for resolution.
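As a small example of the log review mentioned in the monitoring and troubleshooting items above, the sketch below counts error lines per component so you can see where to look first, or which team to escalate to. The log format (`LEVEL component: message`) and the component names are invented for illustration. Your own logs will look different.

```python
import re
from collections import Counter

# Assumed log line shape: "ERROR inference-api: request timed out"
LOG_PATTERN = re.compile(r"^(?P<level>INFO|WARNING|ERROR)\s+(?P<component>[\w-]+):")


def summarize_errors(lines) -> Counter:
    """Count ERROR lines per component, ignoring lines that do not match."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and match.group("level") == "ERROR":
            counts[match.group("component")] += 1
    return counts


sample = [
    "ERROR inference-api: request timed out",
    "INFO inference-api: request served",
    "ERROR tokenizer: invalid input encoding",
    "ERROR inference-api: out of memory",
]
for component, count in summarize_errors(sample).most_common():
    print(f"{component}: {count} errors")
```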

Grace : Before you ask, yes I did ask about SRE, but with 3 books out there and ChatGPT’s reply being way too shallow, and after seeing how long this ended up being, I had to decide to stop prompting at some point!

After drafting this blog post I read this paper https://arxiv.org/pdf/2303.12132.pdf which is probably the best thing I’ve read on GAI so far. I loved the history lesson, the educated guesses, and the fact it was very up to date (at the time of writing), and as someone with a security background it did seem it was aimed at me as a reader!