Top 10 Security & Privacy risks when using Large Language Models

As with any technology new or old, understanding what the security & privacy threats are is an important first step to figuring out how to safely incorporate their use as part of any solution. The explosion of interest and use cases for LLM has already led to a number of well publicised security & privacy concerns. This post aims to discuss what I consider to be the top 10 privacy and security risks ( in no particular order) for enterprises when contemplating incorporating LLMs into their solutions . I also explain a number of ways to mitigate the threats & include some great articles for further reading.

As it’s such a fast moving field and everything is changing fast my advice is to engage a professional cyber security firm who actually understands the issues to help so they can provide concrete actionable guidance to help mitigate the identified & emerging threats .

Anyway onwards and I apologise for the length but grab a cuppa or your beverage of choice and maybe a biscuit or two and settle down for hopefully what you’ll find a useful read.

I settled on the following as my top 10 privacy & security risks when using LLMs that enterprises need to be aware of

- Data leakage

- Reverse engineering of source training data

- Incorrect content generation

- Model bias

- Harmful content generation

- Prompt injection

- Unauthorised access to model training process

- Compliance/regulatory incompatibility

- In-secure code creation

- Deep Fakes

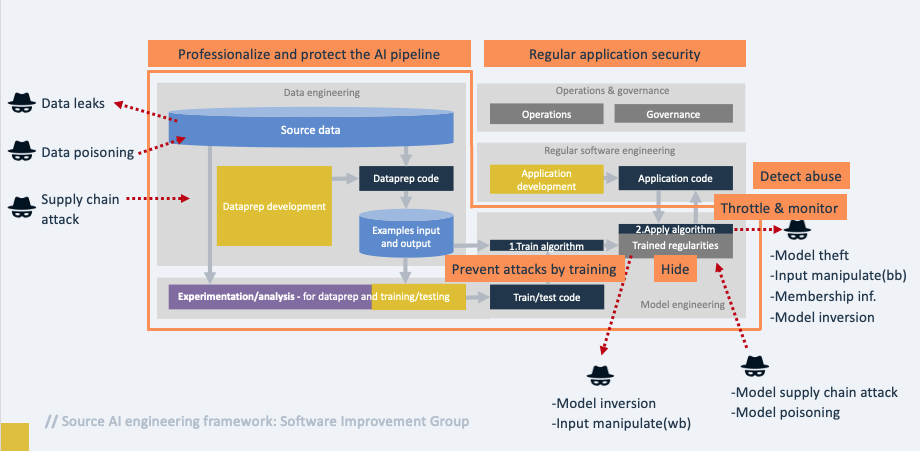

As I was getting to the end of writing this post I was happy to see that there is an OWASP Top 10 List for Large Language Models which I strongly recommend that you keep on top of. It’s also not nearly as verbose as this post! Their list differs from my list but we do have some overlaps. I also stumbled across the OWASP AI Security and Privacy Guide where I borrowed this great image from which captures in one picture a threat model for AI

It’s a great read and who knows if I’d seen that earlier I may not have decided to write this so I personally am glad I hadn’t stumbled across it earlier as I now have a deeper understanding of the threats so it wasn’t a wasted effort for me anyway plus I really enjoyed digging into this.

Update July : A colleague shared this great survey on the Challenges and Applications of Large Language Models . It’s long but worth reading.

Data leakage

LLMs are trained on massive datasets of text and code, which can contain sensitive information such as personally identifiable information (PII), trade secrets, and intellectual property. This means that they can potentially memorise and leak sensitive information that is present in the training data. There are a number of ways in which data leakage can occur when using LLMs:

Contextual information leakage: When using large language models, it’s common to provide some context or prompt to guide the model’s response. However, if the prompt contains sensitive information that should not be disclosed, there is a risk of leakage. The model might generate or infer sensitive details from the provided context and include them in its output.

Membership inference attacks: These attacks involve querying the LLM with specific prompts or patterns that are likely to trigger the model to generate text that contains sensitive information. For example, an attacker could query the LLM with the prompt “My credit card number is” and the model might generate the text “1234-5678-9012-3456”. This information can be used by the attacker to launch other attacks, such as data poisoning, disclosure of propretary information and identity theft .

Model extraction attacks: These attacks are typically carried out by attackers who have access to the LLM model. The attacker will try to reverse-engineer the model or use a technique called “model distillation ” to extract the model itself. Once the model has been extracted, the attacker can use it to generate text, which could contain sensitive information.

There are a number of steps that can be taken to mitigate the risk of data leakage when using LLMs:

Sanitize the training data: This involves removing any sensitive information from the training data before the model is trained.

Use a privacy-preserving training algorithm/approach: These algorithms are designed to prevent the model from memorising sensitive information.

- Differential privacy is a technique that adds noise to data in a way that preserves the overall distribution of the data, while making it difficult to identify individual records. This can be used to protect the privacy of individuals whose data is being used to train a large language model.

- Homomorphic encryption is a technique that allows data to be encrypted and then processed without decrypting it. This can be used to train a large language model on encrypted data, which can help to protect the privacy of the data.

- Federated learning is a technique that allows multiple parties to train a machine learning model on their own data, without sharing the data with each other. This can help to protect the privacy of the data, as each party only shares the model updates with the other parties.

- Secure Multi-Party Computation (MPC) is a cryptographic technique that allows multiple parties to jointly compute a function on their private inputs without revealing those inputs to each other. By utilising MPC, training data can be kept private while still collaborating to train a model. The computations are performed in a distributed manner, ensuring that no individual party has access to the complete training data

- Knowledge distillation involves training a smaller, less resource-intensive model (student model) to mimic the behaviour of a larger, more powerful model (teacher model). By using the teacher model’s predictions as a training target, the student model can be trained without directly accessing or revealing the sensitive training data used for training the teacher model.

- Synthetic data generation techniques can be employed to create a data set that simulates the statistical properties of the original data. This synthetic data can be used for training without revealing any real user information. Techniques like generative adversarial networks (GANs) or differential privacy-based synthetic data generation methods can be utilised for this purpose. Tools such as https://gretel.ai/ can be used to assist with this

Each of the privacy-preserving training algorithm/approach techniques described has its own advantages and limitations. The choice of privacy-preserving training algorithm depends on factors such as the specific use case, the sensitivity of the data, computational requirements, and the level of privacy protection required.

The following articles provide more information to get you started on a deeper dive on Differential privacy for LLMs if you so desire . It’s a rabbit hole so you have been warned. They’re friendly rabbits though !

https://arxiv.org/pdf/2204.07667.pdf

https://www.private-ai.com/2022/10/26/top-7-privacy-papers-for-language-models/

Deploy the model in a secure environment: This involves taking steps to protect the model from unauthorised access. Use techniques such as IAM, VPC controls and encrypting data at rest.

Reverse engineering of source training data

Reverse engineering the source training data for large language models are complex and multifaceted. There are both potential benefits and risks associated with this activity

Benefits are transparency and accountability and by providing help to improve the understanding of how large language models work . By understanding the training data, researchers can better understand the biases and limitations of these models.

Reverse engineering of source training data can be used to attack large language models. By understanding the training data, attackers can develop techniques for generating text that is more likely to be accepted by the model. This can be used to create fake news, spam, or other forms of harmful content. In addition, reverse engineering can also lead to the disclosure of confidential information. If the training data contains sensitive information, such as personal data or trade secrets, then reverse engineering could lead to the unauthorized disclosure of this information.

There are a few ways to reverse engineer the source training data for large language models.

Distillation is a technique for transferring knowledge from a large model to a smaller model. The large model is first trained on a large dataset of text. The smaller model is then trained on a smaller dataset of text, but it is also given the predictions of the large model as input. This helps the smaller model to learn the patterns in the text that the large model has learned

Model extraction is a technique for extracting the parameters of a model from its code. The parameters of a model are the values that the model learns during training. Once the parameters have been extracted, they can be used to train a new model.

Analysis of model output: By analysing the output of a large language model, it is possible to identify the sources of the data that the model was trained on. For example, if the model is trained on a dataset of news articles, then the model’s output will likely contain words and phrases that are commonly found in news articles. By analysing the model’s output, it is possible to identify the sources of the data that the model was trained on.

How to mitigate:

- Data obfuscation/anonymization This can be done by removing identifying information, replacing words with synonyms, and adding noise to the training data ( see Differential privacy below). For example, if the training data includes a list of names, the names could be replaced with numbers.

- Deploy differential privacy techniques to add noise to a dataset in a way that protects any PII data in the dataset. This means that it is still possible to use the model to make predictions, but it is more difficult for an attacker to learn anything about the PII data involved in the creation of the model.

- Data can be aggregated or combined from multiple sources to make it harder to trace back specific data points to their original sources. Aggregating diverse datasets helps to blend the characteristics of different sources and makes it more challenging to identify individual data samples.

- Model protection is a technique for making the model itself more difficult to access and use. This can be done by encrypting the model’s parameters so that they cannot be read without access to the encryption key. Storing the model in a secure location is also a technique that can be employed.

- The encryption key can be stored in a secure location, such as a hardware security module (HSM). This makes it very difficult for attackers to access the model’s parameters, even if they have access to the model’s code.

- Implementation of robust data handling practices to ensure that the training data is securely stored, accessed, and used. This involves employing encryption, access controls, and monitoring systems to prevent unauthorised access or data breaches.

- Limit model outputs:Implementing measures to prevent the model from generating certain sensitive information or restricting the output in specific domains can help safeguard against potential misuse.

- Establishing clear ethical guidelines and principles for data usage is crucial. Organisations should prioritise user privacy, ensure compliance with applicable laws and regulations, and define responsible practices for handling sensitive information.

There is no perfect way to mitigate against reverse engineering. However, by using a combination of the techniques described above, it is possible to make it more difficult for attackers to reverse engineer the training data for large language models.

In-correct Content generation

LLMs work by analysing the relationships between words in a text corpus and learning to predict the likelihood of a given sequence of words. Typically the LLM is passed a sequence of input tokens, such as a sentence or a paragraph, and generates a sequence of output tokens, which can be a prediction of the next word or a generated sentence. The output tokens are generated by sampling from a probability distribution that is learned during the training process. This is how ChatGPT and Bard behave.

The large corpuses of text the LLM is trained on can be out of date, of poor quality , from dubious sources on the internet ( which may be up to date but can be factually incorrect & difficult to validate).

Understanding how LLMs work and what they are trained on it becomes clear why there are so many reports of them making things up (hallucinations) and generating incorrect content. The issue is they come across as very plausible even when the response is nonsense or just factually incorrect . The implications of in-correct responses can have serious consequences such as the wrong diagnosis, encouraging the person using the LLM to follow poor advice and many more unfortunate outcomes .

How to mitigate:

Use a high-quality training dataset. The quality and freshness of the training dataset is critical to the accuracy of the model. The dataset should be comprehensive, covering a wide range of topics and formats. It should also be accurate, with no errors or biases. However if you are using a LLM that you do not have direct control of the dataset being used you will have to use a number of other techniques.

Train the LLM on a smaller, task-specific dataset. This allows the LLM to learn the specific nuances of the task, such as the vocabulary, grammar, and patterns of the language used in the task domain. This is known as Fine-tuning. Fine-tuning is typically done after the LLM has been pre-trained on a large, general-purpose dataset.

Context stuffing. is a technique for feeding LLMs with additional information that is not included in the prompt or dialogue. This information can be used to improve the model’s understanding of the request and to generate more informative responses. For example, if you wanted to use a LLM to generate instructions for making a cup of coffee using a coffee machine, you could first feed the model with the following information:

-

The make and model of the coffee machine.

-

The type of coffee you want to make.

-

The amount of water and coffee grounds to use.

-

The steps involved in making the coffee.

This could look like the following :

"ACME Coffee maker , black coffee, 8 ounces of water, 2 tablespoons of ground coffee"Once you have provided the model with this information, you can ask it to generate the instructions for you. The model will use the information you have provided to generate instructions that are specific to your situation.

Human reinforcement can help improve the accuracy of responses by providing feedback to the model on its performance. This feedback can be in the form of positive or negative reinforcement, depending on whether the model’s response is accurate or not. For example, if the model is asked to answer the question “What is the capital of France?” and it responds with “Paris,” the user can provide positive reinforcement by saying “Correct!” This will help the model to learn that its response was accurate and that it should continue to generate responses like that. Alternatively , if the model is asked the same question and it responds with “London,” the user can provide negative reinforcement by saying “Incorrect!” This will help the model to learn that its response was inaccurate and that it should not generate responses like that in the future.

Model bias

LLMs are trained on large datasets of text and code and can inherit biases from the data used to train them. Enterprises may be concerned about the potential impact of biased models on their operations, customers, or reputation.

There are a number of ways to mitigate the effects of model biases when working with LLMs.

The first step to mitigating bias is to be aware of its potential. This means understanding how bias can manifest in language models and being on the lookout for signs of bias in the model’s output.

It is important to be transparent about the data that was used to train the language model. This will help users to understand the potential biases that may be present in the model. Clearly documenting the limitations and potential biases of the model, along with the steps taken to mitigate them, is important for transparency and accountability. This helps users understand the model’s strengths and limitations and encourages responsible usage.

There are a number of tools available that can help users to identify bias in LLMs. These tools can be used to flag potential biases in the model’s output and to help users to understand the potential impact of these biases. Some tools are

- AI Fairness 360 (AIF360) is an open-source toolkit developed by IBM that provides a comprehensive set of metrics, algorithms, and explanations to help detect and mitigate biases in AI systems. It includes fairness metrics, bias mitigation techniques, and algorithms to measure and mitigate bias in datasets and models.

- What-If Tool , developed by Google, is a web-based tool that allows users to analyze the behavior and outputs of machine learning models. It provides an interactive interface to explore model outputs across different slices of data and compare the impact of various features, helping users identify potential biases in model predictions.

- FairML is an open-source toolkit that can be used to detect and mitigate bias in machine learning models. It includes a number of features that make it well-suited for detecting bias in language models, such as the ability to compare the performance of a model on different subsets of data.

Diverse Training Data: Ensuring that the training data used for the model is diverse and representative of different perspectives and demographics can help reduce biases. This involves including a wide range of sources and viewpoints to avoid skewing the model towards a particular bias.

Implementing robust mechanisms to detect and analyze biases in the model’s outputs is crucial. This can involve employing techniques such as pre-training and fine-tuning on carefully curated datasets to identify and quantify biases present in the model.

Bias-Aware Fine-Tuning: During the fine-tuning process, it is possible to incorporate additional steps to make the model more aware of and sensitive to biases. This can involve augmenting the training data with specific examples that highlight potential biases and explicitly instructing the model to minimize those biases in its responses.

User Feedback and Iterative Improvement: Actively seeking feedback from users and the wider community is essential to identify and address biases that may arise in real-world usage. Open dialogue with users can help uncover biases and prompt improvements to the model’s behavior and responses.

Collaborative Efforts: Engaging in collaborative efforts with external researchers, organizations, and the wider AI community can help identify biases and develop shared best practices for bias mitigation. Sharing knowledge and insights can lead to more effective strategies and better overall results.

It is important to note that there is no single solution to the problem of bias in large language models. However, by using a combination of the methods described above, it is possible to reduce the impact of bias and make these models more fair and equitable. Ongoing research, collaboration, and continuous improvement are necessary to make progress in this area.

Harmful content generation

There are a number of reasons why LLMs can be used to generate harmful content

LLMs are trained on massive datasets of text and code. This data can include harmful content, such as hate speech, misinformation, and violence. When LLMs are trained on this data, they learn to generate text that is similar to the harmful content that they were trained on.

LLMs are very good at generating text that is similar to human-written text. This means that it can be difficult to distinguish between text that was generated by an LLM and text that was written by a human. This can make it easier for people to spread harmful content, without being aware of the harm that they are causing.

Lack of Context Understanding: While language models like GPT-3 have impressive language generation capabilities, they lack true understanding of the context and nuances of human communication. As a result, they may generate responses that are inappropriate, offensive, or harmful without fully comprehending the consequences.

Reflecting Human Inputs: LLMs learn from human-generated text, including both high-quality and low-quality inputs. If users provide harmful or biased prompts to the model, it may generate responses that amplify or expand upon those negative aspects, potentially leading to harmful content.

Incomplete Training Data Filtering: Filtering and moderating training data is a complex process, and it is challenging to ensure that all harmful or inappropriate content is removed. Some harmful language or biased perspectives might still be present in the training data, and the model can inadvertently reproduce such content during generation.

Amplification of Extreme Views: LLMs aim to generate diverse and engaging responses. However, they may inadvertently amplify extreme or harmful views present in the training data or prompt inputs. This can lead to the spread of misinformation, hate speech, or other harmful content.

There are a number of ways to mitigate the risk of LLMs being used to generate harmful content.

- Train LLMs on filtered datasets: This can be done by manually removing harmful content from the dataset, or by using automated tools to detect and remove harmful content. For example, Google AI has developed a tool called Text-To-Text Transfer Transformer (T5) that can be used to filter harmful content from datasets. ( see end of this post for a walk through of how to do this)

- Use tools to detect harmful content with LLMs: This can be done by using algorithms that are specifically designed to detect harmful content. For example, Perspective API that can be used to detect harmful content in text.

- Red Teaming Language Models with Language Models describes ways to automatically find failure cases . By identifying the failure cases you are then in a position to fix harmful model behaviour

- Educate users about the risks of LLMs: This can be done by providing users with information about how to identify and avoid harmful content, and by making them aware of the potential consequences of using LLMs to generate harmful content. Use sources such as https://ai.google/responsibility/responsible-ai-practices/ as a guide .

It is important to note that these mitigation techniques are not perfect. There is always the possibility that LLMs will be used to generate harmful content, even if these mitigation techniques are in place. However, by implementing these mitigation techniques, you can help reduce the likelihood that LLMs will be used to generate harmful content, and we can make it more difficult for people to use LLMs to generate harmful content.

Prompt injection

Prompt injection is a security vulnerability that can be exploited to trick a large language model into generating unintended or malicious output. This can be done by carefully crafting the prompt text in a way that exploits the model’s natural language processing capabilities. For example, a malicious user could inject a prompt that contains code that the model will execute, or that contains instructions for the model to generate text that is harmful or offensive. Prompt injection attacks try to accomplish different outcomes including

- Code injection: This type of attack involves injecting malicious code into the prompt that is used to generate output. This code can then be executed by the LLM, which could lead to a number of different problems, such as stealing the user’s data or displaying malicious content. For example : If you use an LLM to generate a translation of a text from English to French. The prompt that you could use is:

Translate the following text from English to French:

"This is a test."

An attacker could inject malicious text into the prompt by changing it to:

Translate the following text from English to French:

"This is a test. <script-tag-here>alert('This is an attack!') </script-tag-here>"

When the LLM generates the translation, it will also generate the malicious JavaScript code. This code will then be executed in the user’s browser, which could lead to a number of different problems, such as stealing the user’s data or displaying malicious content.

- Data injection: This type of attack involves injecting sensitive data into the prompt that is used to generate output. This data could then be stolen by the attacker, which could lead to a number of different problems, such as identity theft or financial fraud.

- Control injection: This type of attack involves injecting commands into the prompt that is used to generate output. These commands can then be executed by the LLM, which could lead to a number of different problems, such as taking control of the user’s device or causing the LLM to crash.

Prompt injection attacks can be classified as :

- Active prompt injection: In this type of attack, the attacker injects malicious text into the prompt that is used to generate output. This can be done by tricking the user into entering malicious text, or by injecting malicious text into a web page or other document that is used to generate the prompt.

- Passive prompt injection:. In this type of attack, the attacker does not inject malicious text into the prompt. Instead, they wait for the user to generate output and then inject malicious text into the output. This can be done by tricking the user into generating output that contains malicious text, or by injecting malicious text into a web page or other document that is used to generate the output.

Both active and passive prompt injection attacks can be used to manipulate LLMs. In active attacks, the attacker can control the output that is generated by the LLM. In passive attacks, the attacker can only control the output that is generated by the user. However, both types of attacks can be used to cause harm, such as stealing data, spreading malware, or causing other types of damage.

It is difficult to mitigate against prompt injection attacks but implementing some of the following techniques can help

- Using a secure LLM provider: Make sure that the LLM provider that you are using has taken steps to secure their models against prompt injection attacks. This could include things like filtering user input, using sandboxes, and monitoring for suspicious activity.

- Using a secure prompt: Do not use any prompts that you did not generate yourself. If you are unsure whether a prompt is safe, it is best to avoid using it.

- Monitoring your output: Be sure to monitor the output that is generated by the LLM for any signs of malicious content. If you see anything suspicious, report it to the LLM provider immediately.

- Using a secret phrase: One way to help prevent prompt injection attacks is to use a secret phrase that must be included in the prompt in order for the LLM to generate output. This makes it more difficult for attackers to inject malicious text into the prompt.

- Using parameterized queries: Another way to help prevent prompt injection attacks is to use parameterized queries. This involves passing parameters to the LLM as part of the prompt. This makes it more difficult for attackers to inject malicious text into the prompt, as the LLM will only generate output based on the parameters that are passed to it.

- Using input validation: Input validation is another technique that can be used to help prevent prompt injection attacks. This involves checking user input for malicious content before it is passed to the LLM. This can help to prevent attackers from injecting malicious text into the prompt.

If you want to learn everything there is to learn about prompt injection you will soon discover Prompt injection explained, with video, slides, and a transcript and the magnificent Simon Willison , El Reg likes him too. He really is doing good work imho!

A couple of other good reads

Exploring Prompt Injection Attacks | NCC Group Research Blog

https://haystack.deepset.ai/blog/how-to-prevent-prompt-injections

Unauthorised access to model training process

It is possible to insert backdoors into large language models that could be used to gain unauthorized access to the model or the systems it is used on.

Attacks on the model training process include:

- Attacking the model training process in order to corrupt the model or introduce bias into the model.

- Attacking the model deployment process in order to disrupt or disable the model.

- Injecting malicious code into the model that can be executed when the model is used. This can cause the model to make incorrect predictions.

- Deleting or corrupting the training data , this will prevent the model from being trained properly.

- Attackers could take control of the model and use it for their own purposes. For example, an attacker could use a chatbot model to spread misinformation or propaganda.

- Changing the model’s parameters: This can change the way the model works, which may make it less accurate or useful.

There are many ways that attackers can gain unauthorised access to the model training process including Social engineering, Phishing, Malware and Zero-day attacks.

It is important to take steps to protect the model training process from unauthorised access. Some of the best practices for doing this include:

- Use strong passwords and two-factor authentication: This will make it more difficult for attackers to gain access to user accounts.

- Keep the software that is used to train the model up to date: This will help to protect against known vulnerabilities.

- Implement guardrails such as IAM, VPC service controls Firewall rules, Monitoring access and suspicious activity and acting upon the alerts in a timely manner: This will help to prevent attackers from gaining access to the model training environment.

- Monitor the model training process for unusual activity: This will help to identify any attempts by attackers to disrupt or damage the model.

- Have a tested Disaster recovery process so you can roll back to a known good state

Compliance/regulatory incompatibility

The use of large language models may raise regulatory compliance concerns, such as those related to data privacy, data protection, and intellectual property.

Large language models can be used to generate content that violates laws and regulations. This could lead to fines or other penalties for the enterprise that uses the model.

The use of large language models is still in its early stages, and there is a lack of clear regulatory guidance. This uncertainty can make it difficult for enterprises to assess the risks and make informed decisions about how to use these models.

Uncertainty aside it would probably be reasonable to assume a set of basic principles regardless of the requirements you know you have to meet and to be ready for the compliance and regulatory requirements yet to be defined. Things are moving fast and getting ahead of potential regulations would be prudent :

-

Be transparent about how the model is trained and used. This includes disclosing the data that was used to train the model, as well as the algorithms and processes that were used to develop it.

-

Ensure that the model is fair and does not discriminate against any particular group of people. This can be done by using techniques such as bias mitigation and fairness testing.

-

Be accountable for the model’s outputs. This means being able to explain why the model generated a particular output, and being able to take steps to correct any errors or biases that may be present.

-

Protect the privacy of the data that was used to train the model. This includes using appropriate security measures to prevent unauthorized access to the data, and ensuring that the data is deleted when it is no longer needed.

-

**Be aware of the applicable regulations **including ones going through the approval process . The specific regulations that apply to your use of LLMs will vary depending on your industry and locality.

-

Obtain the necessary consents. In some cases, you may need to obtain consent from users before using their data to train or train your LLM. For example, the European Union’s General Data Protection Regulation (GDPR) requires companies to obtain consent before processing personal data.

-

Take steps to mitigate risks. There are a number of risks associated with the use of LLMs, such as bias, discrimination, and privacy violations. You should take steps to mitigate these risks by developing and implementing appropriate safeguards.

-

Be prepared to audit your practices. Regulators may audit your practices to ensure that you are complying with the applicable regulations. It is important to be prepared for these audits by keeping records of your data collection, use, and sharing practices.

In addition to adopting a set of principles apply the processes you already have in place such as :

-

Develop a risk assessment. This will help you to identify and assess the risks associated with using large language models, and to develop appropriate mitigation strategies.

-

Implement appropriate controls. This includes technical controls, such as security measures to protect the data, and operational controls, such as processes for managing the model’s outputs.

-

Training your employees. Make sure that your employees are aware of the risks associated with using large language models, and that they know how to mitigate those risks.

-

Monitor your use of large language models. This will help you to identify any potential problems early on, and to take corrective action as needed.

In-secure code creation

LLMs can create insecure code this occurs due to a number of reasons including:

- Generating code that is not secure by design. This can happen if the LLM is not trained on a dataset of secure code, or if the code is generated using a technique that does not take security into account. This can lead to code that is vulnerable to known attacks such as SQL injection, cross-site scripting etc .

- Generating code that is difficult to test. If it’s difficult to test for security vulnerabilities this can make it more difficult to identify and fix security problems and vulnerabilities . Consequently security vulnerabilities may not be discovered until after the code is in use.

- Generating code that is intentionally insecure. Some attackers may use LLMs to generate code that is intentionally insecure, such as malware or phishing attacks.

There are steps you can take to mitigate the risks:

- Use a security scanner to scan the generated code for vulnerabilities.

- Have a security expert review the generated code.

- Use a code review tool to help you find and fix security vulnerabilities.

- Train the LLM on a dataset of code that is free of known vulnerabilities.

- Use “prompt engineering” to help the LLM generate more secure code.

- Use a security-focused testing process. In addition to a security-focused code review process, it is also important to use a security-focused testing process. This process should include a variety of security tests, such as penetration testing and fuzz testing.

Deep Fakes

Large language models can be used to create deep fakes, which are videos or audio recordings that have been manipulated to make it appear as if someone is saying or doing something they never actually said or did.

This last threat in the list may at first glance appear to be a consumer focused issue but what if the target is your CEO or a VP or someone who has access to sensitive data ?

Deepfakes are a growing threat, and there is no one-size-fits-all solution to mitigating them. However, there are a number of things that individuals and organisations can do to reduce their risk of being targeted by deepfakes. There is no single solution that will completely eliminate the threat of deepfakes. However, by taking a combination of mitigating techniques, it can be more difficult for bad actors to use deepfakes to spread misinformation and harm others.

-

Be aware of the signs of a deepfake. Deepfakes can be difficult to detect, but there are some telltale signs that can help you spot them. These include:

- Inconsistencies in the person’s appearance or behaviour.

- Blurry or pixelated areas in the video.

- Strange lighting or shadows.

- Audio that doesn’t match the video.

-

Don’t share or believe everything seen online. It’s important to be sceptical of any video or image that you see online, especially if it’s of someone famous or powerful. If you’re not sure if something is real, do some research to verify it.

-

Use strong passwords and security measures. This is to help protect personal information from being compromised, as it could be used to create a deepfake of you. Make sure to use strong passwords, multi-factor authentication solutions and complementary security measures to protect your data.

-

Report deepfakes to the appropriate authorities. If you see a deepfake that you believe is harmful or malicious, report it to the appropriate authorities. This could help to prevent the deepfake from being shared and used to cause harm.

-

Invest in solutions that can be used to detect and prevent deepfakes. These include artificial intelligence (AI)-based tools that can identify inconsistencies in videos and audio, as well as watermarking and encryption technologies that can make it more difficult to create and distribute deepfakes. Intel’s fakecatcher and Google DeepMind’s synthid are two ways to help mitigate.

-

Keep up to date in advances in the field

———

Bonus walkthrough on using T5 to detect and remove harmful content

Pre-training:

Pre-training involves training the T5 model on a large corpus of text data, which includes both clean and potentially harmful content. During pre-training, the model learns to predict missing or masked words and generate coherent text. However, it is important to ensure that the pre-training dataset is carefully curated to minimise the inclusion of harmful content.

Fine-tuning for Filtering:

After pre-training, the T5 model can be fine-tuned specifically for the task of filtering harmful content. The fine-tuning process involves training the model on a smaller dataset that is annotated or labelled to identify and classify harmful content. Here’s how the fine-tuning process can be approached:

Dataset Preparation:

Collect or create a dataset that consists of examples of both harmful and clean content. Annotate or label each example to indicate whether it is harmful or not. The annotations can be binary (harmful or not harmful) or multi-class (e.g., hate speech, offensive language, explicit content, etc.).

Model Configuration:

Modify the T5 model architecture and the training objective to suit the filtering task. The model can be adapted to perform binary classification (harmful or not harmful) or multi-class classification (e.g., classifying into different types of harmful content).

Fine-tuning Process:

Initialise the T5 model with the pre-trained weights and then fine-tune it on the annotated dataset. During fine-tuning, the model learns to associate the input text with its corresponding harmful or clean label. This process involves optimising the model’s parameters using techniques such as gradient descent and backpropagation.

Evaluation and Iterative Improvement:

Evaluate the fine-tuned model’s performance on a validation set to assess its effectiveness in filtering harmful content. Iterate on the fine-tuning process by adjusting hyperparameters, training duration, or dataset composition to improve the model’s accuracy, precision, recall, and overall performance.

It’s worth noting that creating a comprehensive harmful content filter is a challenging task, and it requires careful consideration of the specific types of harmful content that need to be filtered. The fine-tuned T5 model can be further enhanced by incorporating additional techniques such as ensembling multiple models, incorporating external knowledge bases, and integrating human review processes to address the limitations of purely automated filtering systems.