An Adventure with my twitter feed using BigQuery

I’ve been using Twitter for over 10 years now and I was thinking it would be cool to analyse my tweets over the years. It also would give me a new dataset that I could use when looking at data related stuff ( I find it easier to use data I actually care about when exploring data things).

You can download your twitter archive so there is no need to use the twitter API to get hold of my data. Hoorah!

As a bonus if I was able to do this simply then it may get other folks who aren’t interested in streaming twitter data to have a bit of fun with their own tweets while getting hands on with BigQuery ( There’s a free tier!) .

If you want to stream tweets into GCP then any of these posts by Sara or Lee should help get you started on that route

comparing tweets about trump hillary with natural language processing

analyzing data with bigquery and machine learning apis/

This post is a description of the ETL process I used and the subsequent initial analysis. For those of you who just want to start your own adventures with your own twitter feed dataset. So I’ll stop twittering on and get on with it 😀

The Extract phase

I downloaded my twitter data , You can download yours too. Just go to your profile select “settings & privacy”, then from “Your Twitter data” scroll down and click download your twitter data. You’ll get an email notification with a link when it’s ready to be downloaded.

The download is a zip file.

The dataset

You get your archive as a zip file. Mine was just over 1/2 GB. At the time of the download it was 20.9 k tweets since 2009 when I joined twitter.

The archive consisted of a set of js files that included profile information, my Tweets, my DMs, Moments ( needless to say I had none of these!) , media (images, videos and GIFs ) I’d attached to Tweets , a list of followers, a list of accounts following me, my address book, Lists I’ve created, are a member of, or are subscribed to.

Each file Is succinctly described in the readme that is supplied with the download

I was going to start my adventures by just using the tweet.js file

The README describes this file as

" Tweets posted to account. This record contains the API output of Tweets for this account. Definitions for each of the variables that may be included in any particular Tweet are available in our API documentation "

or you can look at the schema def in BigQuery

Introduction to Tweet JSON page is another page that gives you info on the schema.

The Transform phase

Alas, you can’t just upload your tweets.js file you need to do a little transformation to get it into a format that BigQuery can deal with. I have no idea why they don’t provide the archive files in JSON as you can’t do a lot with a bunch of js files really so transformation would be required. I found this great little bash script to start that for me .

Although I have no idea what the csv file he refers to is or how you get hold of that. There was no csv file in my archive! Guess If I had the csv I wouldn’t be writing this post though.

I ran the script against my tweet.js file and it gave me a tidied up tweet.json but crucially it didn’t add a newline between each record. So I then used handy jq to sort that out for me with this single line :

cat tweet.json | jq -c '.[]' > mytweets.json

( Note the twitter-archive-to-json shell script removed the leading “[{” so fix that before running the jq command)

The Load phase

If you don’t already use GCP ( go on you know you want to!) you can still easily do what I’ve done by signing up to use the GCP free tier

At the time of writing this post the free tier gives you 1 TB of BigQuery queries per month and you can store 5 GB on cloud storage before you get charged.

I’m going to assume you have your project set up and ready to go though!

I created a cloud storage bucket & uploaded my transformed file

You can do this via the console & if you are new to GCP then go ahead and try doing that but I just used a couple of gsutil commands to create the bucket and copy up the JSON file.

gsutil mb gs://my-bucket/

gsutil cp mytweets.json gs://my-bucket

Make sure that your bucket and tweets are not public . It’s good practice to not make your bucket and the objects stored in them publicly accessible unless you need to do so say for storing static assets for a web app. There are plenty of visual indicators in the console to make sure you aren’t inadvertently leaking data you didn’t mean to.

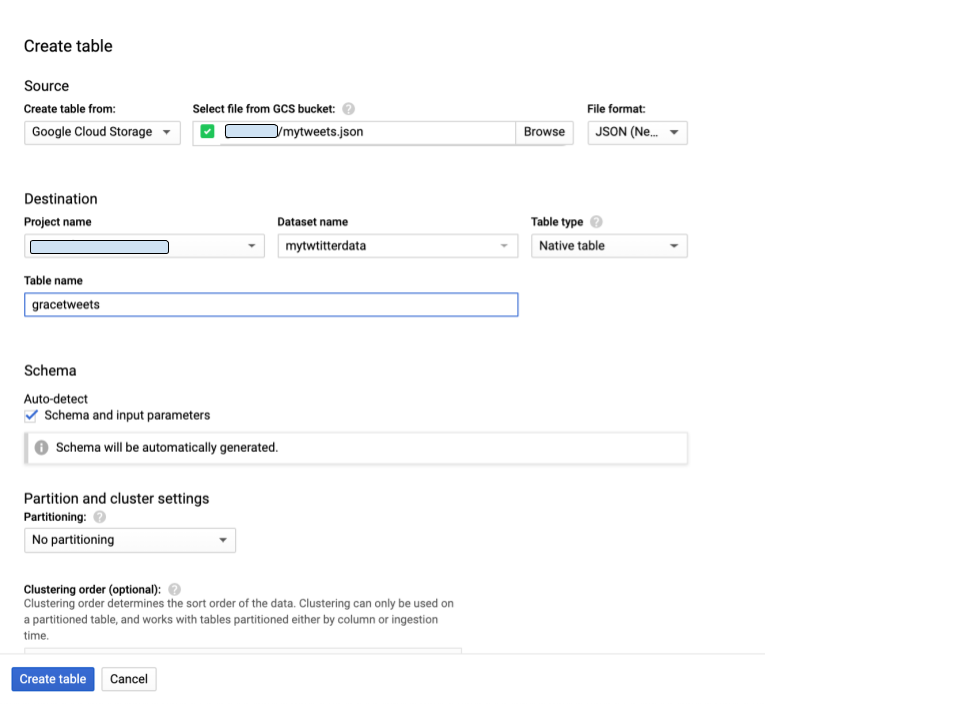

I created a BigQuery dataset and table using the console and followed the steps here to load my Newline JSON dataset from a cloud storage bucket into Big Query letting BigQuery autodetect the schema.

Querying my twitter data

So now the real fun began

The first query I did was to check how many records got loaded into BigQuery

SELECT count(*) FROM 'myproject.mytwtitterdata.gracetweets'

This told me I had 20867 tweets ! Good that sounded about right. Now I wanted to start asking past me some questions.

The Introduction to Tweet JSON gives you info on the schema or you can look at the schema def in BigQuery

BigQuery uses standard sql now which is great as there isn’t really any need to reset your brain if you are already used to Standard SQL This link has the query syntax .

A couple of early queries i tried

SELECT full_text, created_at FROM 'myproject.mytwtitterdata.gracetweets' WHERE full_text LIKE '%flowchart%' order by created_at

Surprisingly only gave me 30 rows

SELECT full_text, created_at FROM myproject.mytwtitterdata.gracetweets' WHERE full_text LIKE LOWER('%googlecloud%') order by created_at

Gave me 610 rows

Then I thought wonder how many tweets combined flowchart & googlecloud

SELECT full_text, created_at FROM 'myproject.mytwtitterdata.gracetweets' WHERE full_text LIKE LOWER('%googlecloud%') and full_text LIKE LOWER('%flowchart%') order by created_at

Only 11!

Thanks to @felipehoffa who had a few tweet related queries here I was able to easily find which of my hashtagged tweets got retweeted the most. No suprises really I guess that the top retweeted tweet was a tweet with a hashtag of #googlecloud 😃

SELECT hashtag, SUM(retweets) total_retweets , ARRAY_AGG(STRUCT(retweets, full_text) ORDER BY retweets DESC LIMIT 1) top_tweet FROM ( SELECT REGEXP_EXTRACT_ALL(full_text, r'(?i)#[^ ,:\.\";\!]*') hashtags, full_text, retweet_count retweets FROM 'myproject.mytwtitterdata.gracetweets' a ), UNNEST(hashtags) hashtag WHERE ARRAY_LENGTH(hashtags)>0 GROUP BY 1 ORDER BY 2 DESC LIMIT 1000