Intro to Gatekeeper Policies

In my incredibly narrow and brief tour of auditing and enforcement policies for GKE, I spoke about OPA and Gatekeeper. In this post I am having a look at creating Gatekeeper policies. This, however, meant getting to grips with Rego!

So what is Rego?

To understand what Rego is, you need to know what Open Policy Agent (OPA) is. I discussed it in my earlier post, but the key thing is that it's a general-purpose policy engine.

Rego is OPA's native query language. It's a declarative language used to write the policies that OPA evaluates.

There are just a few more definitions, and a bit about how things relate, that you need to know before I get started on creating Gatekeeper policies. Hopefully my journey won't give you a headache.

Custom resources are extensions of the Kubernetes API. They let you store and retrieve structured data. When you combine a custom resource with a custom controller, custom resources provide a true declarative API.

A declarative API allows you to declare, or specify, the desired state of your resource. The controller tries to keep the current state of k8s objects in sync with the desired state, as defined in the structured data stored in the custom resource.
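To make "keep the current state in sync with the desired state" a bit more concrete, here's a toy Python sketch of the comparison a controller performs on each pass. It's an illustration only: `reconcile` and the idea of state as replica counts per name are hypothetical, and a real controller watches the Kubernetes API and acts on objects rather than returning strings.

```python
def reconcile(desired: dict[str, int], current: dict[str, int]) -> list[str]:
    """Hypothetical reconcile step: list the actions that would move the
    current state towards the desired state (state = replica counts)."""
    actions = []
    # Look at everything that is either desired or currently running.
    for name in sorted(desired.keys() | current.keys()):
        want = desired.get(name, 0)
        have = current.get(name, 0)
        if have < want:
            actions.append(f"create {want - have} x {name}")
        elif have > want:
            actions.append(f"delete {have - want} x {name}")
    return actions


if __name__ == "__main__":
    # Desired: 3 "web" replicas and nothing else; current: 1 "web", 2 stale "old".
    print(reconcile({"web": 3}, {"web": 1, "old": 2}))
```

Running it prints `['delete 2 x old', 'create 2 x web']`: the controller's job is to keep closing that gap until the list is empty.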

Once a custom resource is installed, users can create and access its objects using kubectl, just as they do for built-in resources like Pods.

The CustomResourceDefinition (CRD) API resource allows you to define custom resources. Defining a CRD object creates a new custom resource with a name and schema that you specify. The Kubernetes API serves and handles the storage of your custom resource.

Gatekeeper enforces CRD-based policies executed by OPA.

To work with Gatekeeper policies you need to create Constraints and Constraint templates.

A Constraint is a declaration of requirements that a system needs to meet. Constraints themselves are written in YAML; the Rego that checks them lives in the Constraint template.

A Constraint template describes the Rego logic that enforces the Constraint and the schema for the Constraint, which includes the schema of the CRD and the parameters that can be passed into a Constraint, much like arguments to a function.

Okay, that was a lot of new things, so next I wanted to see how it all fits together in practice.

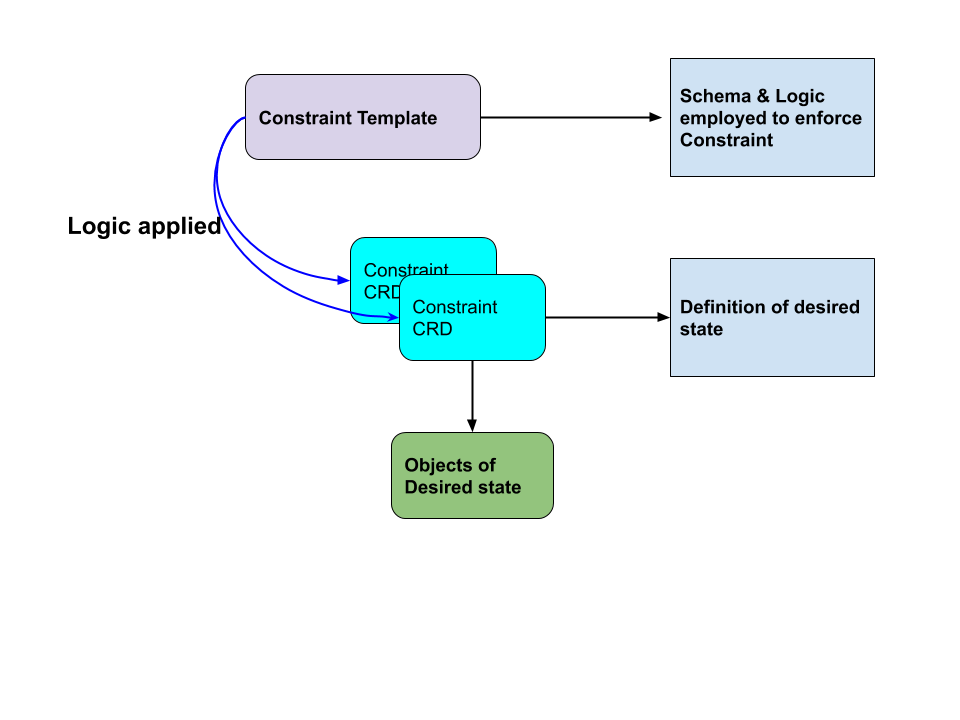

This post actually does a great job of discussing the inter-relationships between Constraint templates and Constraint CRDs. The diagram below is my pictorial representation of the relationship:

So, now that we know the relationships, next was diving into the format of the YAML for the Constraint templates and the Constraints by looking at an example. The example I looked at enforces labels on objects. This is a good one to start with, as it's probably the most common thing you'll want to enforce in your clusters. It's also the example everyone seems to use when discussing Gatekeeper, so why stray from that well-trodden path?

The example folder in the Gatekeeper repo has the YAML files for this walkthrough.

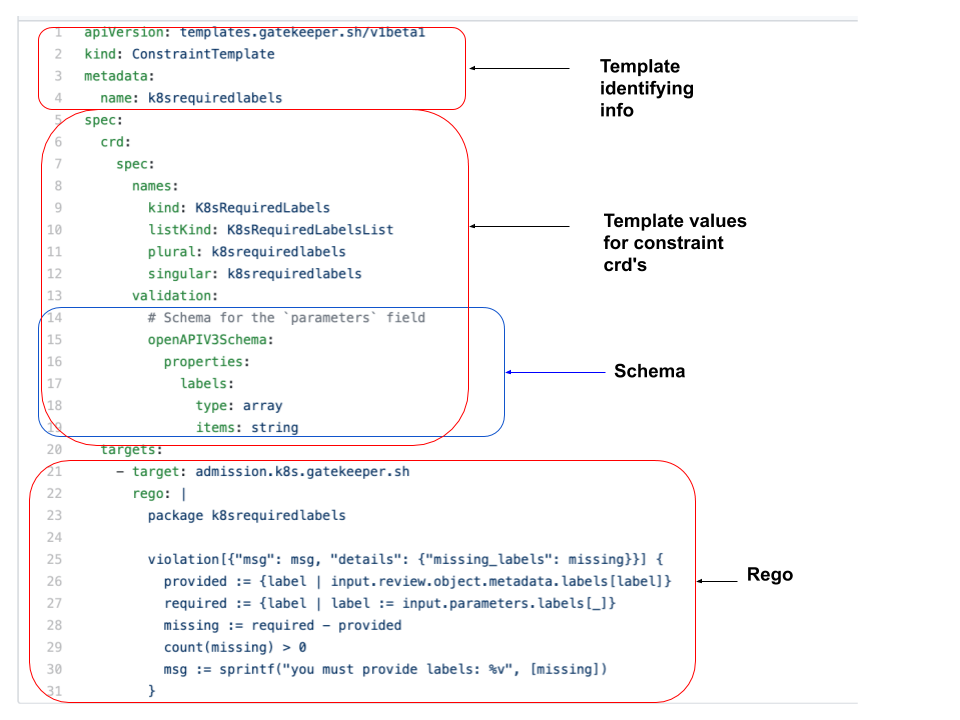

Let's start with the constraint template, a YAML file named `k8srequiredlabels_template.yaml`.

I've broken the constraint template up into the component parts required for creating a constraint template.

The two areas worth dwelling on are the schema and the Rego.

Schema: the schema defines the format of the values passed as parameters to the constraint CRDs that this constraint template enforces. So in this example we are expecting an array of strings called `labels` in the constraint CRD (we'll get to those later).
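As a rough illustration of what the schema buys you, here's a Python sketch that checks a constraint's `parameters` against the shape described above (`labels` as an array of strings). It's an assumption-laden stand-in: in a real cluster the Kubernetes API server validates the constraint against the OpenAPI v3 schema from the template, and `validate_parameters` is a hypothetical helper, not part of Gatekeeper.

```python
def validate_parameters(parameters: dict) -> list[str]:
    """Hypothetical check of constraint parameters against the basic
    template's schema: `labels` must be an array of strings."""
    errors = []
    # Assumption: a missing `labels` field is treated as valid here.
    if "labels" not in parameters:
        return errors
    labels = parameters["labels"]
    if not isinstance(labels, list):
        return ["labels: expected an array"]
    for i, item in enumerate(labels):
        if not isinstance(item, str):
            errors.append(f"labels[{i}]: expected a string")
    return errors


if __name__ == "__main__":
    print(validate_parameters({"labels": ["mydemoproject"]}))  # []
    print(validate_parameters({"labels": ["owner", 42]}))      # ['labels[1]: expected a string']
```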

Note: if you want to dig into other parts of the YAML, e.g. to understand what `spec` is for, refer to the Kubernetes API conventions.

Looking at the Rego:

- `target: admission.k8s.gatekeeper.sh`: the Gatekeeper admission controller will process the CRD that this template enforces.

- `package k8srequiredlabels`: the rules that enforce the logic for a constraint CRD are grouped together within a namespace called a package; in this example the package is called `k8srequiredlabels`.

The Rego rule says that all the labels listed in the constraint CRD must be present on the object. If any required labels are missing, Gatekeeper rejects the request and passes back an error message, `msg`, that describes the problem: in this case, a message telling you to provide the labels defined in the CRD.
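The core of that rule is set arithmetic: collect the label keys on the object, collect the required keys from the constraint's parameters, and take the difference (the same `provided`/`required`/`missing` pattern shows up in the expanded template later on). Below is a Python re-expression of that logic, assuming the input is shaped like Gatekeeper's (`input.review.object` and `input.parameters`). It's a sketch for understanding, not how Gatekeeper evaluates Rego, and the message text only approximates what Rego's `sprintf` with `%v` produces.

```python
def violations(input_: dict) -> list[dict]:
    """Python sketch of the basic k8srequiredlabels rule."""
    obj = input_["review"]["object"]
    # provided: the label keys present on the object under review
    provided = set(obj.get("metadata", {}).get("labels") or {})
    # required: the label keys listed in the constraint's parameters
    required = set(input_["parameters"]["labels"])
    missing = required - provided
    # count(missing) > 0: with nothing missing the rule body is false,
    # so no violation is produced.
    if not missing:
        return []
    msg = f"you must provide labels: {sorted(missing)}"
    return [{"msg": msg, "details": {"missing_labels": missing}}]


if __name__ == "__main__":
    review = {
        "review": {"object": {"kind": "Namespace",
                              "metadata": {"name": "demo", "labels": {"team": "a"}}}},
        "parameters": {"labels": ["mydemoproject"]},
    }
    print(violations(review))
```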

Rego syntax is a little strange, to say the least, but by reading the OPA policy reference and looking at example constraint templates you will probably stop making typos or writing policies where the logic is the wrong way round! The key takeaway for me was that if the rules are true, then it's a violation. So you are defining what will trigger the violation.
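Here's a small Python model of that "true means violation" idea, with hypothetical rule functions standing in for Rego rules: each returns messages describing the bad state, and the simplified `admit` lets a request through only when no rule produced anything. The backwards rule shows what happens when the logic is the wrong way round.

```python
from typing import Callable

Rule = Callable[[dict], list[str]]


def missing_owner(obj: dict) -> list[str]:
    # Right way round: the rule describes the BAD state (no `owner` label).
    labels = obj.get("metadata", {}).get("labels") or {}
    return [] if "owner" in labels else ["you must provide labels: owner"]


def has_owner_backwards(obj: dict) -> list[str]:
    # Wrong way round: this fires when the label IS present,
    # so every compliant object would be rejected.
    labels = obj.get("metadata", {}).get("labels") or {}
    return ["you must provide labels: owner"] if "owner" in labels else []


def admit(obj: dict, rules: list[Rule]) -> tuple[bool, list[str]]:
    """Simplified model: allow only if no rule produced a violation."""
    messages = [msg for rule in rules for msg in rule(obj)]
    return not messages, messages
```

With `missing_owner`, an object labelled `owner` is admitted and an unlabelled one is rejected; swap in `has_owner_backwards` and the outcomes flip.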

So next we need some constraint CRDs for the constraint template to enforce.

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-mydemoproject
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["mydemoproject"]
```

The CRD above requires namespaces to be created with a label of `mydemoproject`. To restrict a constraint to a specific set of namespaces, list the namespaces it should apply to under `spec.match.namespaces`.
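The `match` section decides which objects a constraint applies to. Here's a simplified Python sketch of that check, covering only `kinds` and an optional `namespaces` list. The `matches` helper is hypothetical, and real Gatekeeper matching supports more options (selectors and so on) that this sketch ignores.

```python
def matches(match: dict, obj: dict) -> bool:
    """Hypothetical, simplified version of a constraint's spec.match check."""
    # "v1" -> group "" (the core group); "apps/v1" -> group "apps".
    group = obj["apiVersion"].rpartition("/")[0]
    kind_ok = any(
        group in entry.get("apiGroups", []) and obj["kind"] in entry.get("kinds", [])
        for entry in match.get("kinds", [])
    )
    # Optional namespace restriction; this sketch only looks at
    # metadata.namespace, so it is meant for namespaced objects like Pods.
    namespaces = match.get("namespaces")
    ns_ok = namespaces is None or obj.get("metadata", {}).get("namespace") in namespaces
    return kind_ok and ns_ok
```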

The CRD below is scoped to pods and requires them to have a label with the key `backend`, identifying them as backend pods:

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-backend
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
  parameters:
    labels: ["backend"]
```

This simple but useful example shows how flexible OPA Gatekeeper is and nicely demonstrates how you can reuse a single constraint template definition in different scopes within your cluster.
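To see the reuse in miniature, here's a Python sketch where one function plays the part of the constraint template and two entries play the parts of the two constraints above, each with its own kind and parameters. The names mirror the YAML, but `required_labels`, `CONSTRAINTS` and the crude kind-only matching are hypothetical simplifications.

```python
def required_labels(parameters: dict, obj: dict) -> list[str]:
    """One 'template': the logic is written once and takes parameters."""
    provided = set(obj.get("metadata", {}).get("labels") or {})
    missing = set(parameters["labels"]) - provided
    return [f"you must provide labels: {sorted(missing)}"] if missing else []


# Two 'constraints' reusing the same template with a different scope and parameters.
CONSTRAINTS = [
    {"name": "ns-must-have-mydemoproject", "kind": "Namespace",
     "parameters": {"labels": ["mydemoproject"]}},
    {"name": "ns-must-have-backend", "kind": "Pod",
     "parameters": {"labels": ["backend"]}},
]


def evaluate(obj: dict) -> dict[str, list[str]]:
    """Run every constraint whose kind matches (a crude stand-in for spec.match)."""
    return {
        c["name"]: required_labels(c["parameters"], obj)
        for c in CONSTRAINTS
        if c["kind"] == obj["kind"]
    }
```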

Still on the subject of enforcing labels, the Gatekeeper library has a more interesting and more flexible example.

The Constraint template used in that example has the same component parts as the first one, but with some variations.

The schema:

```yaml
validation:
  # Schema for the `parameters` field
  openAPIV3Schema:
    properties:
      message:
        type: string
      labels:
        type: array
        items:
          type: object
          properties:
            key:
              type: string
            allowedRegex:
              type: string
```

This extends the `labels` parameter so that each entry has a `key` and an `allowedRegex`: a regular expression that the value of that label must match. That makes this really very flexible, as you'll see when we look at an example CRD.

It also adds an optional `message` parameter.

The Rego for this version of the k8srequiredlabels constraint template is expanded to accommodate the changes to the schema:

```yaml
rego: |
  package k8srequiredlabels

  get_message(parameters, _default) = msg {
    not parameters.message
    msg := _default
  }

  get_message(parameters, _default) = msg {
    msg := parameters.message
  }

  violation[{"msg": msg, "details": {"missing_labels": missing}}] {
    provided := {label | input.review.object.metadata.labels[label]}
    required := {label | label := input.parameters.labels[_].key}
    missing := required - provided
    count(missing) > 0
    def_msg := sprintf("you must provide labels: %v", [missing])
    msg := get_message(input.parameters, def_msg)
  }

  violation[{"msg": msg}] {
    value := input.review.object.metadata.labels[key]
    expected := input.parameters.labels[_]
    expected.key == key
    # do not match if allowedRegex is not defined, or is an empty string
    expected.allowedRegex != ""
    not re_match(expected.allowedRegex, value)
    def_msg := sprintf("Label <%v: %v> does not satisfy allowed regex: %v", [key, value, expected.allowedRegex])
    msg := get_message(input.parameters, def_msg)
  }
```

It's easy enough to see how it's an evolution of the Rego we looked at in the previous example. It introduces some new Rego to us:

`msg := get_message(input.parameters, def_msg)`: this sets the message returned on a violation to the one you define in the constraint CRD, falling back to the default message `def_msg` when none is provided. This means each constraint CRD can have a very specific message.
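The two `get_message` definitions behave like an if/else: the first applies only when `parameters.message` is undefined, the second whenever it is set. A Python equivalent, as a sketch (Rego's `not` also treats a literal `false` as missing, which this ignores):

```python
def get_message(parameters: dict, default: str) -> str:
    """Sketch of the two Rego get_message definitions as one function."""
    # First definition: `not parameters.message` -> fall back to the default.
    if "message" not in parameters:
        return default
    # Second definition: use the message from the constraint.
    return parameters["message"]


if __name__ == "__main__":
    print(get_message({}, "you must provide labels"))  # you must provide labels
    print(get_message({"message": "Ask the platform team"}, "you must provide labels"))
```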

`expected.key == key`: here we meet the comparison operator, `==`.

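`not re_match(expected.allowedRegex, value)`: `re_match` is true if the value matches the regex pattern, so with `not` in front this expression is true (and the violation fires) when the label's value does not match `allowedRegex`.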
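Putting those pieces together, the second `violation` rule can be re-expressed in Python roughly as below. This is a sketch: `regex_violations` is a hypothetical helper, and it uses Python's `re.search` as a stand-in for Rego's `re_match`, which (like `re.search`) is unanchored, so any `^`/`$` anchors have to be in the pattern itself. Go and Python regex syntax differ in places, though not for the pattern used in this post.

```python
import re


def regex_violations(labels: dict[str, str], expected_labels: list[dict]) -> list[str]:
    """Sketch of the second violation rule (the allowedRegex check)."""
    msgs = []
    for key, value in labels.items():              # value := ...labels[key]
        for expected in expected_labels:           # expected := input.parameters.labels[_]
            if expected["key"] != key:             # expected.key == key
                continue
            pattern = expected.get("allowedRegex", "")
            if pattern == "":                      # skip undefined or empty allowedRegex
                continue
            if re.search(pattern, value) is None:  # not re_match(pattern, value)
                msgs.append(
                    f"Label <{key}: {value}> does not satisfy allowed regex: {pattern}"
                )
    return msgs
```

One thing this makes easy to check: the dots in `^[a-zA-Z]+.agilebank.demo$` are unescaped, so they match any character, and a value like `alice-agilebank-demo` passes too. Escaping them as `\.` would make the check stricter.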

An example Constraint CRD that is enforced using the newer version of the constraint template looks like this:

```yaml
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: all-must-have-owner
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    message: "All namespaces must have an `owner` label that points to your company username"
    labels:
      - key: owner
        allowedRegex: "^[a-zA-Z]+.agilebank.demo$"
```

As you would expect, the CRD is now modified to match the schema defined in the constraint template.
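For an end-to-end feel, here's a Python sketch that evaluates the `all-must-have-owner` parameters against some example namespace labels, combining both violation rules with the `get_message` fallback. It's a simplified re-expression (a hypothetical `evaluate` helper, with Python's `re.search` standing in for `re_match`), and it makes one behaviour easy to spot: when the constraint sets `message`, that text replaces the default message from both rules.

```python
import re

# Parameters copied from the all-must-have-owner constraint.
OWNER_PARAMS = {
    "message": "All namespaces must have an `owner` label that points to your company username",
    "labels": [{"key": "owner", "allowedRegex": "^[a-zA-Z]+.agilebank.demo$"}],
}


def evaluate(labels: dict[str, str], params: dict) -> list[str]:
    """Sketch: both k8srequiredlabels violation rules plus get_message."""
    default_msgs = []
    # Rule 1: required keys that are missing from the object's labels.
    missing = {entry["key"] for entry in params["labels"]} - set(labels)
    if missing:
        default_msgs.append(f"you must provide labels: {sorted(missing)}")
    # Rule 2: present labels whose value fails a non-empty allowedRegex.
    for entry in params["labels"]:
        key, pattern = entry["key"], entry.get("allowedRegex", "")
        if key in labels and pattern and re.search(pattern, labels[key]) is None:
            default_msgs.append(
                f"Label <{key}: {labels[key]}> does not satisfy allowed regex: {pattern}"
            )
    # get_message: a message set in the constraint replaces every default.
    return [params.get("message", msg) for msg in default_msgs]


if __name__ == "__main__":
    for ns_labels in ({"owner": "alice.agilebank.demo"}, {"owner": "bob@gmail.com"}, {}):
        print(ns_labels, evaluate(ns_labels, OWNER_PARAMS))
```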

Happily, there's a library of examples to start from, which means in many cases you don't need to write your own, or at least you don't have to start from a blank YAML file. A nice thing about these examples is that they come with tests, so you can start off with good habits.

Oh, and always use Gatekeeper's dry run feature. Enforcing policies without a way to test their effect is probably gonna cause a lot of pain, and dry run will help you avoid that scenario!
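In Gatekeeper, dry run is switched on per constraint by setting `spec.enforcementAction: dryrun` (the default is `deny`); violations are then reported rather than used to block requests. The Python sketch below models just that admission decision with a hypothetical `decide` function; where the dry-run violations show up is outside what it models.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    allowed: bool
    messages: list[str] = field(default_factory=list)


def decide(violations: list[str], enforcement_action: str = "deny") -> Decision:
    """Hypothetical admission decision for one constraint's violations."""
    if violations and enforcement_action == "deny":
        # deny: the request is rejected and the messages go back to the user.
        return Decision(allowed=False, messages=violations)
    # dryrun (or no violations): the request goes through unchanged.
    return Decision(allowed=True)
```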

You can also run audits to ensure compliance with your rules.
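Where admission control checks requests as they arrive, audit looks at resources that already exist in the cluster (including ones created before a constraint was added) and reports the ones that violate it. Here's a minimal Python sketch of one audit pass with a hypothetical `audit` function and rule; in Gatekeeper itself, audit runs periodically and records violations in the constraint's status.

```python
from typing import Callable


def audit(objects: list[dict], rule: Callable[[dict], list[str]]) -> dict[str, list[str]]:
    """Hypothetical audit pass: evaluate a rule against existing objects."""
    report = {}
    for obj in objects:
        msgs = rule(obj)
        if msgs:
            report[obj["metadata"]["name"]] = msgs
    return report


def needs_owner(obj: dict) -> list[str]:
    # Example rule: flag objects without an `owner` label.
    labels = obj.get("metadata", {}).get("labels") or {}
    return [] if "owner" in labels else ["you must provide labels: owner"]


if __name__ == "__main__":
    existing = [
        {"metadata": {"name": "payments", "labels": {"owner": "alice.agilebank.demo"}}},
        {"metadata": {"name": "legacy"}},  # created before the constraint existed
    ]
    print(audit(existing, needs_owner))  # {'legacy': ['you must provide labels: owner']}
```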